The evolution of computing technology has led to diverse computer systems categorized by their memory architectures. These classifications reflect how memory is utilized, managed, and optimized for specific tasks. Understanding these categories helps in selecting the right system for applications ranging from everyday computing to specialized industrial use.

Volatile vs. Non-Volatile Memory Systems

Computers are often distinguished by their reliance on volatile or non-volatile memory. Volatile memory, such as RAM (Random Access Memory), requires constant power to retain data, and its contents are lost when power is removed. Systems that prioritize speed, such as personal computers and servers, use volatile DRAM as main memory to hold the code and data of active tasks. In contrast, non-volatile memory (e.g., the flash in SSDs, or ROM) retains data without power, making it suitable for long-term storage. Embedded systems, IoT devices, and firmware-dependent machines rely heavily on non-volatile memory so that their code and configuration persist across power cycles.
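The practical difference shows up in software: anything held only in a program's variables lives in volatile RAM, and data must be explicitly written to non-volatile storage to survive a power loss. The Python sketch below is illustrative only; `persist` and `load` are hypothetical helper names, and even after `os.fsync` returns, real durability can still depend on the drive's own write cache.

```python
import os
import tempfile


def persist(path: str, payload: bytes) -> None:
    """Hypothetical helper: write payload to a file and force it toward non-volatile storage."""
    with open(path, "wb") as f:
        f.write(payload)
        f.flush()             # move Python's buffer into the OS page cache (still volatile RAM)
        os.fsync(f.fileno())  # ask the OS to write the cached pages to the storage device


def load(path: str) -> bytes:
    """Hypothetical helper: read the persisted bytes back."""
    with open(path, "rb") as f:
        return f.read()


if __name__ == "__main__":
    boot_count = 3  # an ordinary variable: held in RAM, gone when power is lost
    path = os.path.join(tempfile.gettempdir(), "boot_count.bin")
    persist(path, str(boot_count).encode())
    print(load(path))  # b'3'
```

The `flush` and `fsync` steps are separate because each one moves data across a different boundary: from the program's buffer into the kernel, then from the kernel's cache to the device.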

Specialized Memory-Centric Architectures

Certain systems are designed around their memory hierarchies to suit specific workloads. For example, supercomputers employ high-bandwidth memory (HBM) and cache-coherent architectures to keep processors supplied with data during complex simulations. Real-time systems, such as those in aviation or medical devices, prioritize deterministic memory access so that every operation completes within a known worst-case time. Another emerging category is in-memory computing, where data is processed directly within or next to memory units instead of being shuttled to a separate processor. Reducing this data movement lowers latency and energy use, which is why the approach is gaining traction in AI training and big data analytics.
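One common way real-time software achieves deterministic memory behavior is to reserve all memory at startup and hand out fixed-size blocks from a free list, so that allocation and release take constant time and fixed-size blocks cannot cause external fragmentation. The `FixedBlockPool` class below is a hypothetical sketch of that pattern; Python itself is not a real-time runtime, so the code only illustrates the structure that a C or Rust version on a microcontroller would follow.

```python
class FixedBlockPool:
    """Hypothetical fixed-size block pool: all storage is reserved once, up front."""

    def __init__(self, block_size: int, block_count: int) -> None:
        self.block_size = block_size
        self._arena = bytearray(block_size * block_count)  # single allocation at startup
        # Stack of free block indices; reversed so the first alloc() returns block 0.
        self._free = list(range(block_count - 1, -1, -1))

    def alloc(self) -> int:
        """Return the index of a free block in O(1); fail fast when exhausted."""
        if not self._free:
            raise MemoryError("pool exhausted")
        return self._free.pop()

    def free(self, index: int) -> None:
        """Return a block to the pool in O(1). No double-free check in this sketch."""
        self._free.append(index)

    def view(self, index: int) -> memoryview:
        """Zero-copy, writable window onto one block of the arena."""
        start = index * self.block_size
        return memoryview(self._arena)[start:start + self.block_size]


if __name__ == "__main__":
    pool = FixedBlockPool(block_size=64, block_count=4)
    blk = pool.alloc()
    pool.view(blk)[:5] = b"hello"  # write into the preallocated block
    print(bytes(pool.view(blk)[:5]))  # b'hello'
    pool.free(blk)
```

The design trades flexibility for predictability: the pool cannot grow, and running out of blocks is reported immediately rather than triggering an unbounded search or a heap expansion.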

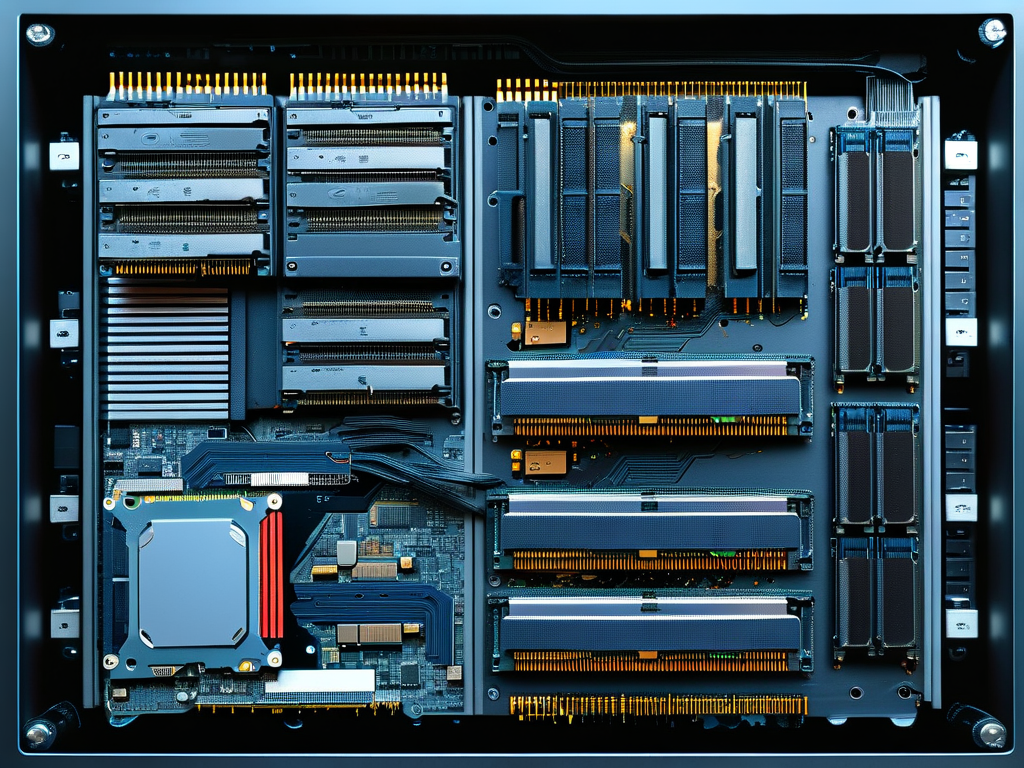

The Role of Memory in Heterogeneous Systems

Modern heterogeneous computing systems integrate multiple memory types to balance performance and efficiency. Discrete GPUs, for instance, combine dedicated GDDR6 memory for high-bandwidth rendering with access to shared system memory for general computations. Similarly, edge computing devices use layered memory pools, combining SRAM for fast access with flash storage for durability, to operate efficiently in resource-constrained environments. These hybrid models highlight the adaptability of memory-centric designs.
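A layered memory pool can be modeled as a small, fast tier that caches recently used items in front of a larger, durable tier. In the sketch below, the `TieredStore` class and its write-through, least-recently-used (LRU) policy are illustrative assumptions rather than a description of any particular edge device; an `OrderedDict` stands in for SRAM and a plain `dict` for flash.

```python
from collections import OrderedDict


class TieredStore:
    """Hypothetical two-tier store: a small LRU fast tier in front of a durable tier."""

    def __init__(self, fast_capacity: int) -> None:
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # stands in for SRAM: small, fast, volatile
        self.durable = {}          # stands in for flash: larger, slower, persistent
        self.hits = 0
        self.misses = 0

    def put(self, key: str, value: bytes) -> None:
        self.durable[key] = value  # write-through: the durable tier is always current
        self._cache(key, value)

    def get(self, key: str) -> bytes:
        if key in self.fast:
            self.hits += 1
            self.fast.move_to_end(key)  # mark as most recently used
            return self.fast[key]
        self.misses += 1
        value = self.durable[key]  # slow path; raises KeyError if the key was never stored
        self._cache(key, value)
        return value

    def _cache(self, key: str, value: bytes) -> None:
        self.fast[key] = value
        self.fast.move_to_end(key)
        if len(self.fast) > self.fast_capacity:
            self.fast.popitem(last=False)  # evict the least recently used entry


if __name__ == "__main__":
    store = TieredStore(fast_capacity=2)
    for k, v in [("a", b"1"), ("b", b"2"), ("c", b"3")]:
        store.put(k, v)
    store.get("a")  # "a" was evicted from the fast tier, so this is a miss
    print(store.hits, store.misses, list(store.fast))  # 0 1 ['c', 'a']
```

Write-through keeps the durable tier current on every write at the cost of extra flash writes; a write-back design would defer those writes but could lose data held only in the fast tier if power fails.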

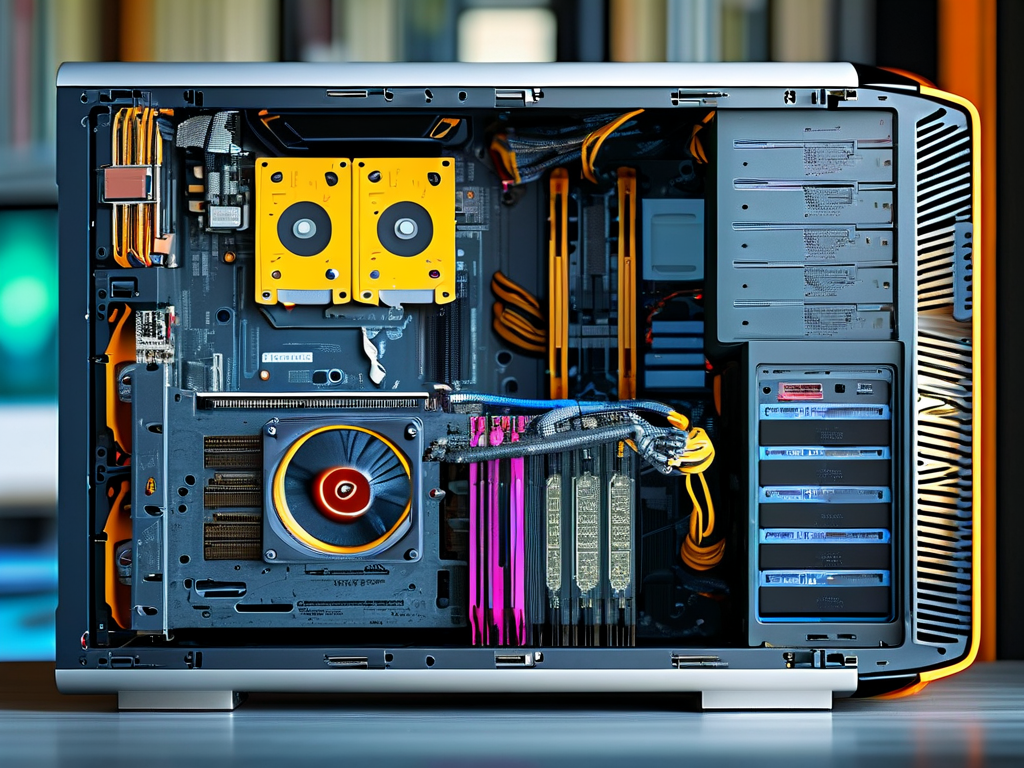

Impact of Memory Management Techniques

Advanced memory management strategies further define computer types. Virtual memory systems, common in desktop and server environments, give each application the illusion of a contiguous address space: the operating system and the memory management unit (MMU) map virtual pages onto physical frames that may be scattered throughout RAM. Conversely, bare-metal systems (e.g., microcontrollers) typically forgo virtual memory to reduce overhead, relying instead on direct physical addressing. Programming languages also shape system design: garbage-collected languages like Java automate memory reclamation, whereas low-level systems written in C or C++ manage allocation and deallocation explicitly for precise control over memory use and timing.
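Virtual-to-physical translation can be sketched with a single-level page table. The model below assumes 4 KiB pages and uses a Python dictionary as the page table; real MMUs walk multi-level tables in hardware and cache translations in a TLB, and the `LookupError` here merely stands in for a hardware page fault.

```python
from __future__ import annotations

PAGE_SIZE = 4096  # assumed 4 KiB pages, a common but not universal size


def translate(vaddr: int, page_table: dict[int, int]) -> int:
    """Translate a virtual address to a physical address via a single-level page table."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)  # virtual page number, offset within the page
    if vpn not in page_table:
        # Stands in for the page fault that hardware would raise for the OS to handle.
        raise LookupError(f"page fault: virtual page {vpn} is not mapped")
    frame = page_table[vpn]  # physical frame number holding this page
    return frame * PAGE_SIZE + offset


if __name__ == "__main__":
    # Contiguous virtual pages 0-2 backed by scattered physical frames.
    page_table = {0: 7, 1: 3, 2: 12}
    for vaddr in (0, 4100, 8202):
        print(hex(vaddr), "->", hex(translate(vaddr, page_table)))
```

Here virtual pages 0, 1, and 2 look contiguous to the program but map to physical frames 7, 3, and 12, which is exactly the fragmentation that virtual memory hides from applications.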

Future Trends in Memory-Driven Computing

Innovations like quantum RAM (qRAM) and neuromorphic memory are reshaping these classifications. Proposed qRAM designs would let a quantum computer query many memory addresses in superposition, while neuromorphic systems mimic synaptic plasticity for adaptive learning. Additionally, NVMe over Fabrics (NVMe-oF) extends the Non-Volatile Memory Express storage protocol across network fabrics such as RDMA, Fibre Channel, and TCP, enabling distributed systems to share storage resources with low added latency. These advancements suggest a future where memory classification becomes even more granular, blending hardware capabilities with software-defined flexibility.

In summary, memory-based computer classifications span volatile/non-volatile designs, specialized architectures, heterogeneous integrations, and management paradigms. As technology advances, these categories will continue to evolve, driven by demands for speed, scalability, and energy efficiency. Understanding these distinctions is critical for optimizing system performance across industries.