In the architecture of computer systems, memory classification forms the backbone of performance optimization and functional design. Rather than taking a one-size-fits-all approach, modern computing relies on a hierarchy of memory types, each tailored to specific tasks. This layered structure ensures efficiency, speed, and reliability – critical factors for applications ranging from mobile devices to enterprise servers.

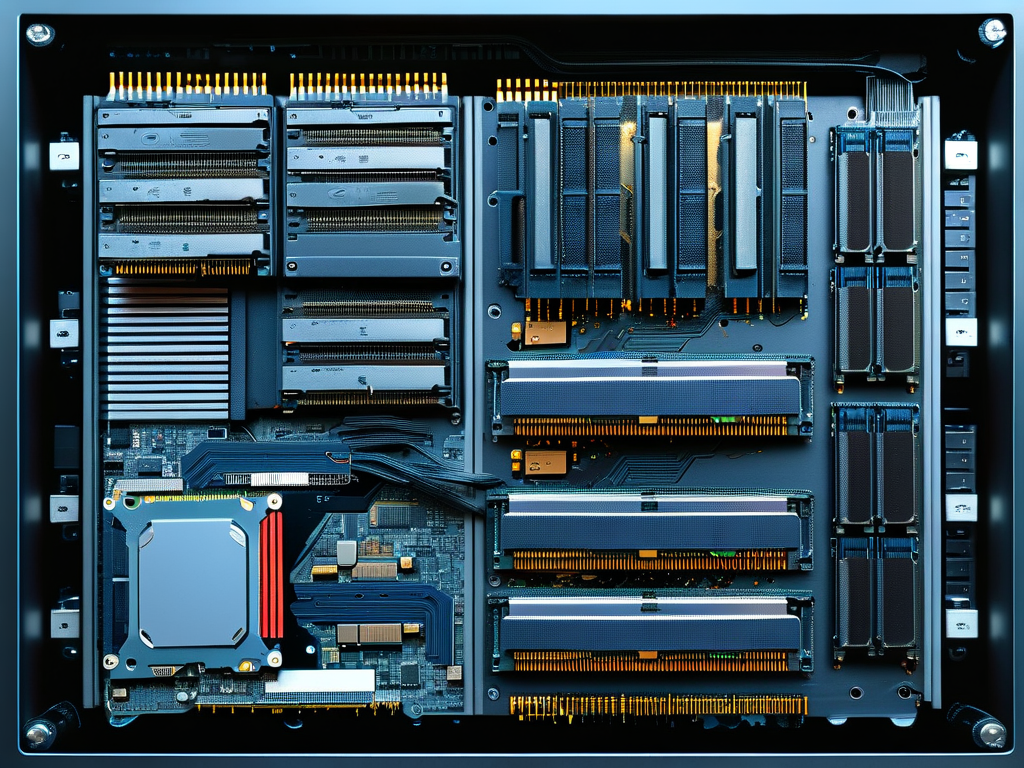

Primary Memory: The Speed Catalyst

At the core of this hierarchy lies primary memory, commonly referred to as Random Access Memory (RAM). RAM is a volatile storage medium that temporarily holds the data and instructions the CPU is actively working on. For instance, when you edit a document, the working copy lives in RAM until you save it to persistent storage. Modern DDR5 modules run at data rates of 6,400 MT/s (megatransfers per second) and beyond, providing the bandwidth needed for responsive multitasking. However, volatility remains its limitation – power loss erases all stored information.
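A DDR5-6400 module performs 6,400 million transfers per second, and multiplying that rate by the width of the data bus gives its theoretical peak bandwidth. The sketch below assumes a standard 64-bit (8-byte) module data bus and ignores ECC bits, refresh, and command overhead; `peak_bandwidth_mb_s` is a hypothetical helper name used only for illustration.

```c
// Hypothetical helper: theoretical peak bandwidth of one memory module, in MB/s.
// Assumptions: 64-bit data bus, ECC bits ignored, no refresh/command overhead.
// transfers_mt_s: data rate in mega-transfers per second (6400 for DDR5-6400).
// bus_width_bits: width of the data bus in bits (64 for a standard module).
double peak_bandwidth_mb_s(double transfers_mt_s, int bus_width_bits) {
    double bytes_per_transfer = bus_width_bits / 8.0;  // 64 bits -> 8 bytes
    return transfers_mt_s * bytes_per_transfer;        // MT/s x bytes = MB/s
}
```

For example, `peak_bandwidth_mb_s(6400.0, 64)` returns 51200.0, roughly 51.2 GB/s per module; sustained bandwidth in practice is lower.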

Secondary Storage: Persistent Data Guardians

Non-volatile memory solutions like Solid-State Drives (SSDs) and Hard Disk Drives (HDDs) serve as long-term repositories. While slower than RAM, these storage types retain data without power. By 2023, high-end PCIe 4.0 NVMe SSDs were delivering sequential read speeds of up to about 7,000 MB/s, narrowing the gap between storage and memory performance, although their access latency remains far higher than that of DRAM. Hybrid systems often combine SSDs for speed with HDDs for cost-effective bulk storage.
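One simple way a hybrid system might decide what goes where is a greedy "hottest data on the SSD" rule. The sketch below is a hypothetical policy, not how any particular product or filesystem tiers data: it sorts files by recent access count and fills the SSD until its capacity runs out, leaving the rest on the HDD. The `file_info` type and `place_files` function are illustrative names.

```c
#include <stdlib.h>

// Hypothetical file record for a two-tier (SSD + HDD) placement sketch.
typedef struct {
    const char *name;
    long size_gb;
    long accesses;  // how often the file was read recently
    int on_ssd;     // output: 1 = place on SSD, 0 = place on HDD
} file_info;

// qsort comparator: most frequently accessed files first.
static int by_accesses_desc(const void *a, const void *b) {
    const file_info *fa = a, *fb = b;
    if (fa->accesses != fb->accesses)
        return (fa->accesses < fb->accesses) ? 1 : -1;
    return 0;
}

// Greedy placement: walk files from hottest to coldest and put each on the
// SSD if it still fits; everything else falls back to the cheaper HDD.
// Reorders the array and returns the SSD capacity (GB) actually used.
long place_files(file_info *files, size_t n, long ssd_capacity_gb) {
    qsort(files, n, sizeof files[0], by_accesses_desc);
    long used = 0;
    for (size_t i = 0; i < n; i++) {
        if (used + files[i].size_gb <= ssd_capacity_gb) {
            files[i].on_ssd = 1;
            used += files[i].size_gb;
        } else {
            files[i].on_ssd = 0;
        }
    }
    return used;
}
```

Real tiering and caching layers usually work at block granularity and adapt over time; this sketch only shows the speed-versus-capacity trade-off in its simplest form.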

Cache Memory: The CPU's Secret Weapon

Nestled closest to the processor, cache memory operates at nanosecond-scale latencies. Three-tier cache architectures (L1, L2, L3) work in concert:

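```c
#include <stdbool.h>

// Simplified cache lookup: latency is set by the first level that hits.
// Figures are rough and illustrative; real latencies vary by CPU.
int access_time_ns(bool l1_hit, bool l2_hit, bool l3_hit) {
    if (l1_hit) return 1;   // L1: smallest and fastest, inside each core
    if (l2_hit) return 4;   // L2
    if (l3_hit) return 10;  // L3: largest cache level
    return 100;             // missed every cache level: go to main memory (DRAM)
}
```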

This tiered approach reduces data retrieval delays, with L1 cache typically integrated directly into CPU cores.
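The payoff of this tiering can be estimated with the standard average memory access time (AMAT) formula, AMAT = hit time + miss rate × miss penalty, applied level by level from main memory upward. In the sketch below, the 1, 4, and 10 ns latencies follow the simplified cache example, while the 100 ns DRAM latency and the miss rates are hypothetical values chosen for illustration; `amat_ns` is an illustrative name.

```c
// Average memory access time for a multi-level hierarchy:
//   AMAT = t[0] + m[0] * (t[1] + m[1] * (t[2] + ...))
// hit_ns[i]:    access latency of level i (the last level is main memory)
// miss_rate[i]: fraction of accesses reaching level i that miss there
// The last level is assumed to always satisfy the request.
double amat_ns(const double *hit_ns, const double *miss_rate, int levels) {
    double t = hit_ns[levels - 1];         // main memory: always hits
    for (int i = levels - 2; i >= 0; i--) {
        t = hit_ns[i] + miss_rate[i] * t;  // fold in each level, bottom up
    }
    return t;
}
```

With latencies {1, 4, 10, 100} ns and assumed miss rates {0.05, 0.5, 0.5}, the average comes to about 2.7 ns, far below the 100 ns every access would cost without caches.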

Emerging Memory Technologies

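The memory landscape continues to evolve with innovations like 3D XPoint (sold by Intel as Optane, a product line Intel discontinued in 2022) and Resistive RAM (ReRAM). These technologies aim to combine non-volatility with speeds approaching those of DRAM, potentially reshaping system architectures. Specialized memory design matters for accelerators too: Google's Tensor Processing Units pair their compute units with memory configurations tailored to machine learning, and Google reported that its first-generation TPU ran inference workloads roughly 15x to 30x faster than contemporary CPUs and GPUs, a gain that comes from the design as a whole rather than from memory alone.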

Classification by Functionality

Memory can also be classified by its operating characteristics:

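- Volatile (loses its contents without power, like DRAM) vs. non-volatile (retains them, like flash memory)

- Static RAM (SRAM, which stores each bit in a latch of typically six transistors and is used for caches) vs. dynamic RAM (DRAM, which stores each bit as charge in a capacitor that must be refreshed periodically and is used for main memory)

- Sequential access (data reached in order, as on magnetic tape) vs. random access (any location reachable in roughly the same time, as in RAM)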

Such classifications guide engineers in optimizing hardware-software interactions.
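The sequential versus random access distinction can be made concrete with a toy cost model. In the sketch below, costs are abstract "steps" rather than real timings, and the model ignores real tape behavior such as fast rewinding; `sequential_cost` and `random_access_cost` are hypothetical names.

```c
// Sequential medium (e.g. tape): the head must move past every block
// between its current position and the target block.
long sequential_cost(long *head_pos, long target) {
    long distance = target > *head_pos ? target - *head_pos
                                       : *head_pos - target;
    *head_pos = target;  // the head now rests at the target block
    return distance;
}

// Random-access medium (e.g. RAM): every address costs the same single step.
long random_access_cost(long target) {
    (void)target;        // cost does not depend on the address
    return 1;
}
```

Reading block 1000 and then block 10 costs 1000 + 990 steps on the sequential medium but only 2 steps with random access, which is why workloads with scattered accesses belong in RAM.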

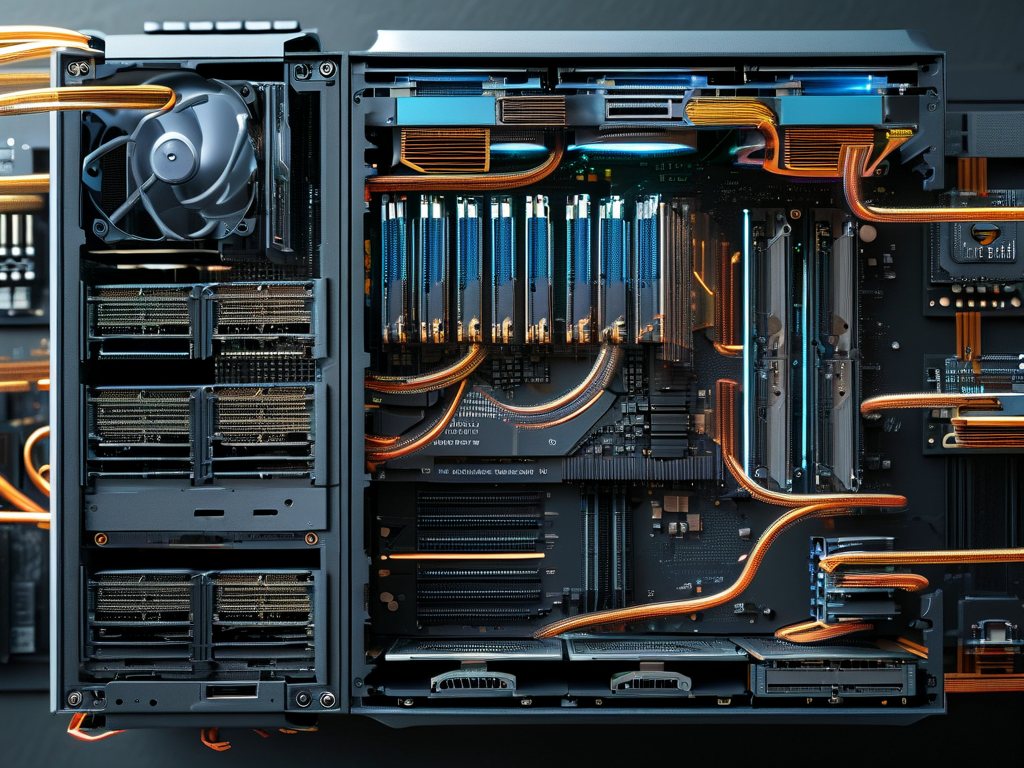

Impact on System Design

Memory allocation strategies directly affect application performance. Video editing software, for example, must carefully balance GPU memory, system RAM, and scratch disk space. When physical RAM runs short, the operating system moves less recently used pages out to disk: the Windows Memory Manager uses paging files to back virtual memory, while Linux uses swap partitions or swap files. Both mechanisms demonstrate how OS design adapts to physical memory constraints.
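The cost of running short on physical memory can be illustrated with a page-replacement simulation. This sketch uses textbook least-recently-used (LRU) eviction as a stand-in policy; neither Windows nor Linux implements exact LRU (both use more elaborate approximations), and `count_page_faults_lru` and `MAX_FRAMES` are hypothetical names. Each fault corresponds to a page that would have to be read back from the paging file or swap space.

```c
#define MAX_FRAMES 16

// Count page faults for a sequence of page references when only `frames`
// pages fit in physical memory, evicting the least recently used page.
// Returns -1 for an unsupported frame count.
int count_page_faults_lru(const int *refs, int n, int frames) {
    int page[MAX_FRAMES];       // page held by each physical frame
    int last_used[MAX_FRAMES];  // time of that page's most recent use
    int used = 0, faults = 0;

    if (frames < 1 || frames > MAX_FRAMES) return -1;

    for (int t = 0; t < n; t++) {
        int hit = -1;
        for (int f = 0; f < used; f++)
            if (page[f] == refs[t]) { hit = f; break; }

        if (hit >= 0) {          // page already resident in RAM
            last_used[hit] = t;
            continue;
        }

        faults++;                // page must be brought in from disk
        int slot;
        if (used < frames) {
            slot = used++;       // a free frame is still available
        } else {
            slot = 0;            // evict the least recently used page
            for (int f = 1; f < frames; f++)
                if (last_used[f] < last_used[slot]) slot = f;
        }
        page[slot] = refs[t];
        last_used[slot] = t;
    }
    return faults;
}
```

For the reference string {1, 2, 3, 1, 4, 2}, this reports 4 faults with four frames, 5 with three, and 6 with two: as physical memory shrinks, more accesses fall through to the much slower disk.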

As quantum computing and neuromorphic architectures emerge, memory systems face new challenges. Samsung's recent Compute Express Link (CXL) memory prototype demonstrates how future systems might ease memory bottlenecks through memory pooling and hardware-level coherence protocols.
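Part of the appeal of pooling is capacity efficiency. Without pooling, each server must own enough DRAM for its own peak demand; with a shared pool, total capacity only needs to cover the peak of the combined demand. The sketch below models capacity only (not latency, bandwidth, or CXL's coherence mechanisms), and the demand data layout and function names are hypothetical.

```c
// demand_gb[h * samples + t] = memory that host h needs at time sample t.

// Without pooling: each host is provisioned for its own peak.
long static_provision_gb(const long *demand_gb, int hosts, int samples) {
    long total = 0;
    for (int h = 0; h < hosts; h++) {
        long peak = 0;
        for (int t = 0; t < samples; t++)
            if (demand_gb[h * samples + t] > peak)
                peak = demand_gb[h * samples + t];
        total += peak;  // sum of per-host peaks
    }
    return total;
}

// With a shared pool: capacity must cover only the peak of the total demand,
// which is smaller whenever hosts do not peak at the same moment.
long pooled_provision_gb(const long *demand_gb, int hosts, int samples) {
    long peak = 0;
    for (int t = 0; t < samples; t++) {
        long sum = 0;
        for (int h = 0; h < hosts; h++)
            sum += demand_gb[h * samples + t];
        if (sum > peak) peak = sum;  // peak of the aggregate demand
    }
    return peak;
}
```

For two hosts whose demands are {64, 16, 16} GB and {16, 16, 64} GB, static provisioning needs 128 GB while a pool needs only 80 GB.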

In summary, understanding memory classification isn't just academic – it's essential for optimizing system performance, reducing energy consumption, and enabling next-generation applications. From the nanoscale circuits in cache memory to petabyte-scale storage farms, each memory type plays a distinct role in the digital ecosystem.