Main memory is fundamental to understanding how computers work. Often referred to as primary storage, it serves as the processor's immediate workspace, holding the data and instructions in use so they can be accessed rapidly. This article explores the core elements that make up computer main memory, their roles, and how they work together to support computing tasks.

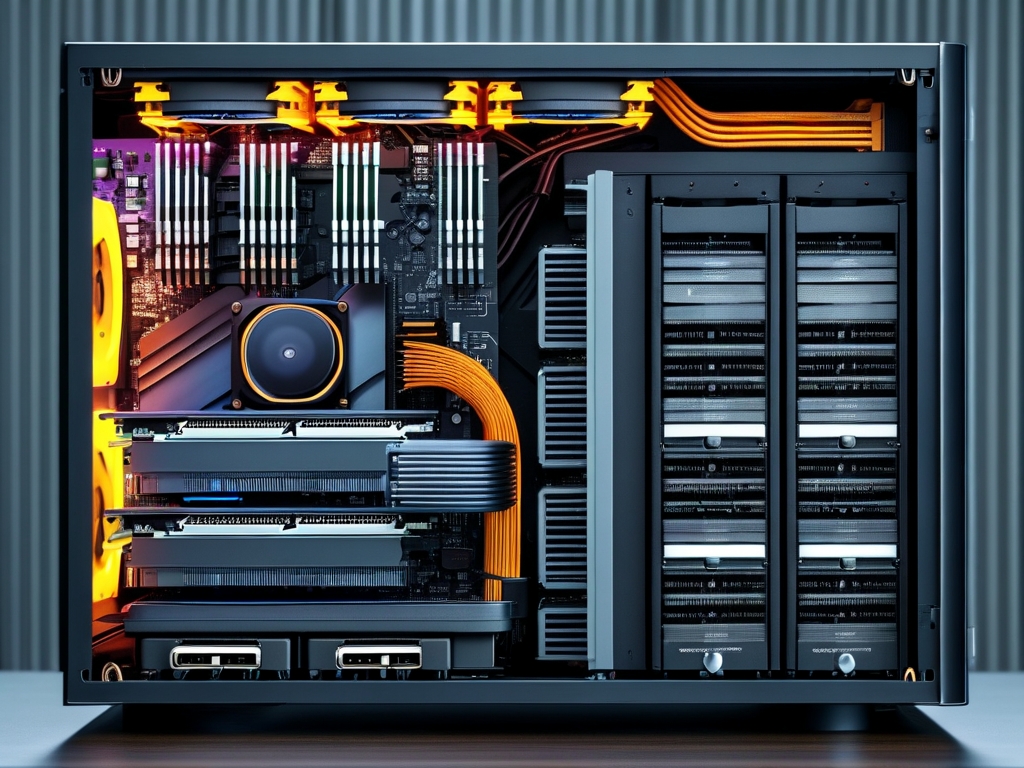

Random Access Memory (RAM)

RAM is the most well-known component of main memory. It temporarily stores data and instructions that the CPU needs while performing tasks. Unlike permanent storage devices, RAM is volatile, meaning it loses its contents when power is disconnected. There are two primary types:

- Dynamic RAM (DRAM): Widely used in personal computers, DRAM stores each bit as charge in a tiny capacitor that slowly leaks, so every cell must be refreshed periodically to retain its data. Its high density and lower cost per bit make it suitable for bulk memory needs.

- Static RAM (SRAM): Faster than DRAM, SRAM holds each bit in a latch (typically six transistors) and therefore needs no refreshing. However, that larger cell makes SRAM less dense and more expensive per bit, which limits its use to specialized applications like CPU cache.
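The DRAM refresh requirement can be made concrete with a short calculation. DDR4 devices are commonly specified with a 64 ms refresh window at normal operating temperature, during which the memory controller issues 8192 refresh commands; the Python sketch below derives the average interval between those commands. Treat both figures as assumed typical datasheet values, not guarantees for any particular module.

```python
def average_refresh_interval_us(refresh_window_ms: float, refresh_commands: int) -> float:
    """Return the average gap between refresh commands, in microseconds.

    Every DRAM row must be refreshed once per refresh window. Spreading the
    required refresh commands evenly across that window gives the interval
    at which the memory controller issues them.
    """
    window_us = refresh_window_ms * 1000.0  # ms -> us
    return window_us / refresh_commands


# Assumed typical DDR4 values at normal temperature: a 64 ms refresh window
# covered by 8192 refresh commands. Check the datasheet for a real part.
interval = average_refresh_interval_us(64, 8192)
print(f"{interval:.4f} us")  # 7.8125 us
```

At elevated temperatures DDR4 parts are typically refreshed twice as often, which this function models by passing a 32 ms window.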

Modern systems often use DDR4 or DDR5 RAM; each generation raises bandwidth and improves energy efficiency over its predecessor. For example, a gaming PC might employ 32GB of DDR5 RAM so that resource-intensive applications do not run short of memory.
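The bandwidth difference between generations follows from simple arithmetic: peak bandwidth is the transfer rate multiplied by the bytes moved per transfer. The sketch below assumes a 64-bit data path per module (DDR5 splits it into two 32-bit subchannels) and ignores ECC bits; the speed grades DDR4-3200 and DDR5-4800 are used only as illustrative inputs.

```python
def peak_bandwidth_gb_s(mega_transfers: int, bus_width_bits: int = 64, channels: int = 1) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes).

    mega_transfers is the speed grade in MT/s (e.g. 4800 for DDR5-4800).
    Each transfer moves bus_width_bits / 8 bytes per 64-bit channel. Real
    throughput is lower because of refresh, bank conflicts and command overhead.
    """
    bytes_per_transfer = bus_width_bits // 8
    # MT/s * bytes per transfer = MB/s; divide by 1000 for GB/s
    return mega_transfers * bytes_per_transfer * channels / 1000


for name, speed in [("DDR4-3200", 3200), ("DDR5-4800", 4800)]:
    print(f"{name}: {peak_bandwidth_gb_s(speed):.1f} GB/s per module, "
          f"{peak_bandwidth_gb_s(speed, channels=2):.1f} GB/s with two channels")
```

This prints 25.6 and 51.2 GB/s for DDR4-3200 and 38.4 and 76.8 GB/s for DDR5-4800. These are ceilings; measured bandwidth is always somewhat lower.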

Read-Only Memory (ROM)

ROM is non-volatile memory that retains data even without power. It stores firmware, the prewritten instructions needed to boot a device. Variations include:

- PROM (Programmable ROM): Can be programmed once using a device programmer; after that, its contents are fixed.

- EPROM (Erasable PROM): Can be erased via ultraviolet light and reprogrammed.

- EEPROM (Electrically Erasable PROM): Can be erased and rewritten electrically without removing the chip. Flash memory, a variant that erases in blocks, is what BIOS/UEFI updates typically rewrite.

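A practical example is a computer's BIOS or UEFI firmware chip, which on modern systems is usually flash memory, the block-erasable descendant of EEPROM. Unlike RAM, these chips are not designed for frequent writes: each cell tolerates a limited number of erase cycles, and keeping firmware largely unchanged helps the system boot reliably.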
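Part of why flash-based firmware chips are not treated like RAM is their asymmetric write rule: erasing sets every bit in a block to 1, and programming can only clear bits from 1 to 0. The toy Python model below illustrates that rule; `FlashBlock`, its methods and the 16-byte block size are hypothetical and do not correspond to any real chip's interface.

```python
class FlashBlock:
    """Toy model of one flash erase block (hypothetical API, not a real driver).

    Erasing sets every byte to 0xFF (all bits 1). Programming can only clear
    bits (1 -> 0); turning a 0 bit back into 1 requires erasing the whole block.
    """

    def __init__(self, size: int = 16):
        self.data = bytearray([0xFF] * size)
        self.erase_count = 0  # real cells survive only a limited number of erases

    def erase(self) -> None:
        self.data[:] = b"\xff" * len(self.data)
        self.erase_count += 1

    def program(self, offset: int, value: int) -> None:
        current = self.data[offset]
        # Reject the write if it would need any bit currently 0 to become 1.
        if value & ~current & 0xFF:
            raise ValueError("cannot set bits to 1 without erasing the block")
        self.data[offset] = value


block = FlashBlock()
block.program(0, 0x5A)      # 0xFF -> 0x5A only clears bits: allowed
try:
    block.program(0, 0xA5)  # would need 0 bits set back to 1: rejected
except ValueError as err:
    print(err)
block.erase()               # whole block returns to 0xFF
block.program(0, 0xA5)      # now allowed
print(hex(block.data[0]), block.erase_count)  # 0xa5 1
```

Changing even one byte in place may therefore cost a full block erase, which is why firmware is rewritten rarely and deliberately.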

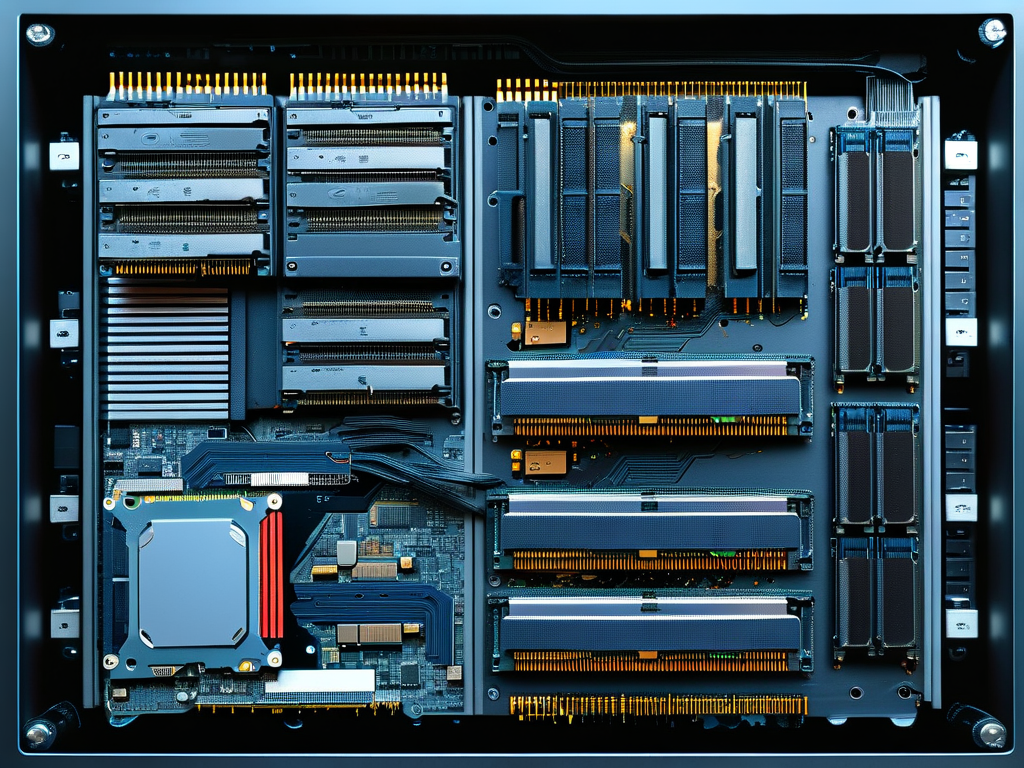

Cache Memory

Positioned between the CPU and RAM, cache memory acts as a high-speed buffer. It reduces latency by keeping copies of recently and frequently used data close to the processor. Most modern desktop and server CPUs have three cache levels:

- L1: Smallest and fastest, built directly into the processor core.

- L2: Larger than L1 but slightly slower; usually private to each core, though some designs share it among a small cluster of cores.

- L3: The largest and slowest cache level, typically shared by all cores (or a group of cores) so they can exchange data without going out to RAM.

For instance, Intel’s Core i9 processors feature up to 36MB of L3 cache, accelerating complex computations in video editing software.
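Why caches help, and why they stop helping when a program's working set outgrows them, can be shown with a small simulation. Real CPU caches are set-associative and use hardware-specific replacement policies; this sketch assumes a fully associative cache with least-recently-used (LRU) replacement and 64-byte lines (a common line size on x86 CPUs), purely for illustration.

```python
from collections import OrderedDict


def simulate_lru_cache(addresses, num_lines: int, line_size: int = 64):
    """Count (hits, misses) for a fully associative LRU cache.

    Each byte address maps to a cache line (address // line_size). On a miss
    the line is loaded, evicting the least recently used line if the cache is full.
    """
    cache = OrderedDict()
    hits = misses = 0
    for addr in addresses:
        line = addr // line_size
        if line in cache:
            hits += 1
            cache.move_to_end(line)  # mark as most recently used
        else:
            misses += 1
            if len(cache) >= num_lines:
                cache.popitem(last=False)  # evict least recently used line
            cache[line] = True
    return hits, misses


# A loop that touches 16 distinct cache lines, repeated 10 times.
loop = [i * 64 for i in range(16)] * 10
print(simulate_lru_cache(loop, num_lines=32))  # (144, 16): working set fits
print(simulate_lru_cache(loop, num_lines=8))   # (0, 160): every access misses
```

When the working set fits, only the first pass misses; when it is even slightly too large, LRU evicts each line just before it is reused. Larger caches such as a big shared L3 push that cliff further out.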

Virtual Memory

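Virtual memory is not physical hardware but an operating-system technique: each program sees its own address space, which the OS and the CPU's memory management unit (MMU) map onto physical RAM one page at a time. It extends effective RAM capacity by using disk space as temporary storage: when RAM runs short, the OS moves less-used pages to disk (a paging file on Windows, swap space on Linux) and reloads them on demand. This mechanism allows systems with limited RAM to run larger applications, but at a cost, because disk access is orders of magnitude slower than RAM. A user editing 4K video might notice lag if the system relies heavily on virtual memory due to insufficient physical RAM.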
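The paging process can be sketched as address translation plus a page-fault counter. This toy model assumes 4 KiB pages (a common default) and evicts the least recently used page when "RAM" is full; real operating systems use more elaborate replacement policies, and `ToyMMU` is a hypothetical name, not an OS interface.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # 4 KiB pages (assumed)


class ToyMMU:
    """Toy virtual-memory model: translates virtual addresses, counts page faults.

    Only num_frames pages fit in "RAM". A fault with RAM full evicts the least
    recently used page, which a real OS would write out to disk if modified.
    """

    def __init__(self, num_frames: int):
        self.page_table = OrderedDict()  # virtual page number -> physical frame
        self.free_frames = list(range(num_frames))
        self.page_faults = 0

    def translate(self, vaddr: int) -> int:
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn in self.page_table:
            self.page_table.move_to_end(vpn)  # recently used
        else:
            self.page_faults += 1  # page not resident: would be read from disk
            if self.free_frames:
                frame = self.free_frames.pop()
            else:
                _, frame = self.page_table.popitem(last=False)  # evict LRU page
            self.page_table[vpn] = frame
        return self.page_table[vpn] * PAGE_SIZE + offset


mmu = ToyMMU(num_frames=2)
for addr in [0x0000, 0x1004, 0x0008, 0x2000, 0x1000]:
    mmu.translate(addr)
print(mmu.page_faults)  # 4
```

With only two frames, touching a third page forces an eviction, and returning to the evicted page faults again. Each fault stands in for a slow disk read, which is the source of the lag described above.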

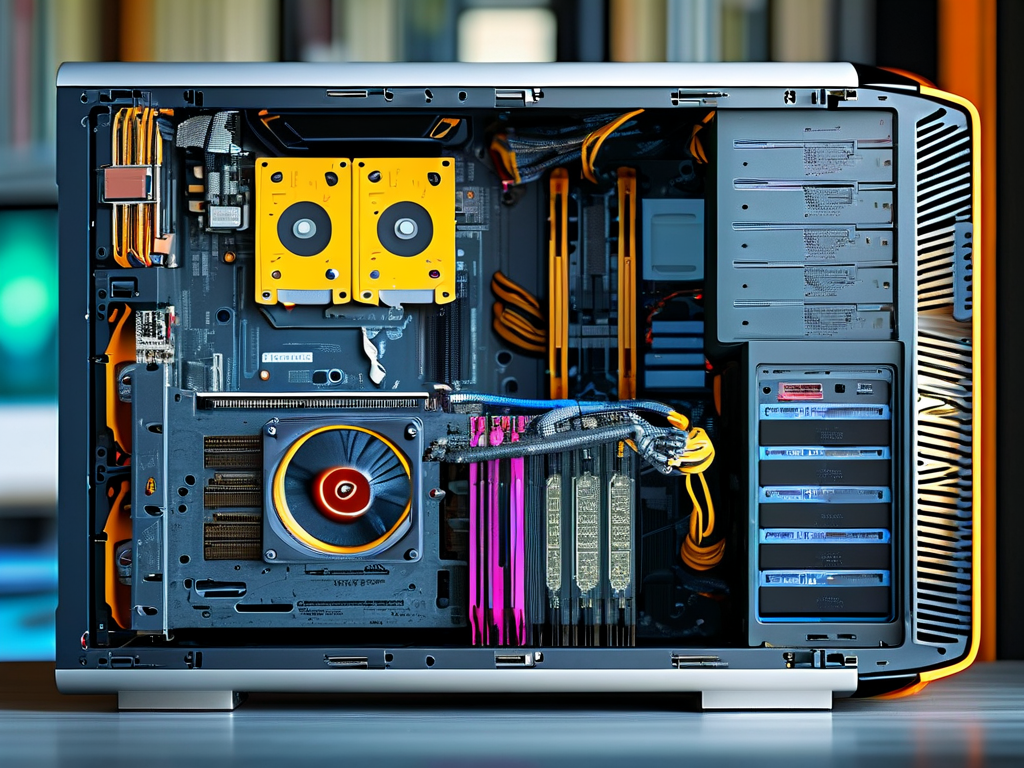

Memory Hierarchy and Performance

A computer's efficiency depends on the memory hierarchy, a tiered structure that trades off speed, cost, and capacity. At the top, cache and RAM provide fast access to a relatively small amount of data, while secondary storage (e.g., SSDs) offers larger, cheaper, persistent capacity at much higher latency. To get the most from this hierarchy, developers write software that reuses data while it is still in cache, for example by traversing arrays sequentially. Overclocking RAM modules is another tactic enthusiasts use to boost speed, though it risks system instability if not done carefully.
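A standard way to quantify the hierarchy is average memory access time (AMAT): hit time plus miss rate times the cost of going to the next level, applied recursively down to RAM. The latencies and miss rates below are hypothetical round numbers chosen for illustration, not measurements of any real CPU.

```python
def amat(levels, memory_latency_ns: float) -> float:
    """Average memory access time for a chain of caches, in ns.

    levels is a list of (hit_time_ns, miss_rate) pairs ordered from L1 outward.
    Works from RAM inward: AMAT(level) = hit_time + miss_rate * AMAT(next level).
    """
    time = memory_latency_ns
    for hit_time, miss_rate in reversed(levels):
        time = hit_time + miss_rate * time
    return time


# Hypothetical figures: L1 1 ns, L2 4 ns, L3 12 ns, RAM 80 ns.
baseline = amat([(1, 0.10), (4, 0.20), (12, 0.30)], 80)
better_l1 = amat([(1, 0.05), (4, 0.20), (12, 0.30)], 80)
print(f"{baseline:.2f} ns, {better_l1:.2f} ns")  # 2.12 ns, 1.56 ns
```

Even with these made-up numbers, halving the L1 miss rate cuts the average access time by roughly a quarter, which is why cache-friendly access patterns matter more than raw RAM latency for many workloads.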

Emerging Technologies

Advancements like 3D XPoint (used in Intel Optane, which Intel has since discontinued) blurred the line between RAM and storage by offering non-volatile memory much closer to DRAM speeds than conventional flash storage. Meanwhile, LPDDR5 targets mobile devices with improved power efficiency. Researchers also continue to develop MRAM (Magnetoresistive RAM), which combines speed with non-volatility and could reshape future memory architectures.

In summary, computer main memory comprises RAM, ROM, cache, and virtual memory systems. Each component plays a distinct role in data handling, from instantaneous processing to long-term firmware storage. As technology evolves, these elements continue to adapt, driving innovations in computing power and efficiency.