In recent years, CFT neural networks (Contextual Feature Transformation Networks) have emerged as an approach in artificial intelligence that targets a limitation of conventional deep learning frameworks: feature extraction that stays the same regardless of context. Where conventional models apply the same feature transformations to every input, CFT networks modulate those transformations based on contextual inputs. This adaptability suits them to scenarios that require real-time decision-making, such as autonomous systems and personalized recommendation engines.

Core Principles of CFT Architecture

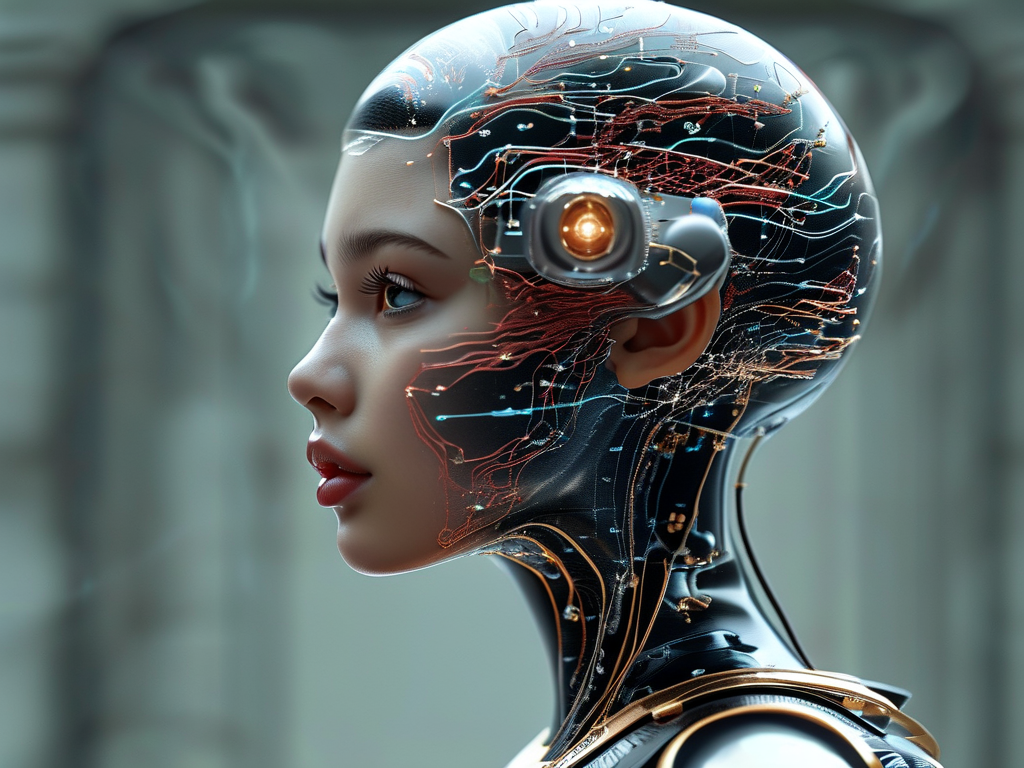

At the heart of CFT networks lies a dual-path processing mechanism. The first path performs feature extraction, much like standard convolutional or recurrent layers. The second path analyzes contextual metadata (such as timestamps, user behavior patterns, or environmental variables) and uses it to modulate the feature transformation. For example, a CFT model deployed in healthcare might adjust its diagnostic predictions based on a patient’s medical history alongside real-time sensor data.

A simplified code snippet illustrates this concept:

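    import tensorflow as tf


    class CFTLayer(tf.keras.layers.Layer):
        """Scales transformed features element-wise by a context-derived signal."""

        def __init__(self, units, **kwargs):
            super().__init__(**kwargs)
            # Path 1: transforms the raw input features.
            self.feature_layer = tf.keras.layers.Dense(units)
            # Path 2: maps contextual metadata to one weight per output unit.
            self.context_layer = tf.keras.layers.Dense(units)

        def call(self, inputs):
            # `inputs` is a (features, context) pair with matching batch size.
            features, context = inputs
            transformed_features = self.feature_layer(features)
            context_weights = self.context_layer(context)
            # Element-wise product: context modulates each transformed feature.
            return transformed_features * context_weights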

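Because the context path's output multiplies the transformed features element-wise, the network can emphasize or suppress individual features based on situational cues. This is intended to improve accuracy, and the explicit context weights give a direct way to inspect which features a given context amplifies.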
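To make the element-wise modulation concrete, here is a minimal, dependency-free sketch of the same forward pass with hand-picked numbers. The `dense` and `cft_forward` helpers, the identity weight matrices, and the two example contexts are illustrative assumptions rather than trained parameters, and biases are omitted for brevity.

```python
# Illustrative sketch: plain-Python version of the dual-path forward pass.
# All weights and inputs below are hand-picked assumptions, not trained values.

def dense(weights, vector):
    """Apply a bias-free linear layer: one output per row of `weights`."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def cft_forward(features, context, feature_weights, context_weights):
    """Multiply transformed features element-wise by the context path output."""
    transformed = dense(feature_weights, features)
    modulation = dense(context_weights, context)
    return [t * m for t, m in zip(transformed, modulation)]


# Identity matrices keep the arithmetic easy to follow by hand.
FEATURE_WEIGHTS = [[1.0, 0.0], [0.0, 1.0]]
CONTEXT_WEIGHTS = [[1.0, 0.0], [0.0, 1.0]]

features = [2.0, 3.0]
output_a = cft_forward(features, [1.0, 0.5], FEATURE_WEIGHTS, CONTEXT_WEIGHTS)
output_b = cft_forward(features, [0.5, 1.0], FEATURE_WEIGHTS, CONTEXT_WEIGHTS)

print(output_a)  # [2.0, 1.5]
print(output_b)  # [1.0, 3.0]
```

The same features yield different outputs under the two contexts: the first context damps the second feature, while the second context damps the first.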

Applications Across Industries

CFT networks are particularly effective in domains where context drives outcomes. In autonomous vehicles, for instance, these networks process visual data while accounting for weather conditions or traffic density. A vehicle might prioritize pedestrian detection in rainy weather over lane markings, reducing accident risks. Similarly, in financial forecasting, CFT models integrate market trends with geopolitical events to predict stock movements more reliably than time-series analysis alone.
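The rainy-weather example can be sketched as a context-dependent reweighting of perception tasks. The version below adds a per-context bias to each task score and normalizes with a softmax; the task names, base scores, and bias values are hypothetical and chosen only to illustrate the idea, not taken from any real driving stack.

```python
import math

# Hypothetical base scores for two perception tasks (assumed values).
BASE_SCORES = {"pedestrian_detection": 1.0, "lane_markings": 1.0}

# Hypothetical per-context adjustments; a trained model would learn these.
CONTEXT_BIAS = {
    "clear": {"pedestrian_detection": 0.0, "lane_markings": 0.5},
    "rain": {"pedestrian_detection": 1.0, "lane_markings": -0.5},
}


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def task_priorities(weather):
    """Return a priority weight per task for the given weather context."""
    tasks = list(BASE_SCORES)
    scores = [BASE_SCORES[t] + CONTEXT_BIAS[weather][t] for t in tasks]
    return dict(zip(tasks, softmax(scores)))


rain = task_priorities("rain")
clear = task_priorities("clear")
print(rain)
print(clear)
```

With these assumed biases, pedestrian detection receives the larger weight in rain and lane markings receive the larger weight in clear conditions.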

Another notable application is natural language processing (NLP). Standard transformers already use self-attention to condition each token on its surrounding text, and CFT-enhanced models go further by weighing linguistic context differently based on user intent. For example, the word "bank" in "river bank" versus "investment bank" triggers distinct feature transformations, improving semantic accuracy.
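The "bank" example can be illustrated with a toy sketch in which a context word supplies a gate that rescales an ambiguous word vector before it is matched against sense prototypes. The two-dimensional vectors, the gate values, and the sense labels are all made up for illustration; real models use learned, high-dimensional embeddings.

```python
import math

# Toy 2-d space (assumed): dimension 0 ~ geography, dimension 1 ~ finance.
BANK = [1.0, 1.0]  # ambiguous: equal weight on both senses

# Hypothetical gates produced by the context word.
CONTEXT_GATES = {"river": [1.0, 0.1], "investment": [0.1, 1.0]}

# Hypothetical prototype vectors for the two senses.
SENSES = {"riverside": [1.0, 0.0], "financial_institution": [0.0, 1.0]}


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def disambiguate(word_vector, context_word):
    """Gate the word vector by context, then pick the closest sense."""
    gate = CONTEXT_GATES[context_word]
    modulated = [w * g for w, g in zip(word_vector, gate)]
    return max(SENSES, key=lambda sense: cosine(modulated, SENSES[sense]))


print(disambiguate(BANK, "river"))       # riverside
print(disambiguate(BANK, "investment"))  # financial_institution
```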

Challenges and Future Directions

Despite their promise, CFT networks face hurdles. Training requires large, context-labeled datasets, which are costly to curate. Additionally, the computational overhead of dual-path processing can hinder deployment on edge devices. Researchers are exploring techniques like context-aware pruning to streamline operations without sacrificing performance.
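The text does not define context-aware pruning, so the following is only one plausible reading: drop units whose context weights stay close to zero across the contexts observed in practice, since those units contribute little to the modulated output. The `GATE_SAMPLES` values, the threshold, and both helper functions are hypothetical.

```python
def mean_gate_magnitude(gate_samples):
    """Average absolute gate value per unit across observed contexts."""
    count = len(gate_samples)
    units = len(gate_samples[0])
    return [
        sum(abs(sample[u]) for sample in gate_samples) / count
        for u in range(units)
    ]


def units_to_keep(gate_samples, threshold):
    """Return indices of units whose mean gate magnitude reaches the threshold."""
    magnitudes = mean_gate_magnitude(gate_samples)
    return [u for u, m in enumerate(magnitudes) if m >= threshold]


# Hypothetical context-path outputs: rows are contexts, columns are units.
GATE_SAMPLES = [
    [0.9, 0.02, 0.5, 0.0],
    [0.8, 0.01, 0.7, 0.1],
    [1.0, 0.03, 0.6, 0.05],
]

kept = units_to_keep(GATE_SAMPLES, threshold=0.1)
print(kept)  # [0, 2]
```

Units 1 and 3 are rarely activated by any observed context, so under this assumed criterion they are candidates for removal before deployment on a constrained device.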

Looking ahead, hybrid architectures that combine CFT principles with quantum computing or neuromorphic engineering could reshape adaptive learning. Early experiments reportedly show quantum-enhanced CFT models solving optimization problems 40% faster than classical counterparts, which hints at their potential.

CFT neural networks aim to bridge the gap between static data analysis and dynamic real-world environments. By embedding contextual intelligence into their core operations, these models open new possibilities across industries, from precision medicine to sustainable energy management. As algorithmic advances and hardware innovations converge, CFT-based systems may become a cornerstone of next-generation AI solutions.