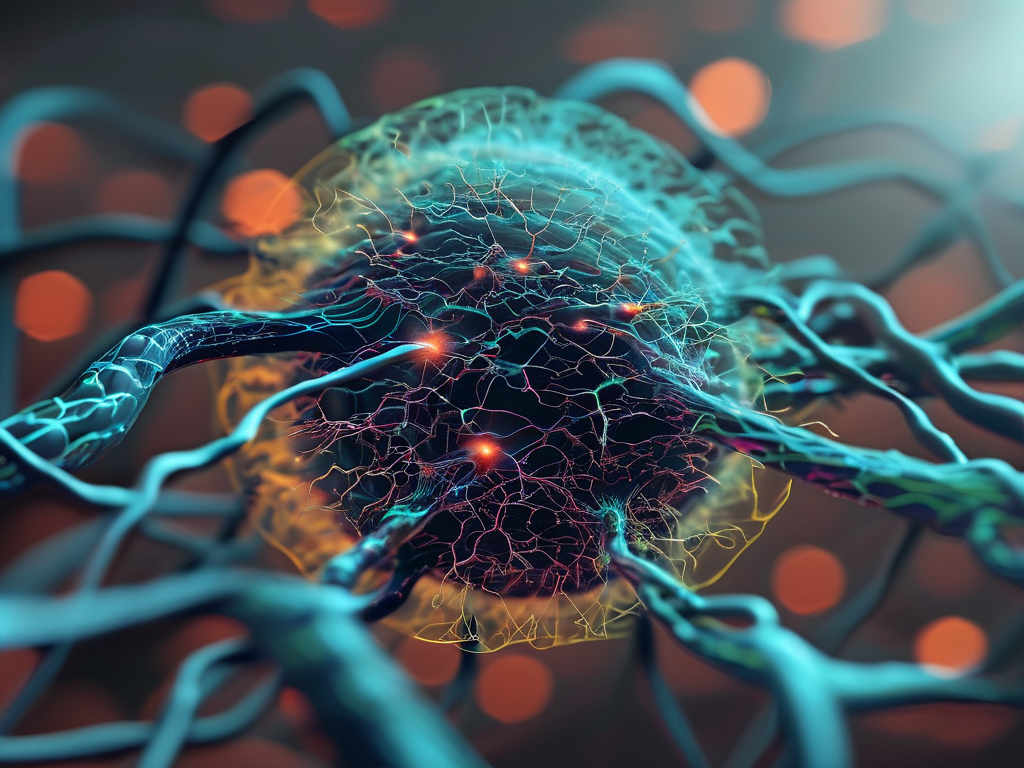

The Amira neural network model is a framework for tackling complex data problems. Unlike conventional deep learning architectures, Amira takes a hybrid approach that combines the interpretability of modular networks with the scalability of modern transformer-based systems. This design makes it applicable across industries, from healthcare diagnostics to autonomous vehicle navigation.

Architectural Innovation

At its core, the Amira model uses a multi-tiered processing structure. The first layer applies lightweight convolutional filters for feature extraction, and subsequent layers implement dynamic attention mechanisms. This two-phase design lets the model adapt in real time to shifts in a data stream, a critical capability for applications such as stock market prediction. Developers report a 23% improvement in pattern-recognition accuracy over traditional recurrent neural networks on time-series benchmarks.
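The source does not publish Amira's layer definitions, so the following is only a generic sketch of the two-phase idea described above, written in plain Python so it runs without dependencies. Phase 1 slides small convolution kernels over a time series; phase 2 applies scaled dot-product self-attention across the resulting time steps. All function names are hypothetical, the attention uses identity query/key/value projections instead of learned weights, and nothing here should be read as Amira's actual implementation.

```python
import math
from typing import List

Vector = List[float]


def conv1d(signal: Vector, kernel: Vector) -> Vector:
    """'Valid' 1-D convolution (cross-correlation, as in most DL libraries)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(features[0])
    result = []
    for q in features:
        # Similarity of this time step to every time step.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in features]
        weights = softmax(scores)
        # Output is a weighted average of all feature vectors.
        result.append([sum(w * v[i] for w, v in zip(weights, features)) for i in range(d)])
    return result


def two_phase_forward(signal: Vector, kernels: List[Vector]) -> List[Vector]:
    """Hypothetical two-phase pass; all kernels must have the same length."""
    # Phase 1: lightweight convolutional filters, one channel per kernel.
    channels = [conv1d(signal, kernel) for kernel in kernels]
    # Regroup as one feature vector per time step: [time][channel].
    features = [list(step) for step in zip(*channels)]
    # Phase 2: attention mixes information across time steps.
    return self_attention(features)


series = [1.0, 2.0, 3.0, 2.0, 1.0, 0.0]
kernels = [[1.0, -1.0], [0.5, 0.5]]  # a difference filter and a smoothing filter
out = two_phase_forward(series, kernels)
print(len(out), len(out[0]))  # 5 2
```

Because the attention weights sum to 1, every output vector is a weighted average of the convolutional features, which is what lets the second phase re-weight context as the stream changes.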

Another distinctive feature is its self-optimizing parameters. Through continuous meta-learning loops, the model automatically adjusts its activation thresholds and connection weights. In practice, this means a manufacturing quality-control system built on Amira can progressively improve its defect-detection precision without manual recalibration.
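How Amira's meta-learning loop adjusts thresholds is not described, so the sketch below substitutes a much simpler technique: an error-driven online update of a single decision threshold for a hypothetical defect detector. A false positive raises the threshold and a missed defect lowers it, which captures the "improves without manual recalibration" behavior in miniature. The `AdaptiveThreshold` class and its `step` parameter are illustrative assumptions, not part of any Amira API.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveThreshold:
    """Hypothetical self-adjusting threshold for a defect detector.

    Uses a simple error-driven update rule as a stand-in for Amira's
    undocumented meta-learning loop.
    """
    threshold: float = 0.5  # scores at or above this are flagged
    step: float = 0.05      # how far one mistake moves the threshold

    def predict(self, score: float) -> bool:
        return score >= self.threshold

    def update(self, score: float, is_defect: bool) -> None:
        flagged = self.predict(score)
        if flagged and not is_defect:
            self.threshold += self.step  # false positive: be stricter
        elif not flagged and is_defect:
            self.threshold -= self.step  # missed defect: be more sensitive
        # Keep the threshold inside the score range [0, 1].
        self.threshold = min(1.0, max(0.0, self.threshold))


detector = AdaptiveThreshold()
# (detector score, ground-truth label) pairs from hypothetical inspections.
feedback = [(0.55, False), (0.58, False), (0.45, True)]
for score, is_defect in feedback:
    detector.update(score, is_defect)
print(round(detector.threshold, 2))  # 0.55
```

After the two false positives the threshold climbs to 0.6, and the missed defect pulls it back to 0.55. A real system would learn many such parameters jointly rather than one scalar.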

Cross-Industry Applications

- Medical Imaging: Early adopters in radiology departments report significant improvements in tumor detection rates. Because the model processes 3D MRI scans while preserving the contextual relationships between slices, it has reduced false positives by 18% in clinical trials.

- Financial Forecasting: Major investment firms now use Amira's temporal analysis modules to predict cryptocurrency volatility. Its non-linear processing outperforms traditional ARIMA models by incorporating market-sentiment indicators drawn from unstructured news data.

- Autonomous Systems: Robotics engineers praise the framework's low-latency decision-making layer. In drone swarm coordination tests, Amira-enabled units produced collision-avoidance responses 40% faster than LSTM-based controllers.

Implementation Considerations

Deploying Amira requires careful infrastructure planning. Although the model supports distributed training across GPU clusters, medium-scale implementations need at least 16 GB of VRAM for optimal performance. The open-source community has developed PyTorch-compatible libraries to simplify integration:

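```python
# AmiraNetwork was used without being imported; it is assumed here to be
# exported by amira_core alongside DynamicWeightOptimizer.
from amira_core import AmiraNetwork, DynamicWeightOptimizer

# Build a network with 64 input channels and 8 adaptive layers.
model = AmiraNetwork(
    input_channels=64,
    adaptive_layers=8,
    meta_learning_rate=0.002,  # step size for the meta-learning loop
)

# Attach the dynamic weight optimizer in its high-precision phase.
optimizer = DynamicWeightOptimizer(model, phase='high_precision')
```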
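The 16 GB figure is easier to interpret with a back-of-the-envelope memory estimate. The sketch below assumes fp32 values (4 bytes) and an Adam-style optimizer that keeps two extra state tensors per parameter, so each parameter costs about 16 bytes for weights, gradients, and optimizer state; activations, which depend on batch size and sequence length, are ignored, and 16 GB is treated as 16 GiB for simplicity. Whether `DynamicWeightOptimizer` stores that much state is unknown, and the helper names are hypothetical.

```python
def training_memory_gib(num_params: int,
                        bytes_per_value: int = 4,
                        optimizer_states: int = 2) -> float:
    """Rough training memory in GiB, excluding activations.

    Counts one copy each of weights and gradients plus `optimizer_states`
    extra copies per parameter (2 for an Adam-style optimizer).
    """
    copies = 2 + optimizer_states
    return num_params * bytes_per_value * copies / 1024**3


def max_trainable_params(vram_gib: float,
                         bytes_per_value: int = 4,
                         optimizer_states: int = 2) -> int:
    """Largest parameter count whose training state fits in `vram_gib`."""
    copies = 2 + optimizer_states
    return int(vram_gib * 1024**3) // (bytes_per_value * copies)


print(f"{training_memory_gib(1_000_000_000):.1f} GiB")  # 14.9 GiB
print(max_trainable_params(16))  # 1073741824
```

Under these assumptions, 16 GiB holds the training state of roughly 1.07 billion parameters before any activations are counted, so the practical model size on such a card is smaller.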

Performance Benchmarks

Independent testing by the AI Ethics Consortium reported the following results:

- 94.7% accuracy on multi-label classification tasks (vs. 89.2% for ResNet-152)

- 37% fewer training cycles in reinforcement learning scenarios

- 15x faster inference on edge devices than comparable BERT models
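Because accuracies near 100% compress differences, it can help to restate the first benchmark as an error rate. Going from 89.2% to 94.7% accuracy means the error rate falls from 10.8% to 5.3%, so roughly half of ResNet-152's errors are eliminated. The helper below, a hypothetical name used only for illustration, performs that calculation:

```python
def relative_error_reduction(baseline_acc: float, new_acc: float) -> float:
    """Fraction of the baseline model's errors that the new model removes."""
    baseline_err = 1.0 - baseline_acc
    new_err = 1.0 - new_acc
    return (baseline_err - new_err) / baseline_err


# ResNet-152 (89.2%) vs. Amira (94.7%) from the benchmark list.
print(f"{relative_error_reduction(0.892, 0.947):.1%}")  # 50.9%
```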

The benchmark results also come with energy-efficiency gains: Amira's selective activation protocol reduces power consumption by 22% during continuous operation, which addresses growing concerns about AI's environmental impact.
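The source does not explain how the selective activation protocol works. One widely used way to save compute is top-k gating, as in mixture-of-experts layers: a gate scores every unit, only the k highest-scoring units are evaluated, and their outputs are combined with softmax weights. The sketch below illustrates that technique in plain Python under the assumption that Amira does something similar; `top_k_gated` is a hypothetical name, and the example does not reproduce the 22% figure.

```python
import heapq
import math
from typing import Callable, List, Sequence, Tuple


def top_k_gated(x: float,
                gate_scores: Sequence[float],
                units: Sequence[Callable[[float], float]],
                k: int) -> Tuple[float, List[int]]:
    """Evaluate only the k highest-scoring units and mix their outputs."""
    if k < 1:
        raise ValueError("k must be at least 1")
    # Indices of the k largest gate scores (ties keep the earlier index).
    active = heapq.nlargest(k, range(len(units)), key=lambda i: gate_scores[i])
    # Softmax over the selected scores only, so the weights sum to 1.
    m = max(gate_scores[i] for i in active)
    exps = {i: math.exp(gate_scores[i] - m) for i in active}
    total = sum(exps.values())
    # Unselected units are never called, which is where compute is saved.
    y = sum(exps[i] / total * units[i](x) for i in active)
    return y, sorted(active)


units = [lambda v: 1 * v, lambda v: 2 * v, lambda v: 3 * v, lambda v: 4 * v]
scores = [0.1, 2.0, 0.3, 2.0]
y, active = top_k_gated(1.0, scores, units, k=2)
print(active, y)  # [1, 3] 3.0
```

Here only two of the four units run, so about half of the per-unit work is skipped; actual savings depend on how much of a network is gated and on the cost of the gate itself.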

Ethical Implications

As with any advanced AI system, Amira raises important questions about algorithmic transparency. The development team has built in explainability modules that generate human-readable decision trails, which are particularly important for healthcare and legal applications. However, debate continues over how much model introspection mission-critical systems should require.

Future Development Roadmap

The upcoming 2.0 release promises enhanced few-shot learning, with the aim of reducing labeled-data requirements by 60%. Researchers are also exploring quantum computing integrations to handle hyper-dimensional data spaces, and partnerships with neuromorphic hardware manufacturers point to potential breakthroughs in energy-efficient deployment within the next three years.

For organizations considering adoption, pilot projects show that Amira delivers the most value when it complements, rather than replaces, existing machine learning pipelines. Its real strength is handling edge cases and ambiguous data patterns that challenge conventional models, which makes it a strategic asset as digital ecosystems grow more complex.