When three robotic arms flawlessly performed Beethoven's "Ode to Joy" at Berlin's Techphonia Hall last month, it marked a new frontier in automated music performance. This article explores the hidden machinery behind modern robotic bands, revealing how engineering innovations are redefining musical expression.

At the core of any robotic band lies a synchronization framework. Unlike human ensembles, which rely on auditory cues and eye contact, mechanical musicians depend on precise time-coding. Most systems use modified MIDI protocols whose timestamps are far finer than standard MIDI allows (a single note message takes roughly a millisecond to transmit over a classic MIDI cable), synchronizing servo motors and pneumatic actuators across multiple instruments. The Yamaha Disklavier integration in piano-playing robots, for instance, employs hybrid control that combines PWM (pulse-width modulation) with torque control for dynamic key strikes.
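The time-coding idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any vendor's protocol: the names `NoteEvent`, `ticks_to_seconds`, and `build_schedule` are hypothetical, the tempo is constant, and the per-instrument latencies are assumed to have been measured beforehand. The only standard piece is the MIDI tick conversion (ticks times microseconds per beat, divided by ticks per beat).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NoteEvent:
    tick: int        # position in MIDI ticks from the start of the piece
    instrument: str  # hypothetical instrument label, e.g. "piano"
    note: int        # MIDI note number (60 = middle C)


def ticks_to_seconds(tick, tempo_us_per_beat=500_000, ppq=480):
    """Convert a MIDI tick position to seconds at a constant tempo."""
    return tick * tempo_us_per_beat / (ppq * 1_000_000)


def build_schedule(events, actuator_latency_s, tempo_us_per_beat=500_000, ppq=480):
    """Return (fire_time_s, event) pairs sorted by fire time.

    Each command is sent early by its actuator's latency so that the
    sound, not the command, lands on the notated time.
    """
    schedule = []
    for ev in events:
        sound_time = ticks_to_seconds(ev.tick, tempo_us_per_beat, ppq)
        fire_time = sound_time - actuator_latency_s.get(ev.instrument, 0.0)
        schedule.append((fire_time, ev))
    schedule.sort(key=lambda pair: pair[0])
    return schedule
```

Compensating for latency per instrument is what lets a slow pneumatic actuator and a fast servo sound together even though their commands leave the controller at different moments.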

Instrument-specific actuation presents unique technical hurdles. String instruments such as violins require bowing mechanisms with variable pressure control, achieved through force-sensitive piezoresistive arrays. The Tokyo-based Z-Machines project demonstrated this with its 78-fingered guitar robot, which uses a patented "string deflection matrix" to adjust pick angles in real time based on harmonic analysis.

Wind instrument automation poses different challenges. Robotic brass players use compressed-air systems with dynamically adjustable valves, a close relative of the pneumatic actuation that drives the guitar, bass, and drum robots of the band Compressorhead. A simplified implementation might look like this:

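    def adjust_valve(pressure, note_freq):
        """Map air pressure and note frequency to a servo angle in degrees."""
        # Weighted linear mix of the two inputs (illustrative gains)
        servo_angle = (pressure * 0.8) + (note_freq * 1.2)
        # Clamp to the servo's 0-180 degree travel
        return max(min(servo_angle, 180), 0)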

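This snippet is a simplified, open-loop illustration: it combines air pressure and note frequency in a weighted sum and clamps the result to the servo's 0 to 180 degree range. The proportional-integral control it stands in for goes further, feeding back the measured error and its accumulated history to balance air pressure against valve position.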
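For comparison, a proportional-integral loop proper keeps a running sum of the error between target and measured pressure. The following is a minimal sketch, not the control code of any actual robot: the class name `PIController`, the gains, and the pressure units are all assumptions chosen for illustration.

```python
class PIController:
    """Proportional-integral controller with a clamped output."""

    def __init__(self, kp, ki, out_min=0.0, out_max=180.0):
        self.kp = kp              # proportional gain (assumed value)
        self.ki = ki              # integral gain (assumed value)
        self.out_min = out_min    # lower servo limit in degrees
        self.out_max = out_max    # upper servo limit in degrees
        self.integral = 0.0       # accumulated error over time

    def update(self, setpoint, measured, dt):
        """Return a servo angle for one control step of length dt seconds."""
        error = setpoint - measured
        candidate_integral = self.integral + error * dt
        output = self.kp * error + self.ki * candidate_integral
        clamped = max(self.out_min, min(self.out_max, output))
        # Anti-windup: keep the new integral only while the output is unsaturated
        if output == clamped:
            self.integral = candidate_integral
        return clamped


# Usage: call once per control tick with the latest pressure reading.
controller = PIController(kp=2.0, ki=0.5)
angle = controller.update(setpoint=50.0, measured=40.0, dt=0.1)
```

The integral term is what removes steady-state error: if the valve settles slightly short of the target pressure, the accumulated error keeps nudging the servo until the gap closes.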

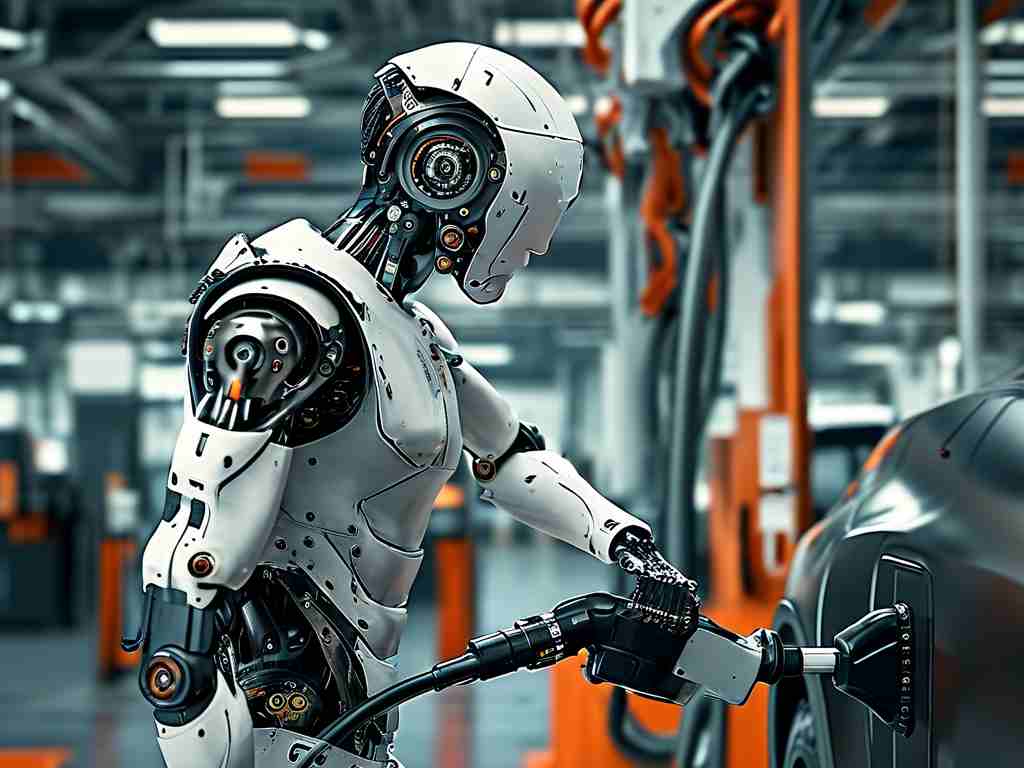

Percussion robots showcase perhaps the most visible engineering. The KUKA KR Agilus arms used in industrial automation have been adapted for drum kits, with modified end-effectors capable of handling sticks, mallets, and brushes. Their path-planning algorithms incorporate machine learning models trained on motion capture data from human drummers, enabling "swing" rhythms that machines have long struggled to play convincingly.
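The simplest form of swing is a fixed timing offset, which gives a useful baseline for what the learned models refine. The sketch below is a deliberate simplification and not the motion-capture approach described above: it assumes onsets are given in beats (quarter notes) and delays every off-beat eighth note to a fixed fraction of the beat. The function name `apply_swing` and its parameters are hypothetical.

```python
import math


def apply_swing(onsets_beats, swing_ratio=2 / 3, tol=1e-6):
    """Shift off-beat eighth notes from the midpoint of the beat to swing_ratio.

    swing_ratio=0.5 leaves the rhythm straight; 2/3 gives triplet swing.
    """
    swung = []
    for t in onsets_beats:
        beat = math.floor(t)
        frac = t - beat
        if abs(frac - 0.5) < tol:
            # Off-beat eighth: move it later within the same beat
            swung.append(beat + swing_ratio)
        else:
            swung.append(t)
    return swung
```

Human drummers vary this ratio with tempo and from note to note, which is the nuance a model trained on real performances can capture and a single constant cannot.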

Sensor fusion forms the nervous system of robotic bands. Multimodal systems combine MEMS accelerometers for vibration detection, optical sensors for positional feedback, and capacitive touch sensors for instrument interaction. The breakthrough came with cross-modal attention networks that process these inputs similarly to human peripheral nervous systems, allowing real-time error correction during performances.
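To make the fusion step concrete, here is a minimal sketch using inverse-variance weighting, a classical technique swapped in for illustration in place of the attention networks mentioned above. It assumes each sensor reports an estimate of the same quantity (say, mallet position in millimeters) together with a known noise variance. The function name `fuse_estimates` and the example figures are hypothetical.

```python
def fuse_estimates(readings):
    """Fuse (value, variance) pairs into one estimate.

    Each reading is weighted by 1 / variance, so noisier sensors count less.
    Returns (fused_value, fused_variance).
    """
    if not readings:
        raise ValueError("at least one reading is required")
    if any(variance <= 0 for _, variance in readings):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    return fused_value, 1.0 / total_weight


# Usage: an optical sensor (low noise) and an accelerometer-derived estimate (higher noise).
position, variance = fuse_estimates([(10.0, 1.0), (14.0, 3.0)])
```

The fused variance is always smaller than the smallest input variance, which is the basic argument for combining sensors rather than trusting the best one alone.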

Energy management remains a critical concern. High-torque actuators for piano key depression can draw 2 to 3 kW during fortissimo passages. Modern systems like Festo's BionicCobot address this through regenerative braking systems that recover energy during release motions, improving efficiency by 40% compared to earlier models.

The auditory feedback loop represents the final frontier. While early robotic bands played pre-programmed scores, next-generation systems such as Georgia Tech's Shimon can improvise. Using transformer-based neural networks trained on jazz improvisation patterns, the marimba-playing robot analyzes harmonic context at 12 ms intervals to generate original melodic lines.

Ethical debates persist over the role of robotic musicians in the creative arts. However, as the Cologne-based ARTronica project demonstrated during its 2023 European tour, human-robot collaborations can open up new musical possibilities. The project's cellist robot, equipped with dynamic vibrato control, responded to human performers' tempo variations through wireless bio-signal monitoring.
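Following a human performer's tempo can be illustrated with a simple smoothing filter. The sketch below makes no claim about how ARTronica's system works: it assumes beat timestamps have already been extracted from whatever signal is monitored, and it tracks tempo by exponentially smoothing the intervals between beats. The function name `track_tempo` and the default values are hypothetical.

```python
def track_tempo(beat_times, alpha=0.3, initial_bpm=120.0):
    """Return a smoothed tempo estimate in BPM after each new beat.

    alpha near 1 reacts quickly to tempo changes; alpha near 0 is steadier.
    """
    interval = 60.0 / initial_bpm  # current estimate of seconds per beat
    estimates = []
    for previous, current in zip(beat_times, beat_times[1:]):
        observed = current - previous
        if observed <= 0:
            continue  # skip out-of-order or duplicate timestamps
        interval = (1 - alpha) * interval + alpha * observed
        estimates.append(60.0 / interval)
    return estimates
```

The choice of `alpha` is the musical trade-off: too high and the robot chases every small fluctuation, too low and it lags behind an intentional ritardando.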

From jazz clubs to symphony halls, robotic bands are not replacing human musicians but expanding music's technical vocabulary. As Stanford researchers recently noted in their paper "Robotic Ensembles and Temporal Plasticity," these systems force us to re-examine fundamental assumptions about rhythm, timbre, and collective musical intelligence. The fusion of IEC 61131-3 control languages with artistic expression continues to challenge both engineers and artists, composing a new chapter in the eternal dance between technology and creativity.