Computer memory interfaces serve as critical pathways between a computer's central processing unit (CPU) and its memory subsystems. These interfaces determine how efficiently data is transferred, stored, and retrieved, directly impacting system performance. Over the decades, memory interface technologies have evolved significantly to meet the demands of faster processors and complex applications. This article explores the major types of computer memory interfaces and their functionalities, providing insights into their roles in modern computing.

1. SDRAM Interfaces

Synchronous Dynamic Random-Access Memory (SDRAM) interfaces revolutionized memory technology by synchronizing data transfers with the system bus clock. Introduced in the 1990s, SDRAM eliminated timing inconsistencies seen in earlier asynchronous DRAM. Key features include:

- Clock Synchronization: Operates in step with the system bus clock, enabling predictable data transfer cycles.

- Burst Mode: Transfers multiple data words in rapid succession after a single address request.

- Voltage: Typically operates at 3.3V.

SDRAM laid the groundwork for later advancements like DDR (Double Data Rate) memory.
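
Burst mode is easier to picture with a small model. The following is a minimal sketch, assuming the sequential burst ordering in which the column address wraps within a block aligned to the burst length; the function name `burst_columns` is hypothetical and the model ignores timing entirely.

```python
def burst_columns(start_col: int, burst_length: int) -> list[int]:
    """Return the column addresses touched by one sequential burst.

    Assumption: the burst wraps inside the block of `burst_length`
    columns that contains `start_col` (sequential wrap ordering).
    """
    if burst_length not in (1, 2, 4, 8):
        raise ValueError("burst length must be 1, 2, 4, or 8")
    offset = start_col % burst_length      # position inside the aligned block
    base = start_col - offset              # first column of the aligned block
    return [base + (offset + i) % burst_length for i in range(burst_length)]


# One address request at column 5 yields four data words.
print(burst_columns(5, 4))  # [5, 6, 7, 4]
```

A single request therefore returns several words without the controller issuing a new column address for each one.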

2. DDR (Double Data Rate) Interfaces

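The DDR family represents the most widely used memory interfaces in consumer and enterprise systems. Every generation, from DDR1 through DDR5, transfers data on both the rising and falling edges of the clock signal, which doubles the data rate relative to single-data-rate SDRAM at the same clock. Each successive generation then roughly doubles the peak data rate of its predecessor, mainly through deeper prefetch and faster I/O clocks.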

- DDR1 (2000): Operated at 2.5V with speeds up to 400 MT/s (megatransfers per second).

- DDR2 (2003): Reduced voltage to 1.8V and doubled the prefetch buffer from 2n to 4n for higher bandwidth.

- DDR3 (2007): Dropped to 1.5V and achieved speeds up to 2133 MT/s.

- DDR4 (2014): Further reduced voltage (1.2V) and introduced bank groups for parallel operations.

- DDR5 (2020): Launched with speeds up to 6400 MT/s, splits each module into two independent 32-bit subchannels, and moves voltage regulation onto the module for finer power management.

Functionality: DDR interfaces prioritize high bandwidth and energy efficiency, making them ideal for general-purpose computing, gaming, and servers.
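
The transfer rates above translate into peak bandwidth once the bus width is known. The sketch below assumes a 64-bit data bus per module (ECC bits excluded) and computes a theoretical peak only; sustained throughput in a real system will be lower. The function name `peak_bandwidth_gbs` is hypothetical.

```python
def peak_bandwidth_gbs(mega_transfers_per_s: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


# Rates taken from the generation list above.
for name, rate in [("DDR-400", 400), ("DDR3-2133", 2133), ("DDR5-6400", 6400)]:
    print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s")
```

For DDR5 the 64 bits are the sum of the two 32-bit subchannels, so the per-module peak is computed the same way.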

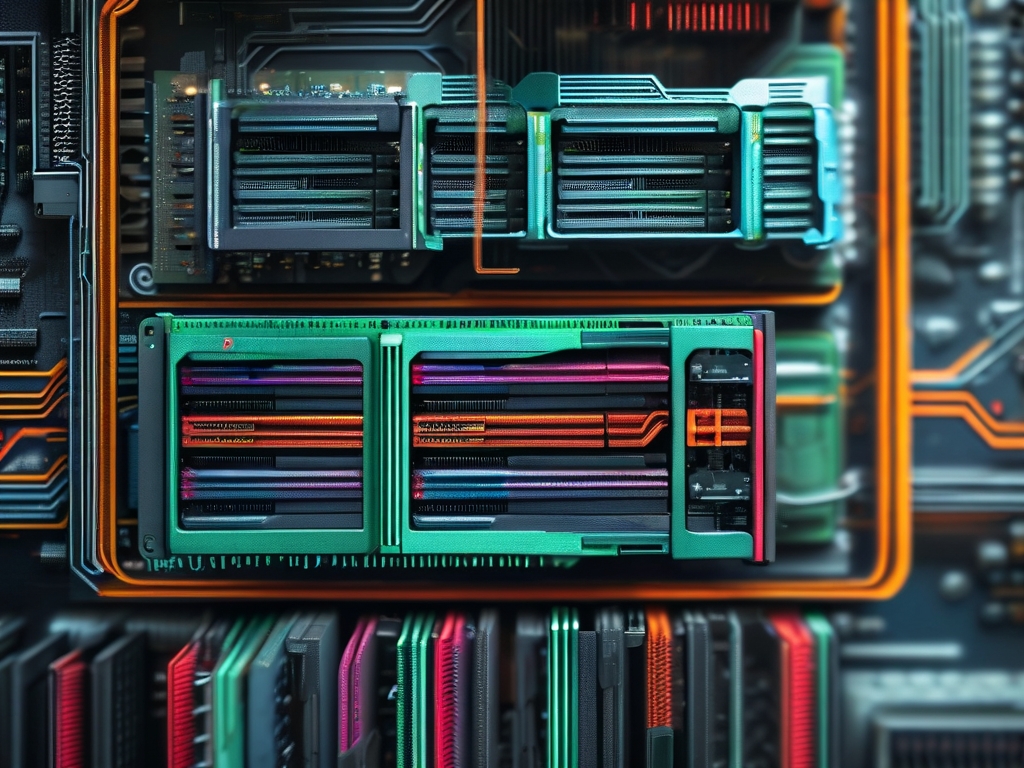

3. HBM (High Bandwidth Memory)

HBM is a cutting-edge interface designed for high-performance computing (HPC) and graphics processing units (GPUs). Unlike traditional planar memory designs, HBM stacks memory dies vertically using through-silicon vias (TSVs), enabling:

- Ultra-Wide Buses: 1024-bit interfaces per stack (vs. 64-bit in DDR).

- Reduced Latency: Shorter physical pathways between stacked layers.

- Energy Efficiency: Lower operating voltages (1.2V) and reduced signal travel distances.

HBM2E reaches up to 3.6 Gb/s per pin, or roughly 460 GB/s per stack, and processors that combine several stacks exceed 1 TB/s of aggregate bandwidth. This makes HBM indispensable for AI accelerators and data centers.
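
To see why bus width matters so much, the sketch below compares one 1024-bit stack with one 64-bit DDR channel. The per-pin rates (3.6 Gb/s for the stack, 6.4 Gb/s for a DDR5-6400 channel) are assumed inputs chosen for illustration, and the function name `bus_bandwidth_gbs` is hypothetical.

```python
def bus_bandwidth_gbs(pin_rate_gbps: float, width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s for a bus of `width_bits` data pins."""
    return pin_rate_gbps * width_bits / 8


hbm_stack = bus_bandwidth_gbs(3.6, 1024)   # assumed HBM2E-class pin rate
ddr_channel = bus_bandwidth_gbs(6.4, 64)   # assumed DDR5-6400 channel

print(f"HBM stack:   {hbm_stack:.1f} GB/s")
print(f"DDR channel: {ddr_channel:.1f} GB/s")
print(f"Ratio:       {hbm_stack / ddr_channel:.1f}x")
```

Under these assumptions the stack runs each pin slower than the DDR channel yet delivers about nine times the bandwidth, because the interface is sixteen times wider.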

4. GDDR (Graphics Double Data Rate)

GDDR interfaces are optimized for graphics-intensive workloads. Developed as an offshoot of DDR, GDDR prioritizes bandwidth over latency, with features like:

- Wide Bus Architectures: 256-bit or 512-bit interfaces.

- High Per-Pin Data Rates: GDDR6X achieves 21 Gb/s per pin using PAM4 signaling.

- Thermal Resilience: Designed to withstand heat generated by GPUs.

GDDR6 and GDDR6X are widely used in gaming GPUs and workstations requiring real-time rendering.

5. LPDDR (Low Power DDR)

LPDDR interfaces cater to mobile devices, emphasizing energy savings. Key traits include:

- Voltage Scaling: LPDDR4X lowers the I/O supply voltage to 0.6V, down from 1.1V in LPDDR4.

- Partial Array Self-Refresh: Refreshes only the portions of the array that hold data, reducing standby power consumption.

- Small Form Factors: Integrated into smartphones, tablets, and IoT devices.

LPDDR5X, a recent iteration, delivers up to 8533 MT/s, and vendor announcements cite roughly 20% lower power consumption than LPDDR5.
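
The payoff from lowering supply voltage can be estimated with the standard first-order model in which switching power scales with the square of the voltage (P ∝ C·V²·f). The sketch below is an approximation under that assumption, with capacitance and frequency held fixed; the 1.1 V and 0.6 V inputs are assumed I/O supply values, the function name is hypothetical, and the result is not a measured figure for any device.

```python
def relative_dynamic_power(v_new: float, v_old: float) -> float:
    """Ratio of switching power at v_new to that at v_old.

    Assumes P is proportional to C * V**2 * f with C and f unchanged.
    """
    return (v_new / v_old) ** 2


ratio = relative_dynamic_power(0.6, 1.1)
print(f"I/O switching power at 0.6 V is about {ratio:.0%} of that at 1.1 V")
```

Total device power falls by much less than this, since the core supply, refresh, and leakage are not covered by the model.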

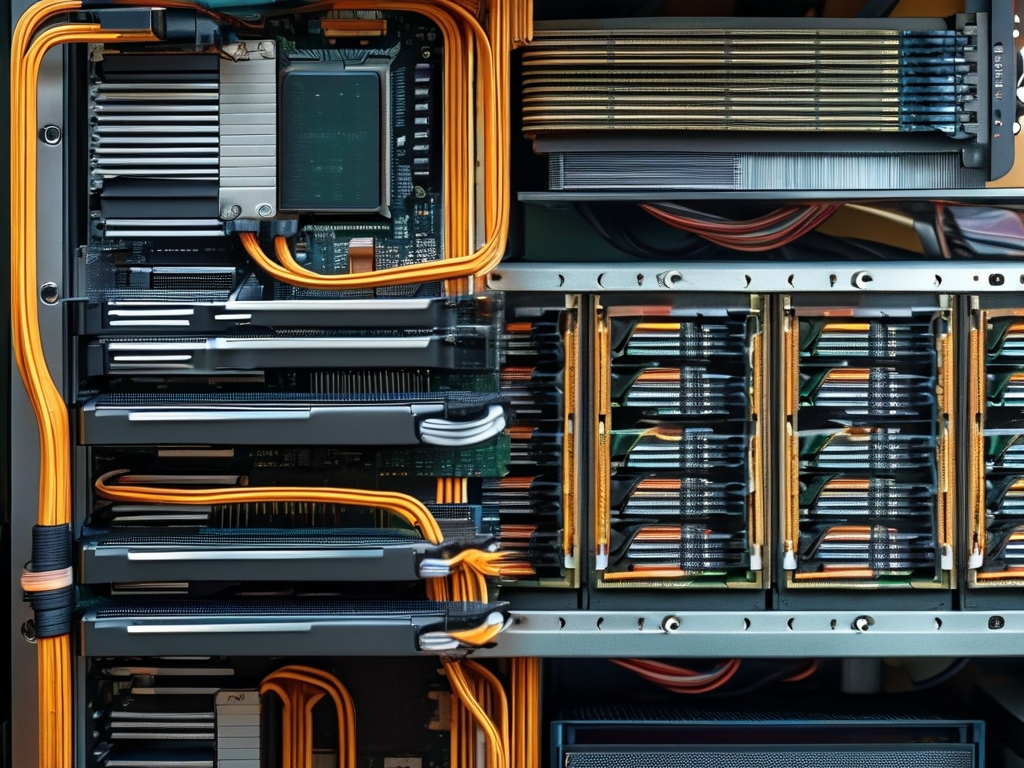

6. Memory Channel Architectures

Modern systems employ multi-channel configurations to maximize bandwidth:

- Dual-Channel: Two independent 64-bit channels operate in parallel, typically populated with matched modules.

- Quad-Channel: Four channels, common in high-end desktops and servers.

- Octa-Channel: Eight channels, seen in enterprise-grade systems like AMD’s EPYC and Threadripper PRO.

These architectures reduce bottlenecks by distributing data across multiple pathways.
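
One way to picture this distribution is address interleaving, where consecutive blocks of memory are assigned to channels in rotation. The following is a minimal sketch, assuming simple modulo interleaving at a 64-byte granularity; real memory controllers often use more elaborate address hashing, and the function names here are hypothetical.

```python
from collections import Counter


def channel_for_address(addr: int, num_channels: int, granularity: int = 64) -> int:
    """Map a physical address to a channel by rotating every `granularity` bytes."""
    return (addr // granularity) % num_channels


def spread(num_channels: int, num_blocks: int = 8, granularity: int = 64) -> Counter:
    """Count how many of the first `num_blocks` blocks land on each channel."""
    addrs = (i * granularity for i in range(num_blocks))
    return Counter(channel_for_address(a, num_channels, granularity) for a in addrs)


print(dict(spread(2)))  # {0: 4, 1: 4}
print(dict(spread(4)))  # {0: 2, 1: 2, 2: 2, 3: 2}
```

A sequential access stream is split evenly across the channels, so each channel serves only a fraction of the requests.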

7. Emerging Interfaces

- CXL (Compute Express Link): A cache-coherent interconnect built on the PCIe physical layer that links CPUs to memory expanders and accelerators, letting devices share memory coherently.

- OpenCAPI: An open-standard interface for high-speed data sharing between CPUs, GPUs, and FPGAs. Its specifications were transferred to the CXL Consortium in 2022.

Computer memory interfaces have evolved from simple asynchronous designs to sophisticated, application-specific architectures. DDR remains dominant in mainstream computing, while HBM and GDDR address niche high-performance needs. Innovations like CXL and LPDDR5X highlight the industry’s focus on scalability, efficiency, and specialization. Understanding these interfaces is crucial for optimizing system performance across diverse workloads, from mobile apps to AI-driven analytics.