At the dawn of computing, memory technology laid the foundation for modern digital systems. Early computer memory modules were far removed from today’s sleek, high-capacity RAM sticks. Their journey from rudimentary magnetic cores to silicon-based designs reflects decades of innovation, engineering challenges, and breakthroughs that shaped computing as we know it.

The Magnetic Core Era

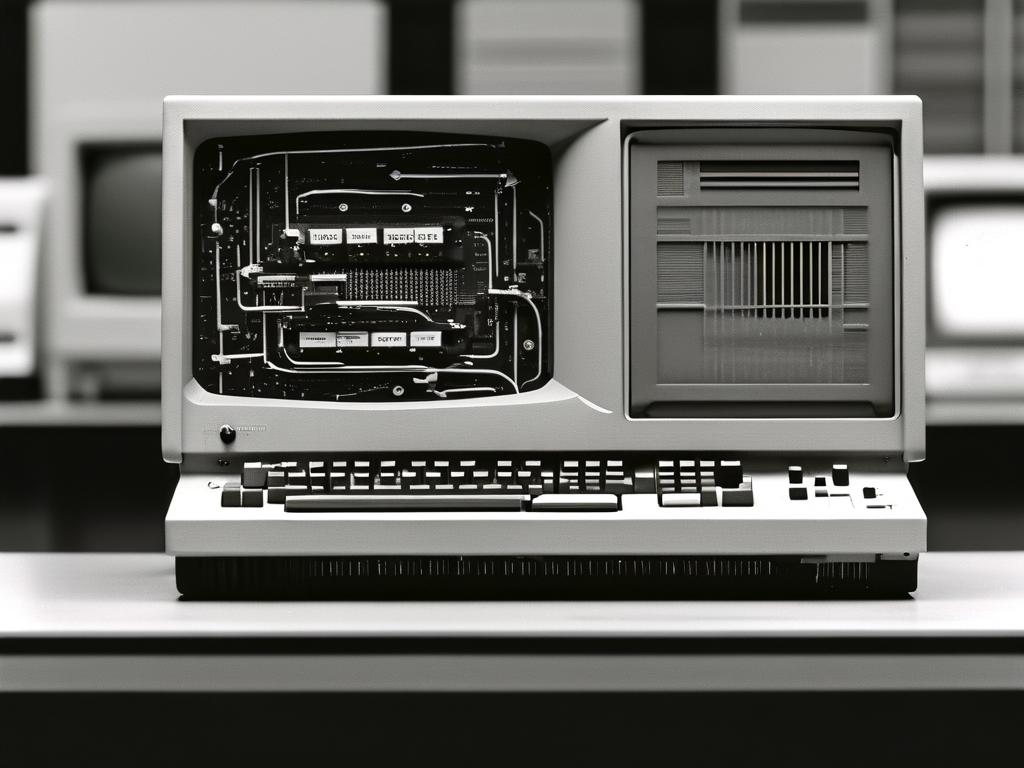

The 1950s and 1960s saw the dominance of magnetic core memory, a technology that stored data using tiny magnetized rings threaded by wires. Each core represented a single bit, with the direction of magnetization indicating 0 or 1. Assembling these modules required meticulous manual labor, as workers wove wires through hundreds or even thousands of cores. Despite its complexity, magnetic core memory was non-volatile, retaining data without power, and became a staple in systems like the IBM 1401 and Apollo Guidance Computer. However, its high production costs and limited scalability pointed to the need for alternatives.

Semiconductor Memory Emerges

The late 1960s marked a turning point with the advent of semiconductor memory. Fairchild Semiconductor and Intel were among the pioneers of early memory chips, and the Intel 1103, a dynamic RAM (DRAM) chip introduced in 1970, began to replace magnetic cores with silicon-based cells. DRAM stored data as electric charges in capacitors, offering higher density and faster access times than core memory, although the charge leaks away and must be refreshed periodically. By the 1970s, static RAM (SRAM) also gained traction for its speed, though its higher cost restricted it to specialized applications like cache memory. These innovations drastically reduced memory module sizes while increasing reliability, setting the stage for personal computing.

Standardization and Modular Design

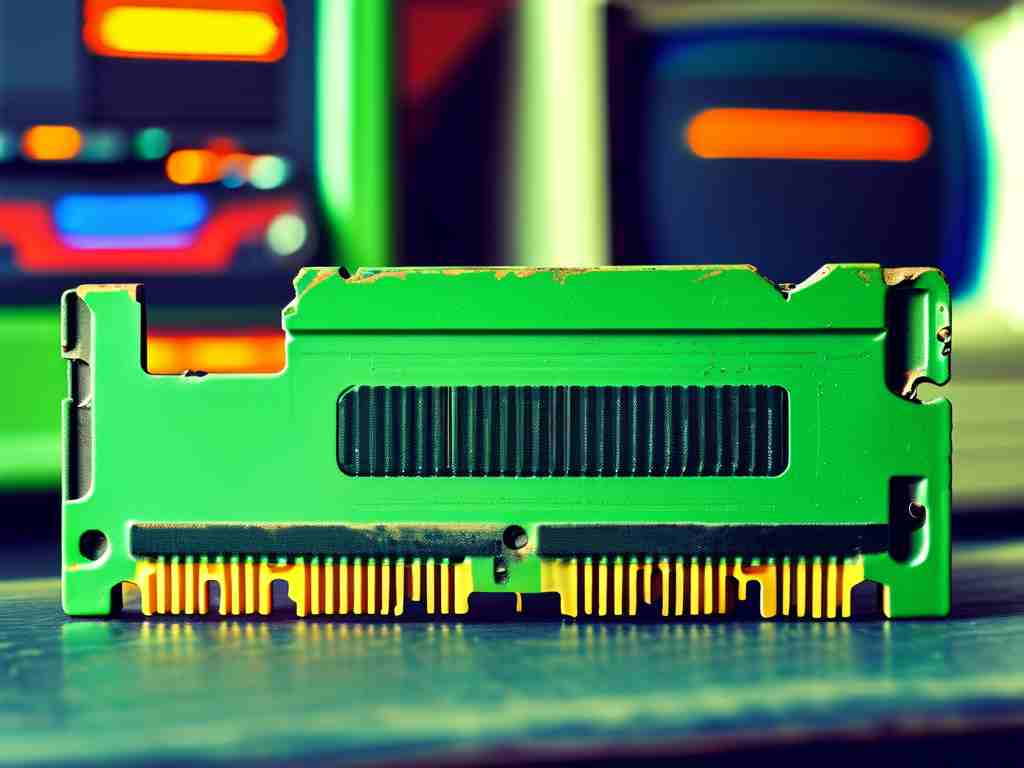

Early memory integration was chaotic, with manufacturers using proprietary designs. This changed in the 1980s with the introduction of standardized modules. The Single In-Line Memory Module (SIMM), developed by Wang Laboratories, became a game-changer. SIMMs consolidated multiple DRAM chips onto a single circuit board, simplifying installation and upgrades. The 30-pin SIMM, for instance, became a staple in IBM-compatible PCs, offering capacities from 256 KB to 16 MB. Later, 72-pin SIMMs widened the data path from 8 bits to 32 bits, supporting 32-bit systems like the Apple Macintosh.

Challenges and Innovations

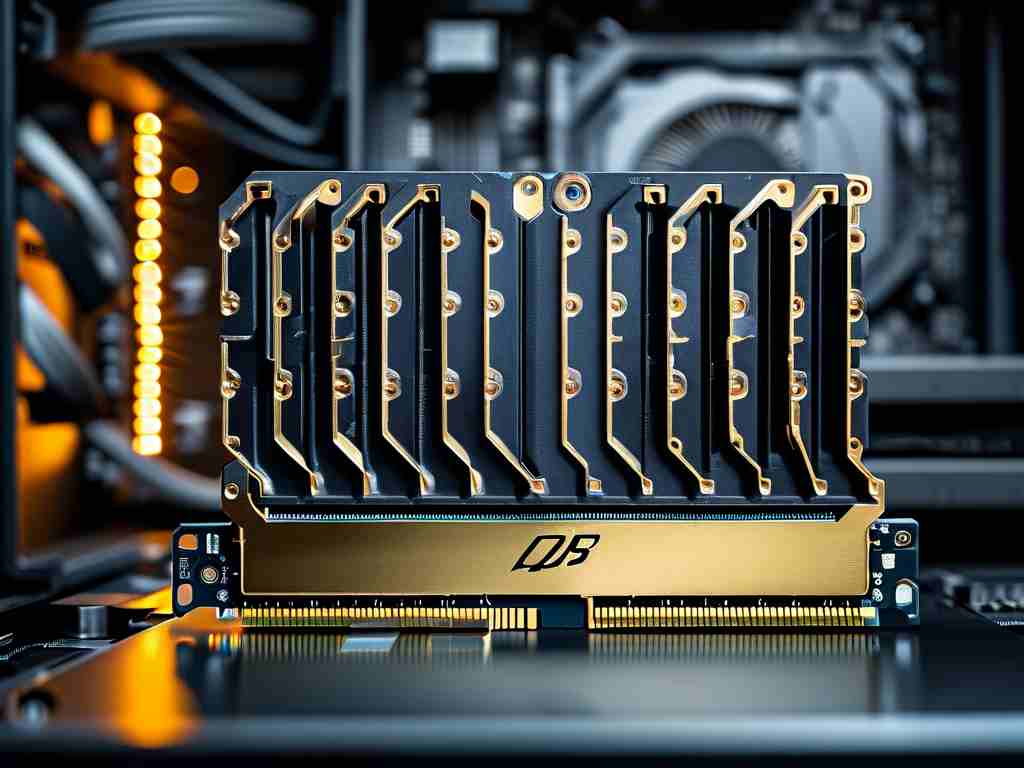

Early memory modules faced significant hurdles. Heat dissipation, signal interference, and manufacturing defects were common. Engineers responded with innovations such as parity checking, which adds an extra bit to each stored byte so that a single flipped bit can be detected, and error-correcting code (ECC) memory, which can also correct such errors in critical systems. The shift from through-hole to surface-mount technology (SMT) in the 1990s further miniaturized components, enabling higher-density modules like the 168-pin Dual In-Line Memory Module (DIMM).
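To make the difference between parity and ECC concrete, here is a minimal Python sketch. It is an illustration only, not a description of any particular module's circuitry: the function names are hypothetical, even parity over one byte is assumed, and the toy Hamming(7,4) code stands in for the wider codes used by real ECC memory.

```python
from typing import List, Tuple


def parity_bit(byte: int) -> int:
    """Even-parity bit for an 8-bit value: 1 if the byte has an odd number of 1s."""
    return bin(byte & 0xFF).count("1") % 2


def store_with_parity(byte: int) -> Tuple[int, int]:
    """Model a parity module: keep the byte together with its parity bit."""
    return byte & 0xFF, parity_bit(byte)


def check_parity(byte: int, stored_parity: int) -> bool:
    """True if the byte still agrees with the parity bit saved alongside it."""
    return parity_bit(byte) == stored_parity


def hamming74_encode(nibble: int) -> List[int]:
    """Encode 4 data bits into a 7-bit Hamming codeword (positions 1..7)."""
    code = [0] * 8  # index 0 is unused so list indices match bit positions
    code[3], code[5], code[6], code[7] = [(nibble >> i) & 1 for i in range(4)]
    code[1] = code[3] ^ code[5] ^ code[7]  # covers positions 1, 3, 5, 7
    code[2] = code[3] ^ code[6] ^ code[7]  # covers positions 2, 3, 6, 7
    code[4] = code[5] ^ code[6] ^ code[7]  # covers positions 4, 5, 6, 7
    return code[1:]


def hamming74_correct(bits: List[int]) -> Tuple[int, int]:
    """Repair at most one flipped bit; return (data nibble, error position or 0)."""
    code = [0] + list(bits)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos  # XOR of the positions holding a 1
    if syndrome:
        code[syndrome] ^= 1  # a nonzero syndrome names the faulty position
    nibble = code[3] | (code[5] << 1) | (code[6] << 2) | (code[7] << 3)
    return nibble, syndrome


if __name__ == "__main__":
    word, p = store_with_parity(0b10110010)
    print("one bit flipped, parity still valid:", check_parity(word ^ 0b100, p))
    print("two bits flipped, parity still valid:", check_parity(word ^ 0b110, p))

    codeword = hamming74_encode(0b1011)
    codeword[4] ^= 1  # simulate a single-bit fault at position 5
    print("recovered nibble and error position:", hamming74_correct(codeword))
```

Parity can only report that a single bit changed, and it misses a pair of flipped bits entirely. The Hamming code spends three check bits on four data bits in exchange for locating and repairing a single-bit fault, which is the trade-off that made ECC attractive for critical systems.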

Legacy and Modern Parallels

The evolution of early memory modules directly influenced today’s DDR SDRAM and NAND flash technologies. Concepts like modular design and error correction remain integral. Interestingly, vintage computing enthusiasts now repurpose legacy modules—such as 30-pin SIMMs—for retro hardware restoration, highlighting their enduring relevance.

In retrospect, early computer memory modules were more than hardware; they were catalysts for the digital revolution. Their transformation from hand-wired magnetic arrays to standardized silicon boards underscores the relentless pursuit of efficiency and accessibility in computing. As modern systems push toward terabyte-scale RAM and quantum storage, the lessons from these pioneering technologies continue to resonate.