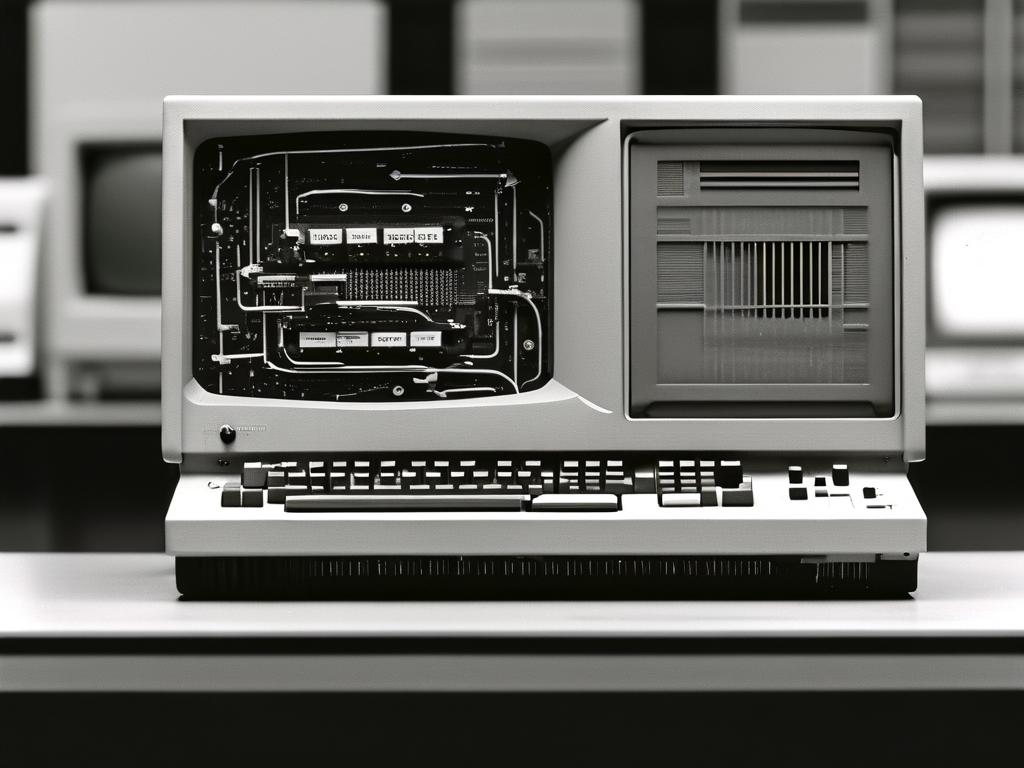

The dawn of computing in the 1940s and 1950s marked a revolutionary era with first-generation machines like ENIAC and UNIVAC I, which relied on primitive yet ingenious methods to handle memory. These early systems faced immense challenges in storing and accessing data, as they operated without the integrated circuits or solid-state memory familiar today. Instead, engineers devised innovative approaches to "calculate" memory, referring to the processes of addressing, retrieving, and manipulating stored information through mechanical and electronic means. This foundational work not only powered early computations but also laid the groundwork for modern computing architectures. Understanding how these pioneers managed memory reveals the ingenuity of an era defined by vacuum tubes and magnetic drums.

First-generation computers often used delay line memory, in which data circulated as a train of acoustic pulses through a medium such as a tube of mercury or, in later designs, a length of magnetostrictive wire. For instance, the EDSAC computer, which ran its first program in 1949, employed mercury delay lines that held bits as pulses; each pulse emerging at the far end was amplified, reshaped, and fed back into the line. To access memory, the control circuitry counted clock pulses to track which word was emerging from the line at any moment. Each memory "word" had a fixed position in the circulating train, so reading or writing an address meant waiting until that word came round and then acting on it during its brief time at the output. Access times therefore ranged up to the full circulation time of a line, on the order of a millisecond, and retrieval was inherently sequential, but it was adequate for the basic arithmetic these machines performed. Programmers referred to memory locations by numeric address: a load of the word at address 100 might be written LDA 100 in later mnemonic style, while EDSAC itself used single-letter order codes. On some delay-line machines, programmers also arranged instructions and data so that the next word needed would emerge just as it was wanted. This hands-on approach required deep knowledge of the hardware, as any error in timing or placement could corrupt data or halt the machine.
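The timing bookkeeping of a delay line can be sketched with a toy model of a single recirculating line. This is a minimal Python sketch, not a description of EDSAC's actual circuits: the class name `DelayLine`, the word-time clock, and the parameter values (32 words per line, 36 µs per word, roughly in the range of EDSAC's long mercury tanks) are illustrative assumptions.

```python
class DelayLine:
    """Toy model of one recirculating delay line (hypothetical, illustrative).

    Words circulate in a fixed order and only the word currently at the
    output can be read or rewritten. Time is counted in word-times: one
    tick is the time for one word to pass the output transducer.
    """

    def __init__(self, n_words: int, word_time_us: float):
        self.n_words = n_words
        self.word_time_us = word_time_us
        self.slots = [0] * n_words  # stored words, indexed by position in the loop
        self.tick = 0               # global clock in word-times

    def current_slot(self) -> int:
        """Position of the word emerging from the line right now."""
        return self.tick % self.n_words

    def wait_ticks(self, address: int) -> int:
        """Word-times until `address` reaches the output."""
        return (address - self.current_slot()) % self.n_words

    def _arrive(self, address: int) -> float:
        """Advance the clock until `address` emerges; return the wait in microseconds."""
        ticks = self.wait_ticks(address)
        self.tick += ticks
        return ticks * self.word_time_us

    def read(self, address: int) -> tuple[int, float]:
        wait_us = self._arrive(address)
        value = self.slots[address]
        self.tick += 1  # the word takes one word-time to pass the output
        return value, wait_us

    def write(self, address: int, value: int) -> float:
        wait_us = self._arrive(address)
        self.slots[address] = value  # new pulses replace the old ones on regeneration
        self.tick += 1
        return wait_us


if __name__ == "__main__":
    line = DelayLine(n_words=32, word_time_us=36.0)  # assumed, EDSAC-like values
    print(line.write(5, 42))  # waits 5 word-times from a standing start
    print(line.read(5))       # word 5 just passed, so wait almost a full loop
```

Writing word 5 from a standing start waits 5 word-times, but reading it back immediately afterward waits 31, because the word has just left the output and must travel almost the whole loop. That worst case, about 1.1 ms with these assumed numbers, is why the placement of instructions and data mattered on delay-line machines.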

Another common memory technology was magnetic drum storage, used in machines like the IBM 650. Here, data was recorded on a rotating drum coated with magnetic material, with read/write heads fixed over its tracks as the drum spun. Because the heads did not move, the main delay was rotational latency: the time spent waiting for the wanted word to rotate under a head. Each IBM 650 instruction carried the address of the next instruction as well as its data address, so programmers practiced "optimum programming," placing each instruction and operand at a drum position that would arrive just as the previous operation finished. Assemblers such as SOAP (Symbolic Optimal Assembly Program) later automated this placement, an early and far less sophisticated relative of modern disk scheduling. Computed addresses, such as a base address plus an offset, also had to be formed by arithmetic before the word could be fetched. A poorly placed program could spend much of its time simply waiting for the drum, which highlighted the trade-offs between memory capacity and speed. Depending on the model, the IBM 650's drum held only 1,000 to 4,000 ten-digit words, forcing developers to write highly efficient, compact code. Keeping the machines running also involved physical maintenance, such as adjusting heads or recalibrating timing circuits, which underscored the mechanical nature of early memory management.
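The payoff of optimum placement comes down to a few lines of modular arithmetic. The sketch below assumes a simplified drum with 50 words passing the heads per revolution and a 4.8 ms revolution (approximately the IBM 650's figures, but treat them as assumptions), and it models an instruction as taking one word-time to read plus a fixed number of word-times to execute. The function names are hypothetical; this illustrates the idea and is not how SOAP was implemented.

```python
# Assumed drum geometry, roughly matching published IBM 650 figures.
WORDS_PER_BAND = 50                       # words passing a head per revolution
REV_TIME_MS = 4.8                         # one revolution at 12,500 rpm
WORD_TIME_MS = REV_TIME_MS / WORDS_PER_BAND


def position_after(current_addr: int, exec_word_times: int) -> int:
    """Angular position (0..49) under the heads when an instruction stored at
    current_addr finishes: one word-time to read it, then exec_word_times."""
    return (current_addr + 1 + exec_word_times) % WORDS_PER_BAND


def rotational_wait(now_pos: int, address: int) -> int:
    """Word-times until `address` rotates under its head."""
    return (address % WORDS_PER_BAND - now_pos) % WORDS_PER_BAND


def wait_after(current_addr: int, exec_word_times: int, next_addr: int) -> int:
    """Latency before next_addr can be read once the current instruction ends."""
    return rotational_wait(position_after(current_addr, exec_word_times), next_addr)


def best_next_address(current_addr: int, exec_word_times: int, band: int) -> int:
    """Address in `band` that arrives exactly when the current instruction ends."""
    return band * WORDS_PER_BAND + position_after(current_addr, exec_word_times)


if __name__ == "__main__":
    cur, exec_time = 100, 3
    best = best_next_address(cur, exec_time, band=2)
    for nxt in (cur + 1, best):
        w = wait_after(cur, exec_time, nxt)
        print(f"next at {nxt}: wait {w} word-times ({w * WORD_TIME_MS:.2f} ms)")
```

With an instruction at address 100 that executes in 3 word-times, placing the next instruction at address 104 means it arrives with no wait, whereas the "obvious" sequential address 101 has already gone by and costs 47 word-times, roughly 4.5 ms, or nearly a full revolution.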

Core memory emerged late in the first generation as a faster, more reliable alternative, using tiny magnetic rings (cores) that stored each bit in the direction of their magnetization. Whirlwind I at MIT was one of the first machines to adopt it. In a coincident-current design, each core sits at the intersection of a row wire and a column wire; to select one, the memory drives half the switching current through its row and half through its column, so only the core at the crossing receives enough current to flip. Digital logic decoded each address into the correct row and column lines, so the selection happened in hardware rather than in the program. Reading was destructive: sensing a bit meant driving the core toward 0 and watching for the pulse produced if it flipped, so every read had to be followed by a write that restored the original value. The method improved speed and durability compared to delay lines and drums, and any word could be reached in the same short time regardless of its address. Memory sizes remained small, often a few thousand words, so large problems still required moving data to and from slower secondary storage such as magnetic drums and tape. Despite these constraints, these innovations enabled breakthroughs in scientific simulations and business applications, demonstrating how the mechanics of memory access shaped processing efficiency.
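Coincident-current selection and the destructive read can be illustrated with a toy core plane. In this Python sketch the class `CorePlane`, the half-current values, and the row-major address decoding are illustrative assumptions; a real memory stacks one plane per bit of the word and uses an inhibit wire during writes, both of which are omitted here.

```python
class CorePlane:
    """Toy coincident-current core plane: one bit per core on a rows x cols grid.

    A core switches only when the current through it reaches THRESHOLD.
    Each selected row and column line carries HALF of that, so only the
    core at their intersection switches (hypothetical, simplified model).
    """

    HALF = 0.5
    THRESHOLD = 1.0

    def __init__(self, rows: int, cols: int):
        self.rows, self.cols = rows, cols
        self.bits = [[0] * cols for _ in range(rows)]

    def decode(self, address: int) -> tuple[int, int]:
        """Address decoding: which row and column drive lines to energize."""
        return divmod(address, self.cols)

    def _drive(self, row: int, col: int, value: int) -> None:
        """Send half-current down one row and one column; cores whose summed
        current reaches THRESHOLD are magnetized to `value`."""
        for r in range(self.rows):
            for c in range(self.cols):
                current = (self.HALF if r == row else 0.0) + (self.HALF if c == col else 0.0)
                if current >= self.THRESHOLD:
                    self.bits[r][c] = value

    def read(self, address: int) -> int:
        row, col = self.decode(address)
        before = self.bits[row][col]
        self._drive(row, col, 0)  # drive toward 0; a 1 that flips induces a sense pulse
        sensed = 1 if before == 1 and self.bits[row][col] == 0 else 0
        self._drive(row, col, sensed)  # destructive read: write the value back
        return sensed

    def write(self, address: int, value: int) -> None:
        row, col = self.decode(address)
        self._drive(row, col, value)


if __name__ == "__main__":
    plane = CorePlane(4, 4)
    plane.write(5, 1)
    print(plane.read(5), plane.read(6))
```

Writing address 5 in a 4-by-4 plane flips only the core at row 1, column 1, even though every other core in that row and column receives half-current. Reading it back clears the core and immediately restores it, which is the extra write cycle that destructive readout imposes.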

The limitations of first-generation memory systems profoundly influenced computer design. Slow access times and high error rates prompted research into faster, more reliable technologies, paving the way for core memory's dominance and, later, the transition to semiconductor RAM. The experience of juggling small, fast stores against large, slow ones also helped motivate later ideas such as virtual memory and caching, which are now standard in modern systems. Reflecting on this era, it's clear that the "calculation" of memory was never just arithmetic; it was a close coordination of hardware timing and software placement that defined computational progress. Today, as we enjoy gigabytes of near-instant memory, we owe a debt to these pioneering efforts that turned theoretical concepts into practical tools for human advancement.