The integration of artificial intelligence (AI) into robotics has revolutionized industries ranging from healthcare to manufacturing. However, as AI-driven robots become more autonomous, ensuring their safety has emerged as a critical priority. This article explores the evolving landscape of AI robot safety technologies, addressing vulnerabilities, mitigation strategies, and the ethical frameworks needed to build trust in these systems.

The Growing Importance of AI Robot Safety

AI robots are no longer confined to controlled environments. They now operate in dynamic settings, interacting with humans and making real-time decisions. While this autonomy enhances efficiency, it introduces risks such as algorithmic biases, unintended behaviors, and cybersecurity threats. A 2023 report by the International Robotics Safety Board highlighted that over 40% of industrial incidents involving robots were linked to software flaws or communication failures. This figure underscores the urgency of developing robust safety protocols.

Key Vulnerabilities in AI-Driven Robotics

One major challenge lies in the unpredictability of machine learning models. Unlike traditional programmed systems, AI robots learn from data, which can lead to unexpected outcomes. For instance, an AI-powered delivery robot might misinterpret a pedestrian’s movement, causing collisions. Additionally, adversarial attacks—where malicious actors manipulate input data—pose significant risks. Researchers at MIT recently demonstrated how subtle alterations to sensor data could trick an autonomous drone into deviating from its flight path.
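To make the idea of an adversarial perturbation concrete, here is a minimal toy sketch in Python. It assumes a hypothetical linear "obstacle ahead" detector; the weights, bias, and sensor readings are invented for illustration and do not come from the MIT work or any real drone. Each reading is nudged by a small amount in the direction that raises the detector's score, which is the sign-based perturbation idea applied to a linear model.

```python
# Toy illustration only: a hypothetical linear "obstacle ahead" detector.
# Weights, bias, and readings are made-up values, not from any real system.

def linear_score(weights, bias, readings):
    """Positive score means 'obstacle ahead'; negative means 'path clear'."""
    return sum(w * r for w, r in zip(weights, readings)) + bias

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def perturb(weights, readings, epsilon):
    """Shift each reading by at most epsilon in the direction that raises the score."""
    return [r + epsilon * sign(w) for w, r in zip(weights, readings)]

weights = [0.8, -0.6, 0.5]
bias = -0.1
clean = [0.2, 0.3, 0.1]
attacked = perturb(weights, clean, epsilon=0.05)

print(linear_score(weights, bias, clean))     # negative: path clear
print(linear_score(weights, bias, attacked))  # positive: phantom obstacle
```

No single reading moves by more than 0.05, yet the decision flips. Real perception models are far more complex, but the same principle explains why small, targeted input changes can have large effects.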

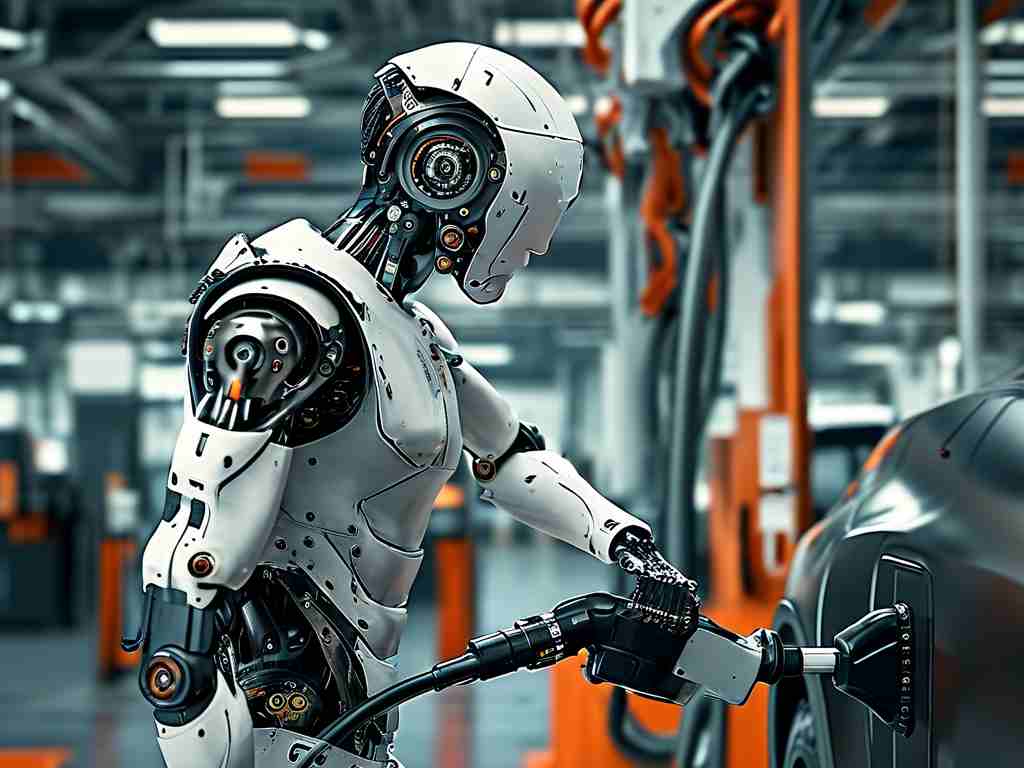

Cybersecurity is another critical concern. As robots connect to IoT networks, they become targets for hacking. A compromised industrial robot could leak sensitive data or even cause physical damage. In 2022, an automotive assembly plant in Germany halted production for three days after a ransomware attack disrupted its robotic workforce.

Innovations in Safety-Centric Technologies

To address these challenges, developers are leveraging advanced techniques. One approach involves "explainable AI" (XAI), which makes decision-making processes transparent. For example, XAI frameworks enable robots to log and justify their actions, simplifying audits and error detection. Companies like Boston Dynamics have integrated XAI into their quadruped robots, allowing operators to trace malfunctions to specific data inputs or algorithmic weights.
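As a rough sketch of what "logging and justifying" an action could look like, the Python example below records each decision together with the contribution of every input. It assumes a simple linear scoring rule so that contributions are easy to compute; the feature names, weights, and threshold are hypothetical, and this is not a description of how any vendor's system works.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class DecisionRecord:
    timestamp: float
    action: str
    score: float
    contributions: dict  # per-input share of the score

def explain_linear_decision(weights, inputs, threshold, timestamp):
    """Score the inputs with a linear rule and keep each input's contribution."""
    contributions = {name: weights[name] * inputs[name] for name in weights}
    score = sum(contributions.values())
    action = "stop" if score >= threshold else "proceed"
    return DecisionRecord(timestamp, action, score, contributions)

def top_factor(record):
    """Name of the input that influenced the decision most."""
    return max(record.contributions, key=lambda k: abs(record.contributions[k]))

# Hypothetical feature names and values.
record = explain_linear_decision(
    weights={"proximity": 2.0, "speed": 0.5},
    inputs={"proximity": 0.9, "speed": 0.4},
    threshold=1.5,
    timestamp=0.0,
)
print(json.dumps(asdict(record)))  # one auditable log line per decision
print(top_factor(record))          # the input an auditor would inspect first
```

An auditor reading such a log can see not only that the robot stopped, but which input drove the decision. Explaining deep models takes more sophisticated attribution methods, although the logged record could keep the same shape.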

Real-time monitoring systems are also gaining traction. These tools use edge computing to analyze sensor data locally, reducing latency. A case study from a Singaporean hospital showed that implementing real-time monitoring reduced robotic surgical errors by 27% within six months. Furthermore, hybrid architectures combining rule-based systems with machine learning are being tested to create "fail-safe" mechanisms. If an AI model generates an unsafe command, the rule-based layer can override it.
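The override pattern described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `Command` and `SafetyLimits` types, the limit values, and the obstacle-distance input are all hypothetical, and a production system would enforce such rules in certified, independently verified hardware or firmware.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Command:
    linear_speed: float   # m/s, as proposed by the learned policy
    angular_speed: float  # rad/s

@dataclass(frozen=True)
class SafetyLimits:
    # Hypothetical limits chosen for illustration.
    max_linear_speed: float = 1.0
    max_angular_speed: float = 1.5
    min_obstacle_distance: float = 0.5  # metres

STOP = Command(0.0, 0.0)

def clamp(value, limit):
    """Restrict value to the range [-limit, limit]."""
    return max(-limit, min(value, limit))

def safe_command(proposed, obstacle_distance, limits=SafetyLimits()):
    """Return (command_to_execute, overridden) after applying fixed rules."""
    # Rule 1: anything closer than the minimum distance forces a stop.
    if obstacle_distance < limits.min_obstacle_distance:
        return STOP, True
    # Rule 2: speeds are clamped to the configured envelope.
    clamped = Command(
        clamp(proposed.linear_speed, limits.max_linear_speed),
        clamp(proposed.angular_speed, limits.max_angular_speed),
    )
    return clamped, clamped != proposed

# The learned policy asks for 2 m/s; the rule layer caps it at 1 m/s.
print(safe_command(Command(2.0, 0.0), obstacle_distance=3.0))
```

The learned model never talks to the actuators directly. Every command passes through rules that are simple enough to review and test exhaustively.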

Ethical and Regulatory Considerations

Safety isn’t solely a technical issue—it’s also an ethical one. Governments and organizations are collaborating to establish standards. The EU’s proposed Artificial Intelligence Act mandates rigorous risk assessments for high-impact AI systems, including robotics. Similarly, IEEE’s Global Initiative on Ethics of Autonomous Systems emphasizes human oversight and accountability.

Public perception plays a role too. A survey by the Pew Research Center revealed that 58% of respondents distrust AI robots in caregiving roles due to safety concerns. Building trust requires transparency, such as publishing safety certifications or allowing third-party audits.

The Road Ahead: Collaborative Safety Ecosystems

Future advancements will rely on interdisciplinary collaboration. Universities, tech firms, and policymakers must work together to share data and best practices. For instance, OpenAI’s partnership with robotics startups has accelerated the adoption of safety-focused reinforcement learning models.

Investments in simulation environments are also crucial. Platforms like NVIDIA’s Isaac Sim allow developers to test AI robots in virtual scenarios, identifying risks before deployment. In one simulation study, training a warehouse robot on 10,000 synthetic edge cases kept it from misclassifying obstacles.
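To give a sense of what generating synthetic edge cases might involve, the Python sketch below samples randomized obstacle scenarios that are biased toward difficult conditions. It uses no simulator API. The `ObstacleScenario` fields and the parameter ranges are assumptions made for illustration, and a real pipeline would hand such parameters to a simulator to render sensor data.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class ObstacleScenario:
    distance_m: float  # distance from robot to obstacle
    width_m: float     # obstacle width; small values are easy to miss
    lighting: float    # 0 = dark, 1 = bright
    occluded: bool     # partially hidden behind another object

def generate_edge_cases(count, seed=0):
    """Sample scenarios biased toward hard conditions (hypothetical ranges)."""
    rng = random.Random(seed)  # fixed seed keeps the dataset reproducible
    scenarios = []
    for _ in range(count):
        # Extreme lighting only: either very dark or very bright.
        lighting = rng.choice([rng.uniform(0.0, 0.1), rng.uniform(0.9, 1.0)])
        scenarios.append(ObstacleScenario(
            distance_m=rng.uniform(0.1, 1.0),
            width_m=rng.uniform(0.02, 0.2),
            lighting=lighting,
            occluded=rng.random() < 0.5,
        ))
    return scenarios

cases = generate_edge_cases(10_000)
print(len(cases), cases[0])
```

Seeding the generator means a failure found on a particular scenario can be replayed exactly after the model is retrained.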

In conclusion, AI robot safety is a multifaceted endeavor requiring technological innovation, ethical foresight, and global cooperation. As these machines become ubiquitous, prioritizing safety will ensure they serve as reliable partners rather than unpredictable liabilities.