Embedded AI Development: Challenges, Strategies, and Future Prospects

The integration of artificial intelligence (AI) into embedded systems has revolutionized industries ranging from healthcare to automotive engineering. Embedded AI development refers to the process of deploying machine learning (ML) models and algorithms on resource-constrained hardware devices, enabling real-time decision-making at the "edge" rather than relying on cloud-based solutions. While this technology promises unprecedented efficiency and innovation, it also presents unique challenges that demand careful planning, optimization, and interdisciplinary collaboration.

The Rise of Embedded AI

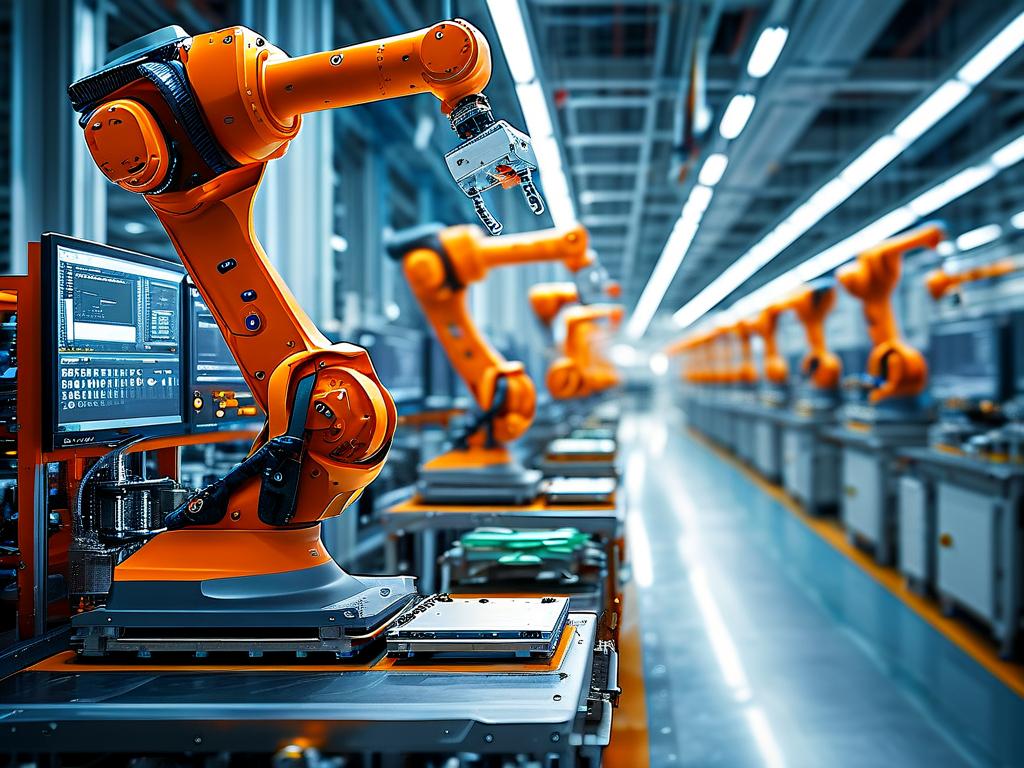

Embedded AI has gained momentum due to the growing need for low-latency, privacy-preserving, and energy-efficient computing. Traditional cloud-based AI systems often struggle with data-transmission delays, security vulnerabilities, and high operational costs. In contrast, embedded AI processes data locally on devices like microcontrollers, sensors, or edge servers, reducing reliance on external networks. Applications include autonomous drones, smart home devices, industrial IoT sensors, and wearable health monitors. For example, a self-driving car’s collision avoidance system must analyze camera feeds within milliseconds, a deadline that a network round trip to the cloud cannot reliably meet.

Key Challenges in Embedded AI Development

- Hardware Limitations: Embedded systems typically operate under strict constraints, including limited processing power, memory, and energy budgets. Deploying complex neural networks on devices with kilobytes of RAM or megahertz-level clock speeds requires drastic model optimization. Developers must balance accuracy with computational efficiency, often sacrificing model size or complexity.

- Algorithm Optimization: Not all AI algorithms are suitable for embedded deployment. Deep learning models like convolutional neural networks (CNNs) may require pruning, quantization, or knowledge distillation to reduce their footprint. For instance, quantization converts 32-bit floating-point weights to 8-bit integers, shrinking model size by 75% but risking accuracy loss.

- Power Consumption: Energy efficiency is critical for battery-powered devices. Running AI workloads continuously can drain power rapidly. Techniques such as duty cycling (activating AI modules only when needed) or leveraging hardware accelerators (e.g., NPUs) help mitigate this issue.

- Software-Hardware Co-Design: Achieving optimal performance requires tight integration between software and hardware. Developers must understand the target platform’s architecture to exploit parallelism, memory hierarchies, or specialized instructions. Frameworks like TensorFlow Lite for Microcontrollers or PyTorch Mobile simplify deployment but still demand platform-specific tuning.

- Real-Time Performance: Many embedded AI applications operate in time-sensitive environments. A delayed response in medical devices or robotic systems could have catastrophic consequences. Ensuring deterministic execution times while managing interrupts and multitasking remains a persistent hurdle.
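The quantization trade-off mentioned under Algorithm Optimization can be made concrete with a small sketch. The code below assumes symmetric per-tensor quantization with a single shared scale, which is only one of several schemes in use. The function names and example weights are illustrative and not taken from any particular framework.

```python
from typing import List, Tuple


def quantize_symmetric_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to int8 values in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # An all-zero tensor has no range; any positive scale works in that case.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


def storage_saving(num_weights: int) -> float:
    """Fraction of bytes saved going from float32 to int8 plus one float32 scale."""
    float32_bytes = 4 * num_weights
    int8_bytes = num_weights + 4  # 1 byte per weight, 4 bytes for the scale
    return 1.0 - int8_bytes / float32_bytes


if __name__ == "__main__":
    weights = [0.82, -0.41, 0.05, -1.27, 0.33]  # made-up example values
    q, scale = quantize_symmetric_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 weights:", q)
    print("worst-case round-trip error:", worst)
    print("storage saved for 10,000 weights:", storage_saving(10_000))
```

The round-trip error of each weight is bounded by about half the scale, which is where the accuracy risk comes from: a tensor with a few large outliers gets a coarse scale, and its small weights lose most of their resolution.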

Strategies for Success

To overcome these challenges, developers adopt a combination of technical and methodological approaches:

- Model Compression: Techniques like pruning (removing redundant neurons), quantization (reducing numerical precision), and neural architecture search (searching for compact network designs) are essential. TinyML, a subfield focused on ultra-low-power devices, has popularized compact models such as MobileNet and SqueezeNet for embedded use.

- Hardware Acceleration: Modern microcontrollers increasingly include AI-specific cores. For example, Arm’s Ethos-U55 NPU accelerates ML inference on edge devices. FPGAs and ASICs also offer customizable solutions for high-performance workloads.

- Edge-Cloud Hybrid Systems: Some applications split tasks between local and cloud processing. A smart camera might run basic object detection on-device while offloading complex scene analysis to the cloud, balancing speed and capability.

- Energy-Aware Development: Tools like power profilers and dynamic voltage scaling help optimize energy use. Developers also prioritize low-power states and wake-on-event triggers to extend battery life.

- Robust Testing: Embedded AI systems must be validated under real-world conditions, including temperature extremes, electromagnetic interference, and fluctuating power supplies. Simulation tools and hardware-in-the-loop (HIL) testing help establish reliability before deployment.
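The effect of low-power states and wake-on-event triggers on battery life can be estimated with simple arithmetic. The sketch below assumes a device that alternates between one active state and one sleep state. All current and capacity figures are hypothetical placeholders rather than measurements from a real part, and the function names are illustrative.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Time-weighted average current for a device active `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_hours(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealized battery life: capacity divided by average current draw."""
    if avg_current_ma <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah / avg_current_ma


if __name__ == "__main__":
    # Hypothetical figures: 20 mA while running inference, 5 uA asleep,
    # a 220 mAh cell, and 10 ms of inference per second (1% duty cycle).
    active_ma, sleep_ma, capacity_mah = 20.0, 0.005, 220.0

    always_on = battery_life_hours(capacity_mah, active_ma)
    duty_cycled = battery_life_hours(
        capacity_mah, average_current_ma(active_ma, sleep_ma, duty_cycle=0.01)
    )
    print(f"always on:   {always_on:.1f} h")
    print(f"duty cycled: {duty_cycled:.1f} h")
```

This idealized model ignores wake-up energy, battery self-discharge, and capacity loss at high load, so a measured power profile should replace these estimates before design decisions are made.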

Future Prospects

The future of embedded AI is shaped by advancements in hardware, algorithms, and cross-industry collaboration:

- Specialized Chips: Silicon vendors are designing chips tailored for AI at the edge. Google’s Edge TPU and NVIDIA’s Jetson series exemplify this trend, offering high inference throughput within edge-level power budgets.

- Federated Learning: This privacy-focused technique allows devices to collaboratively train models without sharing raw data. For example, smartphones could improve a keyboard’s autocorrect model using local typing patterns, aggregating updates securely.

- TinyML Democratization: Community initiatives like the tinyML Foundation and platforms like Edge Impulse are making embedded AI accessible to non-experts. Low-code tools enable rapid prototyping for startups and researchers.

- Ethical and Regulatory Considerations: As embedded AI permeates critical systems, questions about accountability, bias, and safety arise. Standards like ISO 26262 (for automotive functional safety) and regulations like the GDPR (for data privacy) will influence development practices.

- Sustainability: Energy-efficient AI aligns with global sustainability goals. Researchers are exploring bio-inspired algorithms and spiking neural networks that mimic the brain’s efficiency, potentially reducing carbon footprints.
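The aggregation step in federated learning is commonly done as a sample-weighted average of the client models, often called federated averaging. The sketch below shows only that server-side step, under the assumption that each client reports a flat weight vector and its local sample count. Local training, communication, and the secure aggregation mentioned above are out of scope, and the function name is illustrative.

```python
from typing import List, Sequence, Tuple


def federated_average(
    client_updates: Sequence[Tuple[Sequence[float], int]]
) -> List[float]:
    """Average client weight vectors, weighting each by its local sample count."""
    if not client_updates:
        raise ValueError("at least one client update is required")
    total_samples = sum(n for _, n in client_updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, num_samples in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must report vectors of equal length")
        share = num_samples / total_samples  # this client's influence
        for i, w in enumerate(weights):
            averaged[i] += share * w
    return averaged


if __name__ == "__main__":
    # Two hypothetical devices; the second saw three times as much data.
    updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
    print(federated_average(updates))
```

Only the weight vectors and sample counts leave each device, so the raw typing data stays local. Weighting by sample count keeps a device with very little data from pulling the shared model as strongly as one with a lot.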

Embedded AI development sits at the intersection of innovation and constraint. While challenges like hardware limitations and real-time demands persist, advancements in optimization techniques and hardware design are paving the way for smarter, faster, and greener systems. As industries increasingly adopt edge computing, developers must prioritize interdisciplinary knowledge—combining expertise in ML, embedded systems, and domain-specific requirements—to unlock the full potential of this transformative technology. The journey from prototype to production may be arduous, but the rewards—autonomous systems, personalized healthcare, and sustainable infrastructure—are well worth the effort.