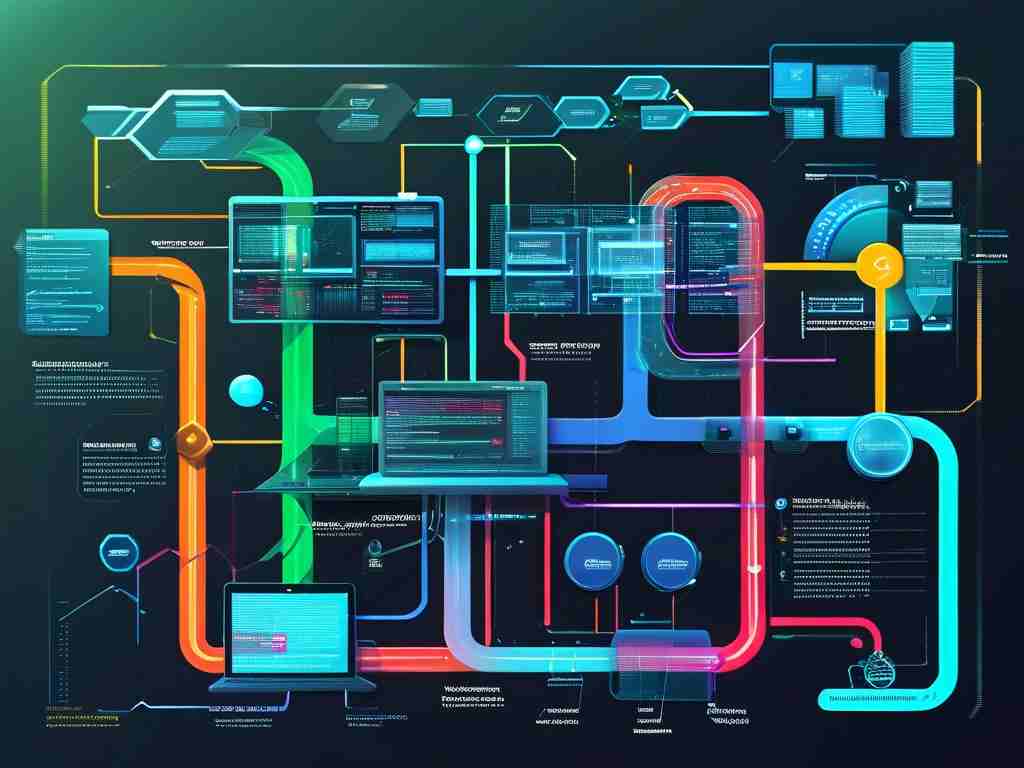

In today’s interconnected digital landscape, load balancing has become a cornerstone of modern network architecture, ensuring efficient resource utilization, minimizing latency, and improving system reliability. As applications grow more complex and user demands escalate, load balancing technologies have evolved to address diverse challenges. This article surveys current load balancing techniques, how they work, and where they are applied in practice.

1. Hardware-Based Load Balancers

Traditional hardware load balancers, such as those from F5 Networks (BIG-IP) or Citrix (ADC), remain prevalent in enterprise environments. These dedicated devices use specialized processors to distribute traffic across servers according to predefined algorithms such as Round Robin, Least Connections, or IP Hash. Their strengths are high throughput, low latency, and built-in features such as SSL/TLS offloading. However, their inflexibility and high cost make them less suitable for dynamic, cloud-native environments.
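To make the three algorithms concrete, here is a minimal Python sketch of each. It illustrates the selection logic only and is not how any appliance implements it internally; the server names (`app1` to `app3`) and the use of SHA-256 for IP Hash are illustrative assumptions.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Send each new connection to the server with the fewest active ones."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self):
        # min() returns the earliest-listed server when counts are tied.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to `server` closes.
        self.active[server] -= 1


def ip_hash(client_ip, servers):
    """Deterministically map a client IP to a server, so a client keeps
    reaching the same server as long as the pool does not change."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["app1", "app2", "app3"]

rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']

lc = LeastConnections(servers)
first, second = lc.pick(), lc.pick()  # app1, then app2
lc.release(first)
print(lc.pick())  # app1 (back to zero active connections)

print(ip_hash("203.0.113.7", servers) == ip_hash("203.0.113.7", servers))  # True
```

Because this IP Hash variant takes the hash modulo the pool size, adding or removing a server remaps most clients, not just those of the changed server.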

2. Software-Based Load Balancers

Software solutions such as NGINX, HAProxy, and Traefik have gained traction because of their flexibility and scalability. These tools run on standard servers, virtual machines, or containers and, depending on the tool, support algorithms such as Weighted Round Robin or Least Response Time. NGINX, for instance, excels at HTTP/HTTPS traffic management, while HAProxy is equally strong at TCP-level (layer 4) proxying and routing. Because they are open source and integrate easily with DevOps pipelines, they are well suited to microservices and containerized architectures.
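Weighted Round Robin is easy to get subtly wrong: a naive version that repeats each server "weight" times in a row sends a heavy server's whole share in one burst. The sketch below follows the "smooth" weighted round-robin scheme used in NGINX's upstream module, which interleaves picks instead, and adds a simplified Least Response Time picker based on an exponentially weighted moving average (EWMA). The class names and the `alpha` smoothing factor are hypothetical choices for illustration, not the API or configuration of either tool.

```python
class SmoothWeightedRoundRobin:
    """Heavier servers get proportionally more requests, but picks are
    spread out rather than sent in bursts."""

    def __init__(self, weights):
        self.weights = dict(weights)                 # server -> configured weight
        self.current = {s: 0 for s in self.weights}  # running "credit" per server
        self.total = sum(self.weights.values())

    def pick(self):
        # Every server earns its weight in credit each round...
        for server, weight in self.weights.items():
            self.current[server] += weight
        # ...the richest one is chosen and pays back the total weight.
        best = max(self.current, key=self.current.get)
        self.current[best] -= self.total
        return best


class LeastResponseTime:
    """Pick the server with the lowest smoothed (EWMA) response time."""

    def __init__(self, servers, alpha=0.3):
        self.alpha = alpha                           # weight of the newest sample
        self.avg_ms = {s: None for s in servers}     # None = no sample yet

    def pick(self):
        # Try every server at least once before comparing averages.
        untried = [s for s, avg in self.avg_ms.items() if avg is None]
        if untried:
            return untried[0]
        return min(self.avg_ms, key=self.avg_ms.get)

    def record(self, server, elapsed_ms):
        old = self.avg_ms[server]
        if old is None:
            self.avg_ms[server] = elapsed_ms
        else:
            self.avg_ms[server] = self.alpha * elapsed_ms + (1 - self.alpha) * old


swrr = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
print("".join(swrr.pick() for _ in range(7)))  # aabacaa

lrt = LeastResponseTime(["app1", "app2"])
lrt.record("app1", 120.0)
lrt.record("app2", 45.0)
print(lrt.pick())  # app2
```

Over any seven consecutive picks, server `a` is chosen five times and `b` and `c` once each, matching the 5:1:1 weights without ever sending `a` five requests back to back.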

3. Cloud-Native Load Balancing

Major cloud providers offer built-in load balancing services tailored to their ecosystems:

- AWS Elastic Load Balancing (ELB): Offers Application Load Balancers (ALB) for HTTP/HTTPS traffic and Network Load Balancers (NLB) for ultra-low-latency TCP/UDP traffic.

- Google Cloud Load Balancing: Offers global load balancers that use a single anycast IP address to distribute traffic across regions.

- Azure Load Balancer: Integrates with other Azure services and distributes traffic at layer 4 (transport layer).

These services automate scaling and health checks, and most also offer SSL/TLS termination (Azure Load Balancer, being layer 4 only, does not), reducing operational overhead for cloud-native applications.
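Automated health checks usually avoid reacting to a single failed probe: a target leaves rotation only after several consecutive failures and returns only after several consecutive successes. The sketch below shows that idea, similar in spirit to the healthy/unhealthy threshold settings managed services such as ELB target groups expose; the `HealthChecker` class and its default thresholds are hypothetical, and probing itself (HTTP requests, timeouts) is left out.

```python
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    healthy: bool = True
    streak: int = 0  # consecutive probe results contradicting the current state


class HealthChecker:
    """Flip a target's state only after enough consecutive results agree,
    so one slow or lost probe does not evict a working server."""

    def __init__(self, targets, healthy_threshold=3, unhealthy_threshold=2):
        self.targets = {name: Target(name) for name in targets}
        self.healthy_threshold = healthy_threshold
        self.unhealthy_threshold = unhealthy_threshold

    def report(self, name, probe_ok):
        t = self.targets[name]
        if probe_ok == t.healthy:
            t.streak = 0  # result agrees with the current state; reset the count
            return
        t.streak += 1
        limit = self.unhealthy_threshold if t.healthy else self.healthy_threshold
        if t.streak >= limit:
            t.healthy = not t.healthy
            t.streak = 0

    def in_rotation(self):
        return [name for name, t in self.targets.items() if t.healthy]


hc = HealthChecker(["app1", "app2"])
hc.report("app2", False)
print(hc.in_rotation())  # ['app1', 'app2'] (one failure is not enough)
hc.report("app2", False)
print(hc.in_rotation())  # ['app1']
```

The asymmetric defaults (two failures to remove, three successes to restore) are an assumption chosen to illustrate the trade-off: fail fast, recover cautiously.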

4. DNS-Based Load Balancing

DNS load balancing distributes requests by resolving a domain name to multiple IP addresses. While simple to implement, it offers little control over how traffic is split, and because resolvers and clients cache answers for the record's TTL (some even longer), changes and failovers take effect slowly. Managed DNS services such as Amazon Route 53 or Cloudflare mitigate these limitations with health-checked failover and latency-based routing.
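The caching problem is easiest to see in a small simulation. The sketch below assumes a plain round-robin authoritative server that rotates its A records on every query, and a recursive resolver that honours the TTL; both classes, the documentation-range addresses, and the assumption that clients connect to the first address returned are illustrative simplifications.

```python
import itertools


class RoundRobinDNS:
    """Authoritative side: return all A records, rotated one step per query."""

    def __init__(self, addresses, ttl):
        self.addresses = list(addresses)
        self.ttl = ttl
        self._queries = itertools.count()

    def resolve(self, name):
        k = next(self._queries) % len(self.addresses)
        return self.addresses[k:] + self.addresses[:k], self.ttl


class CachingResolver:
    """Recursive side: reuse a cached answer until its TTL expires."""

    def __init__(self, upstream):
        self.upstream = upstream
        self.cache = {}  # name -> (answer, expires_at)

    def resolve(self, name, now):
        hit = self.cache.get(name)
        if hit and now < hit[1]:
            return hit[0]
        answer, ttl = self.upstream.resolve(name)
        self.cache[name] = (answer, now + ttl)
        return answer


auth = RoundRobinDNS(["192.0.2.10", "192.0.2.11", "192.0.2.12"], ttl=300)
resolver = CachingResolver(auth)
# Assume each client connects to the first address in the answer.
for t in (0, 60, 120, 301):
    print(t, resolver.resolve("www.example.com", now=t)[0])
# 0 192.0.2.10
# 60 192.0.2.10
# 120 192.0.2.10
# 301 192.0.2.11
```

Every client behind this resolver lands on the same address for the full 300-second TTL, which is why plain DNS round robin gives only coarse control over distribution and slow failover.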

5. Global Server Load Balancing (GSLB)

GSLB extends load balancing to a global scale, directing users to the nearest or least congested data center. Products such as F5 BIG-IP DNS (formerly BIG-IP Global Traffic Manager) or Citrix ADC combine geolocation, latency measurements, and server health data to decide where each user's traffic should go. This is critical for multinational enterprises and content delivery networks (CDNs) that need to keep latency low.
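A GSLB decision can be reduced to "nearest site that is healthy and not saturated". The sketch below uses great-circle (haversine) distance as a stand-in for geolocation-based proximity; the `DataCenter` fields, the site coordinates, and the `max_load` cut-off of 0.8 are hypothetical, and real products also weigh measured latency rather than distance alone.

```python
import math
from dataclasses import dataclass


@dataclass
class DataCenter:
    name: str
    lat: float
    lon: float
    healthy: bool
    load: float  # fraction of capacity in use, 0.0 to 1.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points on Earth, in kilometres."""
    r = 6371.0  # mean Earth radius
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def choose_site(user_lat, user_lon, sites, max_load=0.8):
    """Nearest healthy site with headroom; if every healthy site is above
    max_load, fall back to the least-loaded healthy one."""
    healthy = [s for s in sites if s.healthy]
    if not healthy:
        raise RuntimeError("no healthy data center")
    with_headroom = [s for s in healthy if s.load < max_load]
    if with_headroom:
        return min(with_headroom,
                   key=lambda s: haversine_km(user_lat, user_lon, s.lat, s.lon))
    return min(healthy, key=lambda s: s.load)


sites = [
    DataCenter("frankfurt", 50.11, 8.68, healthy=True, load=0.95),
    DataCenter("dublin", 53.35, -6.26, healthy=True, load=0.40),
    DataCenter("virginia", 38.95, -77.45, healthy=True, load=0.30),
]
# A user in Paris: Frankfurt is closest but saturated, so Dublin is chosen.
print(choose_site(48.86, 2.35, sites).name)  # dublin
```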

6. Application-Layer Load Balancing

Modern applications often require context-aware routing. Layer 7 (application layer) load balancers inspect HTTP headers, cookies, or URLs to make routing decisions. For example, an e-commerce platform might route mobile users (identified by the User-Agent header) to lightweight servers while directing API traffic to high-performance nodes. In container orchestration environments, Kubernetes Ingress controllers (e.g., ingress-nginx or Traefik) and service-mesh gateways such as Istio's ingress gateway exemplify this approach.
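The e-commerce example above translates into a short chain of rules evaluated in order. This is a minimal sketch assuming hypothetical pool names (`api-pool`, `mobile-pool`, and so on), a hypothetical `canary` cookie, header names already normalised to one casing, and deliberately crude User-Agent matching; production Ingress rules are declared in configuration rather than code.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    path: str
    headers: dict = field(default_factory=dict)  # assumes normalised header names
    cookies: dict = field(default_factory=dict)


# Hypothetical substrings treated as "mobile" for this illustration.
MOBILE_MARKERS = ("Android", "iPhone", "iPad")


def route(req: Request) -> str:
    """Return the name of the backend pool that should serve this request."""
    # 1. URL-based: API calls go to the high-performance pool.
    if req.path.startswith("/api/"):
        return "api-pool"
    # 2. Cookie-based: users who opted into a canary get the new release.
    if req.cookies.get("canary") == "1":
        return "canary-pool"
    # 3. Header-based: mobile clients go to lighter servers.
    user_agent = req.headers.get("User-Agent", "")
    if any(marker in user_agent for marker in MOBILE_MARKERS):
        return "mobile-pool"
    return "web-pool"


print(route(Request("/api/v1/orders")))  # api-pool
print(route(Request("/", headers={"User-Agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0)"})))  # mobile-pool
print(route(Request("/")))  # web-pool
```

Rule order matters: an API call from a phone still lands in `api-pool` because the path rule is checked first.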

7. Dynamic Load Balancing with AI/ML

Emerging solutions apply machine learning to predict traffic patterns and adjust routing in real time. Products such as Avi Networks (now part of VMware) analyze historical data to anticipate spikes and add capacity before bottlenecks form. Some of these systems also tune their balancing algorithms automatically, which helps with unpredictable workloads such as streaming services or IoT networks.
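The core idea of anticipating spikes, forecasting the next interval's load and provisioning for it ahead of time, can be shown without a full ML model. The sketch below swaps in a classical statistical forecaster, Holt's linear (double) exponential smoothing, as a simple stand-in; it is not how Avi or any other product works. The `alpha`/`beta` values, `rps_per_server`, and the 20% `headroom` margin are hypothetical parameters.

```python
import math


class HoltForecaster:
    """Holt's linear exponential smoothing: tracks a level and a trend so it
    can extrapolate a rising request rate one or more steps ahead."""

    def __init__(self, alpha=0.5, beta=0.3):
        self.alpha, self.beta = alpha, beta  # smoothing for level and trend
        self.level = None
        self.trend = 0.0

    def update(self, observed):
        if self.level is None:
            self.level = observed  # first sample initialises the level
            return
        prev_level = self.level
        self.level = self.alpha * observed + (1 - self.alpha) * (self.level + self.trend)
        self.trend = self.beta * (self.level - prev_level) + (1 - self.beta) * self.trend

    def forecast(self, steps=1):
        return self.level + steps * self.trend


def servers_needed(predicted_rps, rps_per_server, headroom=1.2):
    """Servers to provision for the predicted load, with a safety margin."""
    return max(1, math.ceil(predicted_rps * headroom / rps_per_server))


f = HoltForecaster()
for rps in [100, 120, 145, 170, 200]:  # requests per second, one sample per minute
    f.update(rps)
nxt = f.forecast()
print(round(nxt), servers_needed(nxt, rps_per_server=50))  # 201 5
```

Scaling on the forecast rather than the current reading gives new capacity time to come online before the spike arrives; an ML-based system replaces the forecaster, not this overall loop.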

8. Peer-to-Peer (P2P) Load Balancing

In decentralized systems, P2P load balancing distributes tasks across nodes without a central coordinator. Blockchain networks and edge computing frameworks use this approach to improve scalability and fault tolerance. Although it is more complex to manage, it removes the central load balancer as a single point of failure.
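One widely used way for peers to agree on who handles what without a coordinator is consistent hashing, the technique behind many distributed hash tables; it is named here as an illustrative choice, not as the method any particular blockchain or edge framework uses. Every node can build the same ring independently from the peer list, and when a peer leaves, only the keys it owned move. The class name, the `peer#i` virtual-node labels, and `vnodes=100` are hypothetical.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class ConsistentHashRing:
    """Peers are placed on a hash ring at several virtual points each; a key
    belongs to the first peer point clockwise from the key's hash."""

    def __init__(self, peers=(), vnodes=100):
        self.vnodes = vnodes
        self._points = []  # sorted ring positions
        self._owner = {}   # ring position -> peer (assumes no 64-bit collisions)
        for peer in peers:
            self.add(peer)

    def add(self, peer):
        for i in range(self.vnodes):
            h = _hash(f"{peer}#{i}")
            bisect.insort(self._points, h)
            self._owner[h] = peer

    def remove(self, peer):
        for i in range(self.vnodes):
            h = _hash(f"{peer}#{i}")
            self._points.remove(h)
            del self._owner[h]

    def lookup(self, key):
        if not self._points:
            raise LookupError("ring is empty")
        # Wrap around to the first point when the key hashes past the last one.
        i = bisect.bisect(self._points, _hash(key)) % len(self._points)
        return self._owner[self._points[i]]


ring = ConsistentHashRing(["peer-a", "peer-b", "peer-c"])
keys = [f"task-{n}" for n in range(1000)]
before = {k: ring.lookup(k) for k in keys}
ring.remove("peer-b")
moved = sum(before[k] != ring.lookup(k) for k in keys)
# Only tasks that lived on peer-b move; every other assignment stays put.
print(moved == sum(v == "peer-b" for v in before.values()))  # True
```

The virtual nodes spread each peer over many small arcs of the ring, so load stays roughly even and a departing peer's work is shared among the survivors instead of landing on a single neighbour.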

Challenges and Future Trends

Despite advancements, load balancing faces challenges like handling stateful applications, securing east-west traffic in microservices, and adapting to 5G/edge computing demands. Future innovations may focus on intent-based networking, where systems autonomously align traffic routing with business objectives.

From hardware appliances to AI-driven platforms, load balancing technologies continue to evolve, driven by the need for speed, reliability, and scalability. Organizations must evaluate their infrastructure requirements, workload types, and budget constraints to choose the optimal solution. As hybrid and multi-cloud architectures dominate, adaptive, intelligent load balancing will remain pivotal in delivering seamless digital experiences.