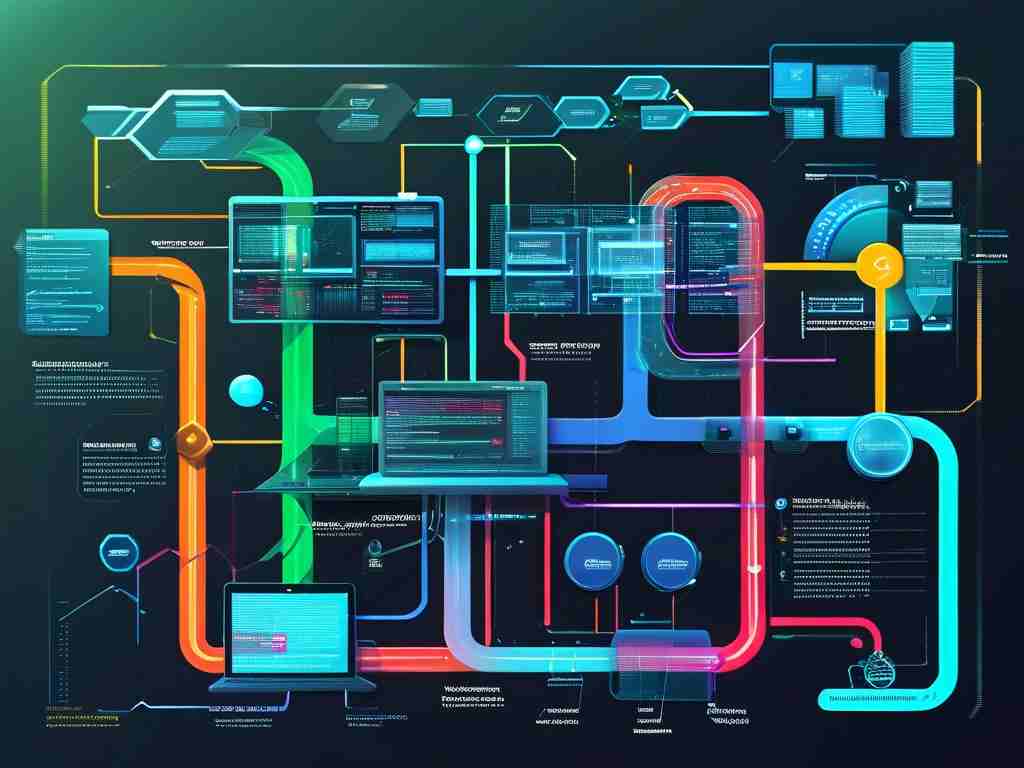

In modern distributed computing environments, server load balancing (SLB) has become an indispensable component for ensuring high availability and optimal resource utilization. This technology distributes network traffic across multiple servers to prevent overload on any single node, thereby enhancing system stability and responsiveness. Let's explore how SLB achieves these objectives through its architectural design and operational mechanisms.

At its foundation, SLB acts as an intermediary between clients and backend servers. When a user initiates a request, it first reaches the load balancer rather than connecting directly to a specific server. The load balancer then applies a selection algorithm to choose the backend that should handle that request. Depending on the algorithm and its configuration, the decision can take into account real-time server health, current connection counts, and predefined distribution policies such as server weights.

One critical aspect of SLB technology lies in its health monitoring capabilities. Modern load balancers periodically probe each server, for example with HTTP/HTTPS GET requests to a health-check endpoint, TCP connection attempts, or ICMP pings. If a server does not respond within the configured timeout, or fails a configured number of consecutive checks, the load balancer automatically stops sending it traffic and reroutes requests to the remaining healthy nodes. This failover mechanism keeps the service available during hardware failures or maintenance windows. In cloud environments, SLB systems also often integrate with auto-scaling groups, so the server pool grows or shrinks with traffic patterns.
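The consecutive-failure logic behind this failover can be sketched in plain Python. This is a minimal illustration, not how any particular product works: the `fall`/`rise` threshold names are borrowed loosely from HAProxy's server options, the `/health` URL is an assumed endpoint, and the HTTP probe uses only the standard library's `urllib.request`.

```python
import http.client
import urllib.request


def http_probe(url, timeout=2.0):
    """Return True if a GET to `url` answers with a 2xx status before `timeout`."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (OSError, http.client.HTTPException):
        # Covers refused connections, timeouts, DNS errors, non-2xx responses
        # (urllib raises HTTPError for those) and malformed HTTP replies.
        return False


class HealthTracker:
    """Mark servers down/up only after several consecutive probe results.

    `fall` and `rise` are hypothetical threshold names; requiring a streak
    keeps a single slow or lost probe from flapping a server's state.
    """

    def __init__(self, servers, fall=3, rise=2):
        self.fall = fall  # consecutive failures before a server is marked down
        self.rise = rise  # consecutive successes before it is marked up again
        self.healthy = {s: True for s in servers}
        self.fail_streak = {s: 0 for s in servers}
        self.ok_streak = {s: 0 for s in servers}

    def record(self, server, ok):
        if ok:
            self.ok_streak[server] += 1
            self.fail_streak[server] = 0
            if self.ok_streak[server] >= self.rise:
                self.healthy[server] = True
        else:
            self.fail_streak[server] += 1
            self.ok_streak[server] = 0
            if self.fail_streak[server] >= self.fall:
                self.healthy[server] = False

    def healthy_servers(self):
        """Servers that should currently receive traffic."""
        return [s for s, up in self.healthy.items() if up]


def run_checks(tracker, probe=http_probe):
    """Probe every server once; the /health path is an assumed endpoint."""
    for server in tracker.healthy:
        tracker.record(server, probe(f"http://{server}/health"))


# Simulated results instead of real network probes:
tracker = HealthTracker(["192.168.1.10", "192.168.1.11"], fall=3, rise=2)
for _ in range(3):
    tracker.record("192.168.1.11", ok=False)
print(tracker.healthy_servers())  # ['192.168.1.10']
```

Waiting for a streak of results trades a few seconds of detection delay for stability; a single dropped probe does not eject a server, and a recovering server must prove itself more than once before it gets traffic again.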

The algorithms governing traffic distribution vary depending on implementation requirements. Common methods include:

- Round Robin - Cyclically assigns requests to servers in sequence

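- Weighted Distribution - Sends proportionally more requests to servers assigned higher weights, which typically reflect greater capacity

- Least Connections - Directs each new request to the server with the fewest active connections

- Geolocation Routing - Reduces latency by directing clients to geographically nearby nodes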
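The first three strategies can be sketched in a few lines of Python. The server names and weights here are hypothetical, and for weighted distribution the sketch uses the "smooth" weighted round-robin variant, which interleaves a heavy server's turns instead of sending them in one burst; it is one of several possible designs. Geolocation routing is left out because it needs external location data.

```python
import itertools


class RoundRobin:
    """Cycle through servers in a fixed order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class SmoothWeightedRoundRobin:
    """Weighted round robin that spreads picks out evenly.

    On every pick each server's running score grows by its weight; the
    highest-scoring server wins and has the total weight subtracted, so over
    sum(weights) picks each server is chosen exactly `weight` times.
    """

    def __init__(self, weights):
        self.weights = dict(weights)
        self.current = {s: 0 for s in self.weights}
        self.total = sum(self.weights.values())

    def pick(self):
        for s, w in self.weights.items():
            self.current[s] += w
        best = max(self.current, key=self.current.get)  # ties go to the first server
        self.current[best] -= self.total
        return best


def least_connections(active_conns):
    """Return the server with the fewest active connections."""
    return min(active_conns, key=active_conns.get)


rr = RoundRobin(["192.168.1.10", "192.168.1.11", "192.168.1.12"])
print([rr.pick() for _ in range(4)])
# ['192.168.1.10', '192.168.1.11', '192.168.1.12', '192.168.1.10']

wrr = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
print([wrr.pick() for _ in range(7)])  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']

print(least_connections({"192.168.1.10": 12, "192.168.1.11": 8, "192.168.1.12": 15}))
# 192.168.1.11
```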

A practical implementation might combine these strategies. Consider an e-commerce platform during peak sales periods:

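```python
import random

# Simplified example of weighted load balancing that also accounts for load:
# each server's effective weight is its configured weight divided by
# (active connections + 1), so busier servers are chosen less often.
servers = [
    {"ip": "192.168.1.10", "weight": 5, "active_conn": 12},
    {"ip": "192.168.1.11", "weight": 3, "active_conn": 8},
    {"ip": "192.168.1.12", "weight": 2, "active_conn": 15},
]


def effective_weight(srv):
    # +1 avoids division by zero for an idle server
    return srv["weight"] / (srv["active_conn"] + 1)


def select_server():
    # Weighted random choice: draw a point in [0, total] and find the
    # server whose cumulative-weight interval contains it.
    total_weight = sum(effective_weight(srv) for srv in servers)
    rand = random.uniform(0, total_weight)
    current = 0.0
    for srv in servers:
        current += effective_weight(srv)
        if rand <= current:
            return srv["ip"]
    # Guard against floating-point rounding leaving rand just above the final sum
    return servers[-1]["ip"]
```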
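To see what this weighting does with the sample data, note that each server's selection probability is its effective weight, `weight / (active_conn + 1)`, divided by the sum of all effective weights. The short sketch below computes these probabilities exactly with `fractions.Fraction`, reusing the three sample servers; `selection_probabilities` is a hypothetical helper, not part of the original example.

```python
from fractions import Fraction

# Same sample pool as the weighted example
servers = [
    {"ip": "192.168.1.10", "weight": 5, "active_conn": 12},
    {"ip": "192.168.1.11", "weight": 3, "active_conn": 8},
    {"ip": "192.168.1.12", "weight": 2, "active_conn": 15},
]


def selection_probabilities(pool):
    """Map each server IP to its exact probability of being chosen."""
    eff = {s["ip"]: Fraction(s["weight"], s["active_conn"] + 1) for s in pool}
    total = sum(eff.values())
    return {ip: w / total for ip, w in eff.items()}


for ip, p in selection_probabilities(servers).items():
    print(ip, p, f"{float(p):.1%}")
```

This prints 120/263 (45.6%), 104/263 (39.5%), and 39/263 (14.8%). Although 192.168.1.10 has the highest configured weight, its 12 open connections pull its share down to within a few points of 192.168.1.11, which is carrying fewer connections.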

Beyond basic traffic management, advanced SLB implementations incorporate security features. Many modern solutions integrate a Web Application Firewall (WAF) to filter malicious HTTP requests, along with DDoS protection that absorbs attack traffic before it reaches application servers. This dual role of optimizing performance while enhancing security makes SLB a cornerstone of contemporary network architecture.

In microservices environments, SLB plays a particularly vital role. As containerized applications scale horizontally across clusters, load balancers handle service discovery and routing among short-lived instances. Kubernetes Ingress illustrates this pattern: an Ingress resource declares HTTP routing rules, and an Ingress controller (such as ingress-nginx, which must be installed separately) implements them, updating its backend lists as pods are created or terminated.
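The core idea of routing among ephemeral instances can be illustrated without Kubernetes itself. The sketch below is a hypothetical in-memory registry, not a Kubernetes API: instances register when they start and deregister when they stop, and the router only ever picks among currently registered endpoints, rotating through them round-robin.

```python
import threading


class ServiceRegistry:
    """Hypothetical in-memory registry mapping a service name to live endpoints."""

    def __init__(self):
        self._lock = threading.Lock()
        self._endpoints = {}  # service name -> list of "host:port" strings
        self._next = {}       # service name -> round-robin counter

    def register(self, service, endpoint):
        # Called when an instance starts (a controller would do this on pod creation)
        with self._lock:
            eps = self._endpoints.setdefault(service, [])
            if endpoint not in eps:
                eps.append(endpoint)

    def deregister(self, service, endpoint):
        # Called when an instance stops, so it is never routed to again
        with self._lock:
            eps = self._endpoints.get(service, [])
            if endpoint in eps:
                eps.remove(endpoint)

    def route(self, service):
        """Return the next live endpoint for `service`, or None if none are registered."""
        with self._lock:
            eps = self._endpoints.get(service)
            if not eps:
                return None
            i = self._next.get(service, 0) % len(eps)
            self._next[service] = i + 1
            return eps[i]


reg = ServiceRegistry()
reg.register("cart", "10.0.0.5:8080")
reg.register("cart", "10.0.0.6:8080")
print([reg.route("cart") for _ in range(3)])
# ['10.0.0.5:8080', '10.0.0.6:8080', '10.0.0.5:8080']
```

The lock matters because registration events and routing decisions arrive concurrently in a real system; without it, a request could be routed using a half-updated endpoint list.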

However, implementing SLB introduces its own design considerations. Administrators must carefully configure session persistence (sticky sessions) when dealing with stateful applications. Techniques like cookie injection or source IP affinity send subsequent requests from the same client to the same backend server, preserving session state that exists only on that server.
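Both persistence techniques can be sketched in a few lines. This is an illustration under stated assumptions: the cookie name `SERVERID` and the helper names are hypothetical, requests are modeled as plain dictionaries rather than a real HTTP framework, and source IP affinity uses a simple hash modulo the pool size, which reassigns many clients whenever servers are added or removed (consistent hashing is a common way to reduce that churn).

```python
import hashlib

BACKENDS = ["192.168.1.10", "192.168.1.11", "192.168.1.12"]
COOKIE_NAME = "SERVERID"  # hypothetical cookie name


def pick_by_source_ip(client_ip, backends=BACKENDS):
    """Source IP affinity: the same client IP always maps to the same backend."""
    # hashlib instead of built-in hash(): str hashing is randomized per process,
    # which would break affinity across load balancer restarts or replicas.
    digest = hashlib.sha256(client_ip.encode()).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


def pick_with_cookie(cookies, client_ip, backends=BACKENDS):
    """Cookie injection: honor an affinity cookie if its backend is still in the pool.

    Returns (backend, cookies_to_set). A new cookie is issued only when the
    client has none or its pinned backend has left the pool. A real deployment
    would usually store an opaque server ID rather than a backend IP.
    """
    pinned = cookies.get(COOKIE_NAME)
    if pinned in backends:
        return pinned, {}
    backend = pick_by_source_ip(client_ip, backends)  # any selection policy works here
    return backend, {COOKIE_NAME: backend}
```

Cookie-based persistence survives clients whose IP changes (for example, mobile users switching networks), while source IP affinity works even for non-HTTP traffic where no cookie can be set.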

The evolution of SLB continues with emerging technologies. Software-defined networking (SDN) enables more granular traffic control, while machine learning algorithms are being tested to predict and preemptively balance anticipated load spikes. These advancements promise to further reduce latency and improve resource allocation efficiency.

From content delivery networks to financial transaction systems, SLB's adaptive architecture addresses the growing demands of digital services. By understanding its operational principles, engineers can better design resilient infrastructures capable of scaling with business needs while maintaining seamless user experiences.