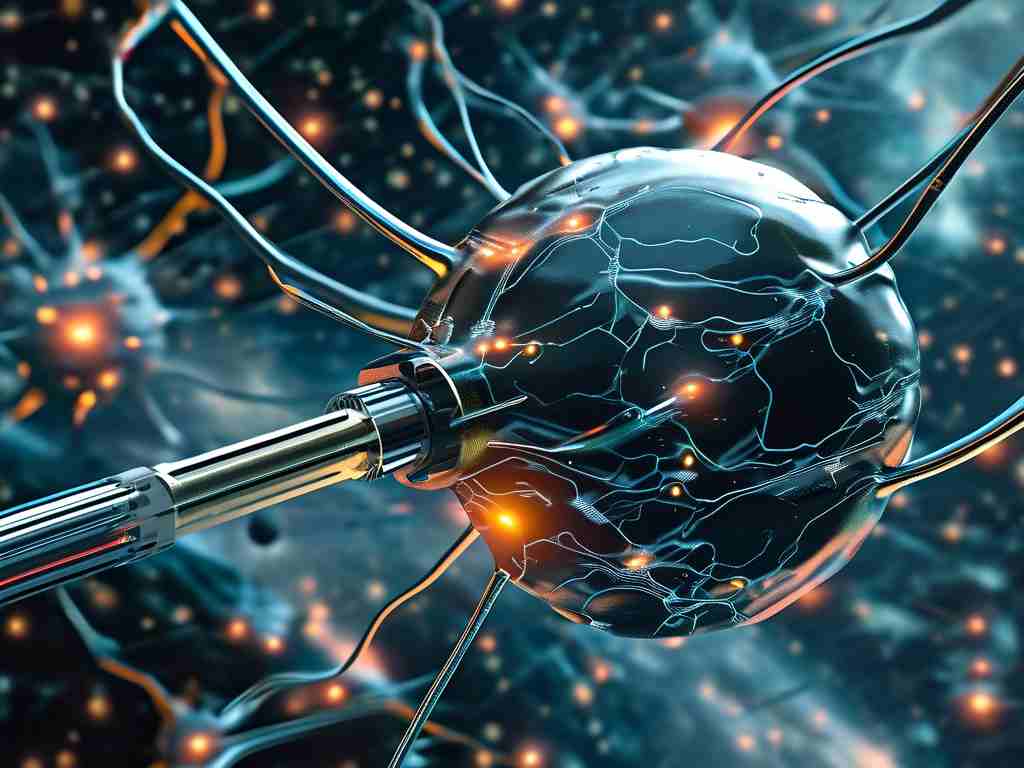

Neural network probes are tools for inspecting the inner workings of artificial intelligence systems. As deep learning models grow more complex, their decision-making processes often resemble black boxes, making it hard for developers and users to trust or debug them. Probes address this by attaching to neural network layers and capturing intermediate data such as activations and gradients while the model runs. This allows detailed analysis without altering the model's core functionality, which improves interpretability and accountability in AI applications.

The fundamental mechanism behind neural network probes involves inserting lightweight monitoring components during training or inference. For instance, in convolutional neural networks used for image recognition, probes can track feature maps to reveal how the model responds to edges or textures. Similarly, in natural language processing models, they might monitor attention weights to help uncover biases in text generation. Because a probe only reads intermediate values and passes them through unchanged, the model's outputs are unaffected, although recording does add some runtime and memory cost. Frameworks like PyTorch provide hooks for this purpose; a simple alternative is a pass-through module placed between layers, as shown in this brief code snippet:

```python
import torch
import torch.nn as nn


class ProbeLayer(nn.Module):
    """Pass-through layer that records the activations flowing through it."""

    def __init__(self):
        super().__init__()
        self.activations = []

    def forward(self, x):
        # Store a detached CPU copy so recording does not affect gradients.
        self.activations.append(x.detach().cpu().numpy())
        return x  # Input is returned unchanged


# Example usage in a neural network
model = nn.Sequential(
    nn.Linear(10, 20),
    ProbeLayer(),  # Probe attached here
    nn.ReLU(),
    nn.Linear(20, 2),
)
```
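
The snippet above inserts a pass-through module into the model. The hook-based variant mentioned earlier could look roughly like the sketch below, which leaves the model definition untouched. The helper name `attach_probes` and its `layer_types` parameter are hypothetical, chosen only for illustration; `register_forward_hook` and `named_modules` are standard PyTorch `nn.Module` methods.

```python
import torch
import torch.nn as nn


def attach_probes(model, layer_types=(nn.Linear,)):
    """Hypothetical helper: hook every matching layer and collect its outputs."""
    activations = {}
    handles = []
    for name, module in model.named_modules():
        if isinstance(module, layer_types):
            # Bind `name` as a default argument so each hook keeps its own key.
            def hook(mod, inputs, output, name=name):
                # Detach so stored tensors do not keep the autograd graph alive.
                activations.setdefault(name, []).append(output.detach().cpu())

            handles.append(module.register_forward_hook(hook))
    return activations, handles


model = nn.Sequential(nn.Linear(10, 20), nn.ReLU(), nn.Linear(20, 2))
activations, handles = attach_probes(model)

with torch.no_grad():
    model(torch.randn(4, 10))

for name, records in activations.items():
    print(name, tuple(records[0].shape))

# Remove the hooks once monitoring is finished so they stop recording.
for handle in handles:
    handle.remove()
```

Because the hooks are registered from outside, the same approach can be applied to a pretrained model without editing its code, and removing the handles returns the model to its original behavior.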

Applications of neural network probes span diverse domains, including healthcare diagnostics and autonomous vehicles. In medical imaging, probes can help validate AI predictions by highlighting the regions of a scan that drive a prediction, which can help reduce diagnostic errors. For self-driving cars, they can flag anomalies in perception systems, improving safety by identifying when a model might misinterpret road signs. Probes also play a role in adversarial defense, where they can spot subtle input manipulations designed to fool a model. Growing regulatory demand for explainable AI further raises their importance, as probes offer a practical path toward compliance without sacrificing model accuracy.

Despite their benefits, neural network probes face significant challenges that researchers are actively tackling. One major hurdle is computational efficiency; adding probes can increase inference latency, especially in resource-constrained environments like edge devices. This necessitates optimization techniques, such as pruning redundant probes or using approximate monitoring methods. Another issue is the risk of misinterpreted data, where noisy or incomplete probe outputs lead to false conclusions about model behavior. To mitigate this, hybrid approaches combine probes with other interpretability tools like SHAP values, creating a more robust analysis framework. Additionally, ethical considerations arise, as probes could expose sensitive training data if not secured properly, highlighting the need for privacy-preserving designs.
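
As one possible reading of "approximate monitoring", a probe could record a few summary statistics on a sampled subset of forward passes instead of storing every full activation tensor. The sketch below is an assumption about how that might be done, not a method described above; the class name `SummaryProbe`, the `every` parameter, and the chosen statistics are all hypothetical.

```python
import torch
import torch.nn as nn


class SummaryProbe(nn.Module):
    """Hypothetical pass-through probe: logs cheap statistics every `every` calls."""

    def __init__(self, every=10):
        super().__init__()
        self.every = every
        self.calls = 0
        self.stats = []

    def forward(self, x):
        if self.calls % self.every == 0:
            with torch.no_grad():
                self.stats.append({
                    "call": self.calls,
                    "mean": x.mean().item(),
                    "std": x.std().item(),
                    # Fraction of exactly-zero values, e.g. after a ReLU.
                    "frac_zero": (x == 0).float().mean().item(),
                })
        self.calls += 1
        return x  # Input is returned unchanged


probe = SummaryProbe(every=10)
model = nn.Sequential(nn.Linear(10, 20), nn.ReLU(), probe, nn.Linear(20, 2))

with torch.no_grad():
    for _ in range(25):
        model(torch.randn(8, 10))

print(len(probe.stats))  # 3 records, taken at calls 0, 10 and 20
```

Storing a handful of scalars per sampled call keeps memory use roughly constant, at the cost of losing per-unit detail. On a GPU, each `.item()` call forces a device synchronization, so a larger `every` reduces the latency impact further.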

Looking ahead, the evolution of neural network probes promises exciting advancements. Integration with emerging technologies like federated learning could enable distributed probing across decentralized systems, enhancing scalability. Innovations in probe design, such as adaptive probes that self-configure based on model complexity, might reduce manual intervention. Ultimately, as AI systems become more pervasive, probes will be indispensable for building trustworthy, transparent models that align with human values. By fostering a deeper understanding of neural networks, these tools empower developers to innovate responsibly, ensuring AI serves society effectively and ethically.