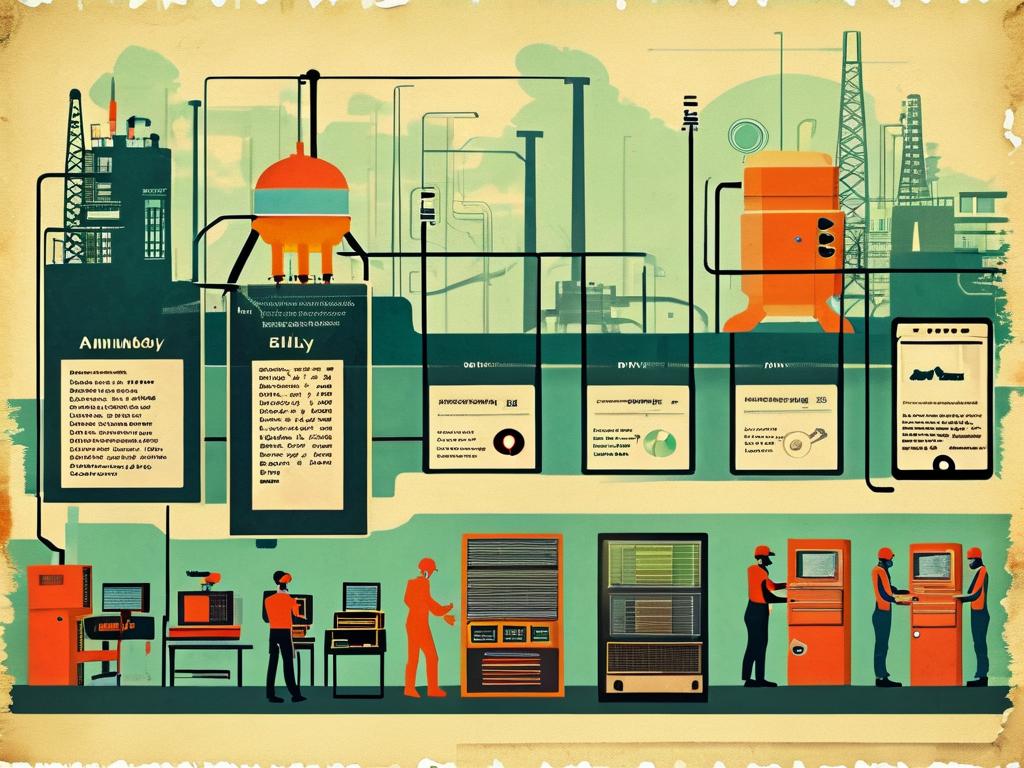

The evolution of automated deployment traces how software delivery moved from cumbersome manual effort to efficient, largely hands-off systems. In the early days of computing, developers relied entirely on hands-on methods. In the 1960s and 1970s, deploying an application meant physical steps such as loading punch cards or tapes onto mainframes. Teams spent hours transferring and debugging code by hand, which led to frequent errors and delays. Deployment automation was essentially absent, because the focus was on building basic programs rather than on streamlining their delivery.

By the 1980s, the first glimmers of automation had taken hold through scripting. Developers used simple shell scripts on Unix systems to automate repetitive tasks. For instance, a shell script (Bourne shell for most of the decade, with Bash arriving in 1989) could handle file transfers or basic compilation, reducing human intervention. This shift marked the initial step toward efficiency, though it remained rudimentary. Scripts were usually custom-built and tended to fail when the environment changed, which highlighted the need for more robust solutions. Build tools played a part as well: Make, first released in 1976, was in wide use by this time and automated compilation, but the deployment phase still required significant manual oversight. This period laid the groundwork by establishing that machines could handle routine work, freeing developers for innovation.

The 1990s brought a surge in complexity with the internet boom, which demanded faster deployment cycles. Continuous integration (CI) principles took shape in this decade: Grady Booch used the term in 1991, Extreme Programming popularized the practice in the late 1990s, and companies like Microsoft were known for running daily builds. Dedicated tooling followed at the turn of the millennium. Apache Ant (2000) allowed more structured automation in Java environments: Ant build files could compile code, run tests, and package applications, yet getting the result onto servers often still involved manual steps. CruiseControl (2001) automated building and testing code after each commit, but full deployment automation remained elusive. The challenges included inconsistent environments and a lack of standardization, which often led to "it works on my machine" issues. Despite these hurdles, momentum grew as businesses realized that automation could cut costs and accelerate releases.

From the mid-2000s onward, the landscape shifted dramatically with the rise of continuous deployment and DevOps culture. Hudson, an open-source automation server released in 2005 and forked as Jenkins in 2011, became a game-changer by enabling end-to-end pipelines. Developers could now define workflows that automated building, testing, and deploying code to staging or production environments. This era also saw the spread of distributed version control systems like Git (2005), which integrated with CI tools so that code changes triggered automated processes. Cloud computing platforms such as Amazon Web Services (AWS), whose EC2 service launched in 2006, further changed deployment by providing scalable infrastructure that could be managed via APIs. For example, using a simple script, teams could spin up virtual machines and deploy applications in minutes. The period emphasized collaboration between development and operations teams, fostering the DevOps movement (the term dates to 2009), which treats automation as a core tenet for reliability and speed.
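The build, test, deploy workflow described above can be illustrated with a minimal sketch. This is not how Jenkins or any particular CI server is implemented; the function `run_pipeline` and the stage functions `build`, `test`, and `deploy_staging` are hypothetical names chosen for illustration. The sketch only shows the core idea that stages run in a fixed order and the pipeline stops at the first failure, so broken code never reaches the deploy step.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, action in stages:
        if not action():
            print(f"stage '{name}' failed; stopping pipeline")
            break
        completed.append(name)
    return completed


# Hypothetical stage functions; a real pipeline would invoke a
# compiler, a test runner, and a deployment tool here.
def build() -> bool:
    return True


def test() -> bool:
    return True


def deploy_staging() -> bool:
    return True


if __name__ == "__main__":
    finished = run_pipeline(
        [("build", build), ("test", test), ("deploy", deploy_staging)]
    )
    print("completed stages:", finished)
```

In a real setup, a commit to version control would trigger a call like `run_pipeline`, and a failing test stage would prevent the deploy stage from running at all.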

In recent years, automation has grown considerably more sophisticated with containerization and infrastructure-as-code (IaC). Technologies like Docker (2013) allow applications to be packaged with their dependencies, which helps ensure consistency across environments. Orchestration tools such as Kubernetes (2014) automate the deployment, scaling, and management of containers, and large clusters can run thousands of them. IaC tools like Terraform or Ansible let teams define infrastructure through code, making deployments repeatable and version-controlled. For example, running `terraform apply` provisions the cloud resources declared in a configuration, replacing manual setup. Modern CI/CD platforms like GitLab CI or GitHub Actions integrate these elements, offering pipelines that deploy code with minimal human input. The current trend leans toward cloud-native architectures, where microservices and serverless computing automate much of the deployment process, in some cases assisted by machine learning for tasks such as predictive scaling.
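The declarative idea behind infrastructure-as-code can be sketched in a few lines. This is a toy illustration, not Terraform's or Ansible's actual algorithm: the function `plan` and the resource names are assumptions made up for this example. It compares a desired state (what the code declares) with an actual state (what currently exists) and lists the actions needed to reconcile them.

```python
from typing import Dict, List, Tuple


def plan(
    desired: Dict[str, dict], actual: Dict[str, dict]
) -> List[Tuple[str, str]]:
    """Compute the actions that turn `actual` into `desired`.

    Each action is an (operation, resource_name) pair.
    """
    actions: List[Tuple[str, str]] = []
    # Create or update everything that is declared.
    for name in sorted(desired):
        if name not in actual:
            actions.append(("create", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
    # Delete anything that exists but is no longer declared.
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    # Hypothetical resources for illustration only.
    desired = {"web": {"size": "small"}, "db": {"size": "large"}}
    actual = {"web": {"size": "medium"}, "cache": {"size": "small"}}
    for operation, name in plan(desired, actual):
        print(operation, name)
```

Because the plan is derived from the difference between two states, running it again after the changes are applied yields no actions, which is what makes this style of deployment repeatable.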

Reflecting on this journey, the history of automated deployment shows a steady drive toward efficiency: releases that once took days of manual work can now complete in minutes, with far fewer human errors along the way. Future horizons point to AI-driven automation and edge computing, but the core lesson remains that automation empowers innovation by handling the mundane. Each step built on its predecessors, turning deployment from a bottleneck into a strategic asset.