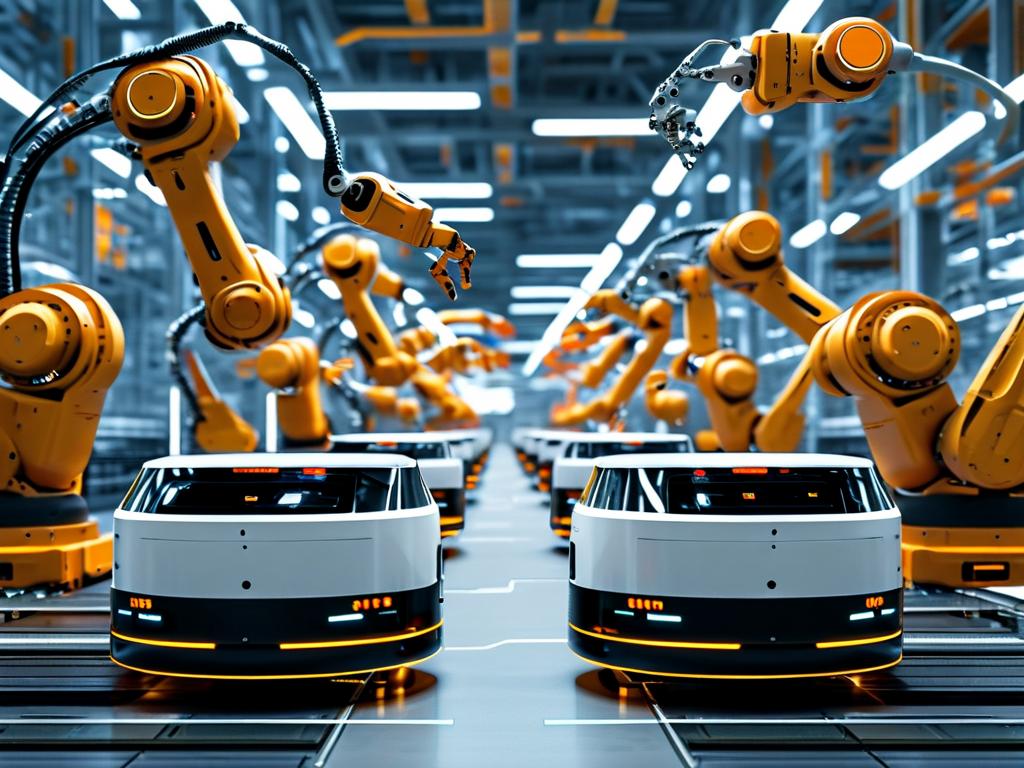

Autonomous Mobile Robots (AMRs) represent a transformative leap in automation, combining advanced hardware and software to navigate dynamic environments without human intervention. Unlike traditional automated guided vehicles (AGVs), which rely on fixed paths or magnetic strips, AMRs leverage real-time data processing, sensor fusion, and adaptive algorithms to operate intelligently. This article explores the underlying technologies that empower AMRs, focusing on their navigation systems, decision-making frameworks, and integration capabilities.

At the heart of AMR functionality lies simultaneous localization and mapping (SLAM). This technology enables robots to construct a map of their surroundings while simultaneously tracking their position within it. SLAM algorithms process inputs from LiDAR (Light Detection and Ranging), cameras, ultrasonic sensors, and inertial measurement units (IMUs) to create a coherent spatial understanding. For instance, LiDAR generates precise 3D point clouds to detect obstacles, while RGB-D cameras add depth perception for object recognition. By fusing these data streams, AMRs dynamically adjust their paths in response to moving objects like humans or forklifts.
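The mapping half of this process can be illustrated with a log-odds occupancy grid, one common way to accumulate range readings into a map. The sketch below is a simplification, not a full SLAM implementation: it assumes the robot's grid cell is already known, and the names `integrate_beam`, `L_OCC`, and `L_FREE` and their values are illustrative assumptions rather than anything specified in this article.

```python
import math

# Assumed log-odds increments; real systems tune these per sensor.
L_OCC = 0.85   # evidence added to the cell where the beam ended
L_FREE = -0.4  # evidence added to each cell the beam passed through


def bresenham(x0, y0, x1, y1):
    """Return the integer grid cells on the line from (x0, y0) to (x1, y1)."""
    cells = []
    dx, dy = abs(x1 - x0), abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx - dy
    x, y = x0, y0
    while True:
        cells.append((x, y))
        if (x, y) == (x1, y1):
            break
        e2 = 2 * err
        if e2 > -dy:
            err -= dy
            x += sx
        if e2 < dx:
            err += dx
            y += sy
    return cells


def integrate_beam(grid, robot_cell, hit_cell):
    """Update a sparse log-odds grid (dict: cell -> float) with one range beam."""
    ray = bresenham(*robot_cell, *hit_cell)
    for cell in ray[:-1]:                      # cells the beam crossed look free
        grid[cell] = grid.get(cell, 0.0) + L_FREE
    grid[hit_cell] = grid.get(hit_cell, 0.0) + L_OCC  # beam endpoint looks occupied
    return grid


def probability(log_odds):
    """Convert log-odds back to an occupancy probability in [0, 1]."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


grid = integrate_beam({}, robot_cell=(0, 0), hit_cell=(3, 0))
```

Repeated beams that agree push a cell's value further from zero, so a single spurious reading does not flip a cell from free to occupied.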

Path planning and collision avoidance are critical for AMR efficiency. Once a destination is set, the robot computes a route over a graph or grid representation of the map using a search algorithm such as A* or Dijkstra's algorithm. However, static planning alone is insufficient for unpredictable environments. Modern AMRs therefore layer a reactive local planner, such as the dynamic window approach (DWA) or artificial potential fields, on top of the global route to make real-time adjustments. For example, if a worker steps into an AMR’s path, the robot recalculates its trajectory within its control cycle, balancing speed and safety. The resulting velocity commands are tracked by proportional-integral-derivative (PID) controllers or model predictive control (MPC) systems, which keep motion smooth even during abrupt maneuvers.
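As a rough illustration of the global planning step, here is a minimal A* search on a 4-connected occupancy grid with a Manhattan-distance heuristic. The grid layout and the function name `astar` are assumptions made for this sketch. Replanning from scratch when a cell becomes blocked is also a simplification: it stands in for, and is not the same as, a reactive local planner such as DWA.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (blocked), or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (f = g + h, g, cell)
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            path = [current]
            while current in came_from:        # walk parents back to the start
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue                           # stale heap entry, skip it
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


grid = [[0, 0, 0],
        [0, 0, 0],
        [0, 0, 0]]
path = astar(grid, (0, 0), (0, 2))       # direct route along the top row

grid[0][1] = 1                           # an obstacle appears on that route
replanned = astar(grid, (0, 0), (0, 2))  # detour through the middle row
```

In a real system the global path would be recomputed far less often than the local planner runs, since the local planner handles most short-lived obstacles on its own.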

Another cornerstone of AMR technology is edge computing and connectivity. AMRs often operate as part of a larger fleet managed by a central control system. Over 5G or Wi-Fi 6 links, robots share situational data, such as traffic bottlenecks or task completions, enabling coordinated workflows. Edge computing reduces latency by processing data locally rather than relying solely on cloud servers. This is crucial for time-sensitive tasks, such as rerouting multiple AMRs in a warehouse during peak hours. Additionally, over-the-air (OTA) updates allow operators to refine navigation logic or add new features without physically accessing each robot.

Energy management also plays a pivotal role in AMR design. Most AMRs use lithium-ion batteries due to their high energy density and recharge efficiency. Power-saving techniques, such as regenerative braking or sleep modes during idle periods, extend operational uptime. Some advanced models even autonomously dock at charging stations when battery levels dip below a threshold, ensuring uninterrupted operations in 24/7 facilities.
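The threshold-based docking behavior might look something like the following sketch. The class name `ChargePolicy` and the 20% and 80% thresholds are assumptions chosen for illustration. The second threshold is an added design choice, not something described above: it provides hysteresis, so the robot does not leave the dock the moment it climbs just past the lower limit.

```python
from dataclasses import dataclass


@dataclass
class ChargePolicy:
    """Decide between working and docking from the battery state of charge."""
    dock_below: float = 0.20    # assumed: head to the charger under 20%
    resume_above: float = 0.80  # assumed: return to work at 80% or more
    charging: bool = False

    def update(self, state_of_charge: float) -> str:
        if self.charging:
            # Stay docked until the upper threshold is reached.
            if state_of_charge >= self.resume_above:
                self.charging = False
        elif state_of_charge < self.dock_below:
            self.charging = True
        return "dock" if self.charging else "work"


policy = ChargePolicy()
decisions = [policy.update(soc) for soc in (0.50, 0.19, 0.50, 0.80, 0.50)]
```

Note that the same 50% reading yields a different decision depending on whether the robot is already charging, which is the point of the hysteresis band.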

Despite their sophistication, AMRs face challenges. Sensor occlusion in cluttered spaces can create blind spots, while fluctuating wireless signals may disrupt fleet coordination. To address these issues, researchers are exploring hybrid localization methods that combine visual fiducial markers with probabilistic filters. Furthermore, advancements in machine learning enable AMRs to predict human movement patterns, reducing collision risks in shared spaces.
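One simple instance of such a hybrid scheme is a one-dimensional Kalman filter: odometry drives the prediction step, and an occasional position fix from a fiducial marker drives the correction step. This is a minimal sketch under strong assumptions (a robot moving along a single axis, Gaussian noise, and made-up variances); the function names `predict` and `correct` are illustrative.

```python
def predict(mean, var, motion, motion_var):
    """Odometry step: shift the estimate and grow its uncertainty."""
    return mean + motion, var + motion_var


def correct(mean, var, measurement, measurement_var):
    """Marker step: blend in a position fix, weighted by relative confidence."""
    gain = var / (var + measurement_var)       # Kalman gain in [0, 1]
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var


# Start at 0 m with variance 1.0, then drive forward about 1 m.
mean, var = predict(0.0, 1.0, motion=1.0, motion_var=0.5)

# A fiducial marker reports the robot at 1.2 m with variance 0.5.
mean, var = correct(mean, var, measurement=1.2, measurement_var=0.5)
```

After the correction the estimate sits between the odometry prediction and the marker reading, and its variance is lower than either source alone would give.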

In summary, AMRs rely on a synergy of cutting-edge technologies, from SLAM and adaptive pathfinding to robust connectivity and energy systems. As industries adopt these robots for logistics, healthcare, and manufacturing, continued innovation in AI and sensor technology will further enhance their autonomy and reliability. By understanding these technical principles, businesses can better integrate AMRs into their operations, unlocking new levels of productivity and flexibility.