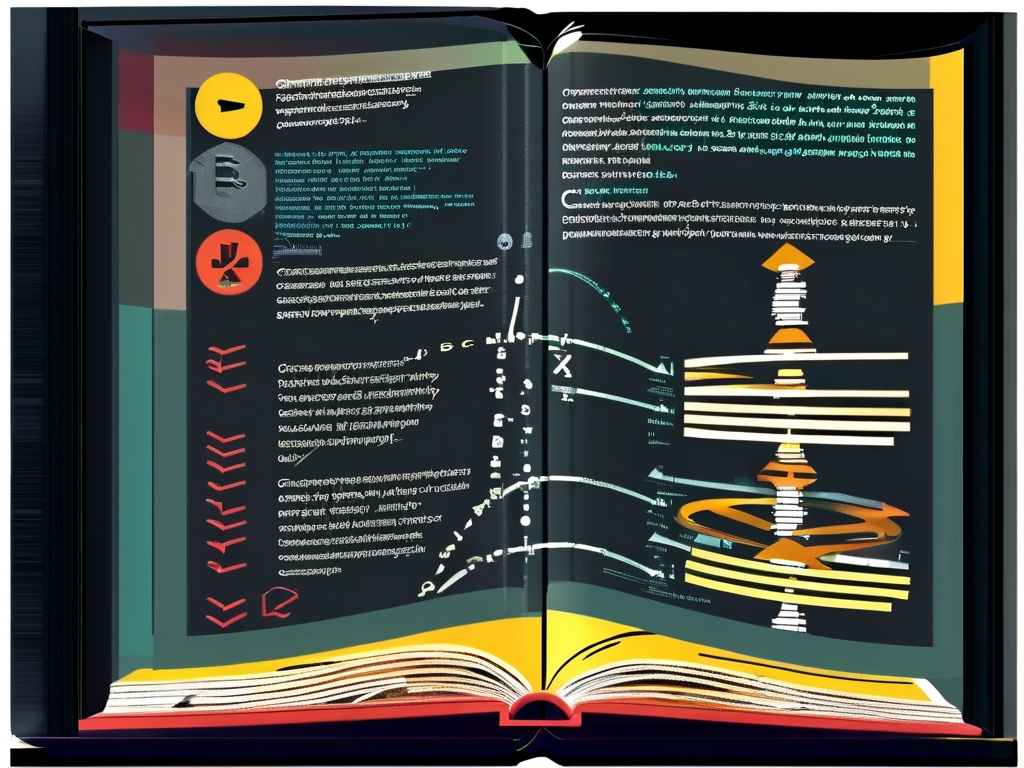

Mastering compiler principles requires a structured approach that balances theoretical understanding with practical implementation. Unlike casual technical reading, studying this specialized field calls for deliberate methods for working through concepts such as lexical analysis, syntax trees, and code optimization. The strategies below aim to improve both comprehension and retention while you study compiler design.

1. Layered Conceptual Breakdown

Compiler theory operates at multiple levels of abstraction. Begin by isolating the core phases: lexical analysis (scanning), syntax analysis (parsing), semantic analysis, intermediate code generation, optimization, and code generation. Build mental maps with analogies; for instance, compare a compiler's phases to a factory assembly line in which source code undergoes a sequence of transformations. Sketching syntax diagrams or finite-automaton state diagrams helps make these abstract processes visible.
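The first station of that assembly line, lexical analysis, can be sketched in a few lines of Python with the standard `re` module. This is a minimal illustration under stated assumptions: the token classes `NUMBER`, `IDENT`, and `OP` are hypothetical choices for a toy language, and Python's regex engine stands in for the hand-built finite automaton a scanner generator would produce.

```python
import re

# Hypothetical token classes for a toy language. Alternatives are tried
# left to right at each position, so order matters for overlapping patterns.
TOKEN_SPEC = [
    ("NUMBER", r"[0-9]+"),
    ("IDENT", r"[A-Za-z_][A-Za-z0-9_]*"),
    ("OP", r"[+\-*/=]"),
    ("SKIP", r"\s+"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source: str) -> list:
    """Split source text into (kind, lexeme) pairs, discarding whitespace."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)  # anchored match at the current position
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at {pos}")
        if match.lastgroup != "SKIP":  # lastgroup names the alternative that matched
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens
```

For `tokenize("a = b + c*2")` the scanner returns seven tokens, beginning with `("IDENT", "a")` and ending with `("NUMBER", "2")`; the later phases never see raw characters again.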

Supplement textbooks with direct observation of real compilers. Modify open-source compilers (e.g., TinyCC or LuaJIT) to print their internal representations, or use built-in dump options where a compiler provides them, such as Clang's -Xclang -ast-dump for syntax trees and -S -emit-llvm for LLVM IR. Watching how a = b + c*2 evolves from an abstract syntax tree into three-address code reinforces the theory with concrete examples.
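Python's standard `ast` module offers a quick way to watch the step from syntax tree to three-address code without modifying a compiler. The sketch below parses `a = b + c*2` (which happens to be valid Python) and lowers it with a post-order walk. The temporary names `t1`, `t2`, ... and the textual instruction format are illustrative assumptions, not any real compiler's IR.

```python
import ast
import itertools

# Map Python's AST operator classes to the symbols printed in the output.
OPS = {ast.Add: "+", ast.Sub: "-", ast.Mult: "*", ast.Div: "/"}


def to_three_address(source: str) -> list:
    """Lower one assignment such as 'a = b + c*2' into three-address code."""
    code = []
    temps = itertools.count(1)  # numbers for fresh temporaries t1, t2, ...

    def lower(node: ast.expr) -> str:
        """Emit instructions for node; return the operand that holds its value."""
        if isinstance(node, ast.Name):
            return node.id
        if isinstance(node, ast.Constant):
            return repr(node.value)
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            left = lower(node.left)    # children first: a post-order traversal
            right = lower(node.right)
            temp = f"t{next(temps)}"
            code.append(f"{temp} = {left} {OPS[type(node.op)]} {right}")
            return temp
        raise NotImplementedError(ast.dump(node))

    stmt = ast.parse(source).body[0]
    if not (isinstance(stmt, ast.Assign) and len(stmt.targets) == 1
            and isinstance(stmt.targets[0], ast.Name)):
        raise ValueError("expected a single assignment to a variable")
    result = lower(stmt.value)
    code.append(f"{stmt.targets[0].id} = {result}")
    return code


for line in to_three_address("a = b + c*2"):
    print(line)
```

Because `*` binds tighter than `+`, the multiplication sits deeper in the tree and is emitted first, so the loop prints `t1 = c * 2`, then `t2 = b + t1`, then `a = t2`.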

2. Project-Driven Learning

Implementing a miniature compiler demystifies abstract textbook diagrams. Start with a subset of a language such as BASIC, or with a custom arithmetic-expression language. Use generator tools at first (Flex for scanning, Bison for parsing) so that low-level details do not bog you down. For example, a Bison grammar for integer arithmetic might look like this:

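```yacc
%{
#include <stdio.h>
int yylex(void);   /* supplied by a Flex-generated scanner */
void yyerror(const char *msg) { fprintf(stderr, "%s\n", msg); }
%}

%token NUMBER

/* Later %left lines bind tighter: '*' and '/' outrank '+' and '-'. */
%left '+' '-'
%left '*' '/'

%%

line: expr '\n'        { printf("%d\n", $1); }
    ;

expr: expr '+' expr    { $$ = $1 + $3; }
    | expr '-' expr    { $$ = $1 - $3; }
    | expr '*' expr    { $$ = $1 * $3; }
    | expr '/' expr    { $$ = $1 / $3; }
    | NUMBER           { $$ = $1; }
    ;
```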

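This snippet shows how each grammar rule pairs with a semantic action that computes the value of the matched phrase, while the %left declarations settle precedence and associativity. Then gradually replace the generated scanner and parser with handwritten code to deepen your understanding of lookahead and of what the generator's parse tables encode.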
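A first handwritten replacement for the generated parser might be a recursive-descent evaluator like the Python sketch below. It handles the operators declared in the Bison snippet's %left lines plus parentheses (an addition for illustration); the class and method names are hypothetical. Each precedence level becomes one method, and a single token of lookahead (`peek`) decides which rule applies.

```python
import re
from typing import Optional


class Parser:
    """Handwritten recursive-descent evaluator for + - * / and parentheses.

    Grammar (one method per precedence level):
        expr   -> term   (('+' | '-') term)*
        term   -> factor (('*' | '/') factor)*
        factor -> NUMBER | '(' expr ')'
    """

    def __init__(self, text: str) -> None:
        # Numbers, operators/parentheses, and any other non-space character
        # (the last alternative lets the parser report stray characters).
        self.tokens = re.findall(r"[0-9]+|[-+*/()]|\S", text)
        self.pos = 0

    def peek(self) -> Optional[str]:
        """Return the lookahead token without consuming it."""
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def advance(self) -> str:
        token = self.peek()
        if token is None:
            raise SyntaxError("unexpected end of input")
        self.pos += 1
        return token

    def parse(self) -> int:
        value = self.expr()
        if self.peek() is not None:
            raise SyntaxError(f"unexpected token {self.peek()!r}")
        return value

    def expr(self) -> int:
        # Looping instead of left recursion makes '+' and '-' left-associative,
        # the same effect %left has in the Bison grammar.
        value = self.term()
        while self.peek() in ("+", "-"):
            op = self.advance()
            rhs = self.term()
            value = value + rhs if op == "+" else value - rhs
        return value

    def term(self) -> int:
        value = self.factor()
        while self.peek() in ("*", "/"):
            op = self.advance()
            rhs = self.factor()
            # Floor division; C's '/' truncates toward zero instead, so the
            # two differ when an operand is negative.
            value = value * rhs if op == "*" else value // rhs
        return value

    def factor(self) -> int:
        token = self.advance()
        if re.fullmatch(r"[0-9]+", token):
            return int(token)
        if token == "(":
            value = self.expr()
            if self.advance() != ")":
                raise SyntaxError("expected ')'")
            return value
        raise SyntaxError(f"unexpected token {token!r}")
```

For example, `Parser("2 + 3 * 4").parse()` evaluates to 14 and `Parser("8 - 4 - 2").parse()` to 2. Recursive descent needs no parse tables: which operator binds tighter, something an LR table encodes implicitly, is explicit here in which method calls which.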

3. Pattern Recognition in Code Optimization

Study real-world compiler output using Compiler Explorer (godbolt.org). Compare how different compilers, such as GCC and Clang, translate the same C++ code into assembly at different optimization levels (for example, -O0 versus -O2). Look for recurring techniques such as loop unrolling and dead code elimination. Record these patterns in a lab notebook; the habit builds the analytical mindset needed to anticipate compiler behavior when writing performance-critical code.
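Dead code elimination can be sketched for a single basic block with one backward liveness pass. This is a simplified illustration, not how GCC or Clang implement it; it assumes a hypothetical instruction format of `(dest, expr)` pairs with space-separated operands, and it assumes no instruction has side effects.

```python
def eliminate_dead_code(block, live_out):
    """Drop assignments in a straight-line block whose results are never read.

    block    -- list of (dest, expr) pairs, e.g. ("t2", "b + t1"); operands in
                expr are separated by spaces (a hypothetical format)
    live_out -- variables still needed after the block ends
    Assumes every instruction is free of side effects.
    """
    live = set(live_out)
    kept = []
    for dest, expr in reversed(block):      # liveness information flows backward
        if dest not in live:
            continue                        # value is never read: a dead store
        kept.append((dest, expr))
        live.discard(dest)                  # this instruction defines dest ...
        live.update(tok for tok in expr.split() if tok.isidentifier())  # ... and reads these
    kept.reverse()
    return kept


block = [("t1", "c * 2"), ("t2", "b + t1"), ("t3", "b - c"), ("a", "t2")]
print(eliminate_dead_code(block, {"a"}))
```

Here only `t3` is removed, because nothing after it reads `t3`. Processing instructions in reverse also lets removals cascade: once a dead instruction is dropped, the operands it alone used become dead too.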

4. Contextualized Mathematics

Revisit discrete mathematics through a compiler lens: set theory underlies token classification, graph theory underlies control flow analysis, and Boolean algebra underlies many peephole optimization rules. For instance, build an NFA for a regular expression, record its transitions as a table or as Boolean matrices (one per input symbol), and convert it to a DFA with the subset construction. Exercises like this bridge the gap between abstract math and engineering practice.
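Converting an NFA to a DFA with the subset construction is set theory applied directly: each DFA state is a set of NFA states. In the Python sketch below, the NFA is a hand-encoded, Thompson-style automaton for (a|b)*abb, numbered like the well-known textbook example; it is an assumption for illustration, not output from any tool.

```python
from collections import deque

EPS = None  # key used for epsilon (empty-string) transitions

# Thompson-style NFA for (a|b)*abb. Each state maps an input symbol
# (or EPS) to the set of successor states.
NFA = {
    0: {EPS: {1, 7}},
    1: {EPS: {2, 4}},
    2: {"a": {3}},
    3: {EPS: {6}},
    4: {"b": {5}},
    5: {EPS: {6}},
    6: {EPS: {1, 7}},
    7: {"a": {8}},
    8: {"b": {9}},
    9: {"b": {10}},
    10: {},
}
NFA_START = 0
NFA_ACCEPTING = {10}
ALPHABET = ("a", "b")


def eps_closure(states):
    """All NFA states reachable from `states` using only epsilon moves."""
    closure = set(states)
    stack = list(states)
    while stack:
        for nxt in NFA[stack.pop()].get(EPS, ()):
            if nxt not in closure:
                closure.add(nxt)
                stack.append(nxt)
    return frozenset(closure)


def move(states, symbol):
    """NFA states reachable from `states` on one `symbol` transition."""
    return {nxt for s in states for nxt in NFA[s].get(symbol, ())}


def subset_construction():
    """Build a DFA whose states are frozensets of NFA states."""
    start = eps_closure({NFA_START})
    transitions = {}
    seen = {start}
    worklist = deque([start])
    while worklist:
        current = worklist.popleft()
        for symbol in ALPHABET:
            # An empty frozenset, if one ever appears, acts as a dead state.
            target = eps_closure(move(current, symbol))
            transitions[(current, symbol)] = target
            if target not in seen:
                seen.add(target)
                worklist.append(target)
    # A DFA state accepts if it contains any accepting NFA state.
    accepting = {state for state in seen if state & NFA_ACCEPTING}
    return start, transitions, accepting, seen


DFA_START, DFA_TRANSITIONS, DFA_ACCEPTING, DFA_STATES = subset_construction()


def dfa_accepts(text):
    """Run the DFA: exactly one transition per input character."""
    state = DFA_START
    for ch in text:
        state = DFA_TRANSITIONS[(state, ch)]
    return state in DFA_ACCEPTING
```

For this NFA the construction yields five DFA states; `dfa_accepts("aababb")` returns True and `dfa_accepts("abba")` returns False. The exponential worst case of the construction is also visible here: there are 2^11 possible subsets, although only five are reachable.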

5. Collaborative Debugging Sessions

Join compiler-focused coding communities to troubleshoot implementation errors. Explaining to others why an LR parser generator reports a shift-reduce conflict on a particular grammar often exposes gaps in your own theoretical knowledge. Compiler-related tags on Stack Overflow and issue threads on GitHub expose you to edge cases that textbooks rarely cover.
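A shift-reduce conflict usually signals a genuinely ambiguous grammar. Take the ambiguous rule E -> E '-' E | NUMBER, with no precedence or associativity declarations. The input 8 - 4 - 2 then has two parse trees. Reducing at the second '-' gives (8 - 4) - 2 = 2, while shifting gives 8 - (4 - 2) = 6. Bison resolves an undeclared shift-reduce conflict in favor of shifting, and a %left declaration tells it to reduce instead. The Python sketch below, whose function name is hypothetical, enumerates every parse tree by brute force with an interval dynamic program; this is not how an LR parser works, but it makes the ambiguity visible.

```python
from functools import lru_cache


def all_parse_values(tokens):
    """Values of every parse tree for tokens under E -> E '-' E | NUMBER.

    The grammar is deliberately ambiguous (no precedence or associativity),
    so one input can have several parse trees with different values.
    """

    @lru_cache(maxsize=None)
    def values(lo, hi):
        # Set of values that tokens[lo:hi] can denote under some parse tree.
        if hi - lo == 1:
            return frozenset({int(tokens[lo])})
        results = set()
        for split in range(lo + 1, hi - 1):
            if tokens[split] == "-":  # try this '-' as the root of the tree
                for left in values(lo, split):
                    for right in values(split + 1, hi):
                        results.add(left - right)
        return frozenset(results)

    return set(values(0, len(tokens)))


print(all_parse_values(["8", "-", "4", "-", "2"]))
```

The call returns the set {2, 6}: two trees, two answers. That disagreement is what the parser generator is warning about.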

6. Historical Perspective Study

Analyze seminal work such as Backus and colleagues' reports on the original FORTRAN compiler or Frances Allen's research on program optimization and control flow analysis. Tracing how solutions to problems like register allocation and type checking evolved reveals the design trade-offs behind them. Modern architectures such as LLVM become easier to understand when viewed as iterations on these foundational ideas.

Avoid common pitfalls such as relying on a single resource or neglecting runtime concerns like memory management. Schedule weekly code reviews with peers to stay accountable. Use a spaced-repetition system to retain key algorithms; flashcards work particularly well for Knuth's LR parsing or Aho and Corasick's multi-pattern string matching.

Ultimately, compiler mastery comes from alternating between close examination of code and big-picture architectural thinking. Pair each chapter of the Dragon Book (Aho et al., Compilers: Principles, Techniques, and Tools) with hands-on experiments, using theory to explain experimental results and experiments to test the theory. This back-and-forth turns opaque compilation phases into a coherent mental model of how high-level logic becomes machine-executable code.