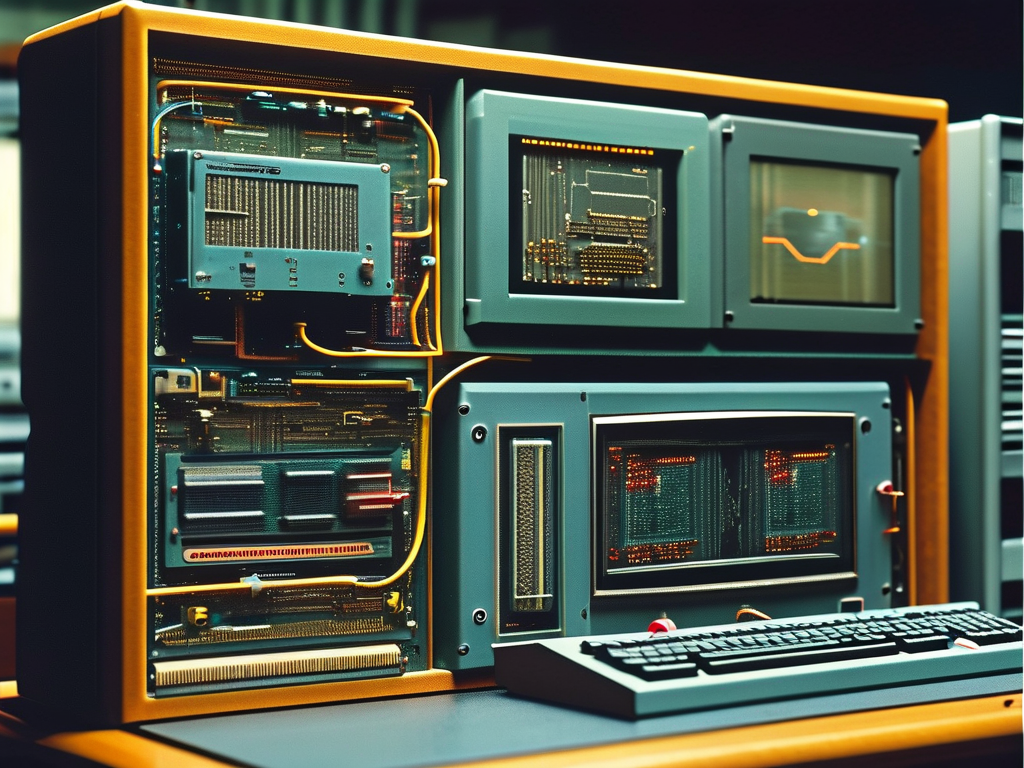

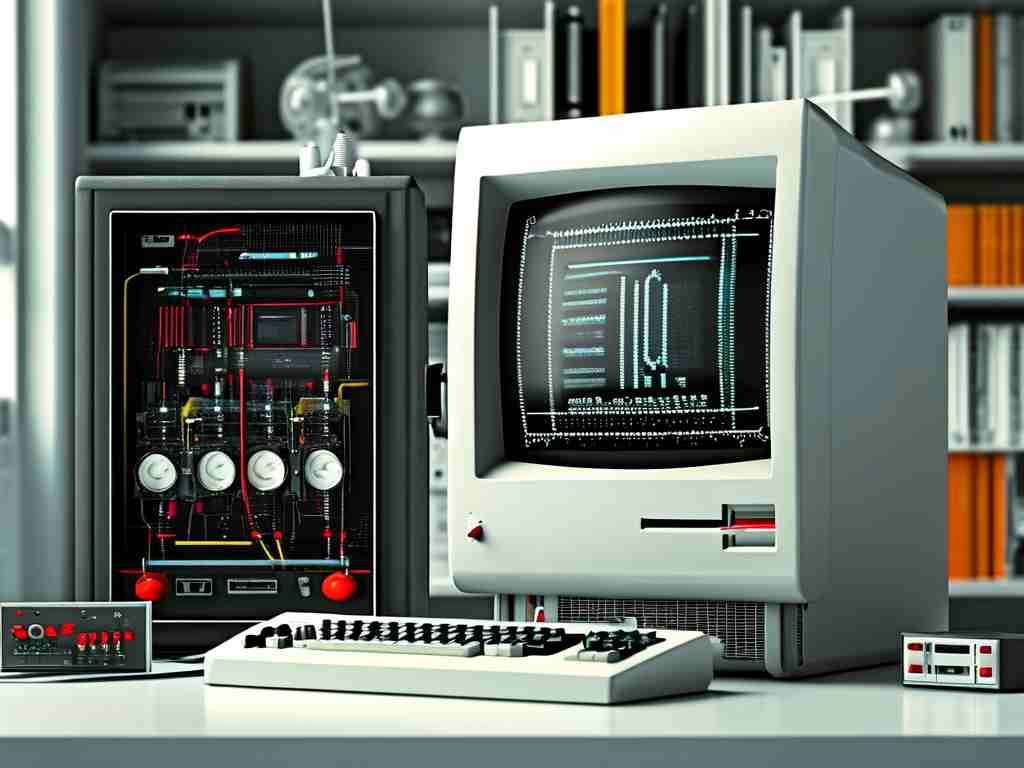

The earliest electronic computers, developed in the 1940s and 1950s, relied on innovative yet primitive methods to manage memory and perform calculations. Unlike modern systems with semiconductor-based RAM, first-generation computers used acoustic, magnetic, and electrostatic mechanisms to store and retrieve data, requiring engineers to adopt creative approaches for memory allocation and computation.

One of the most notable memory technologies of this era was delay line memory, which sent acoustic pulses through a liquid medium, typically mercury. Bits were represented as pulses of sound that were detected at the far end of the tube, regenerated, and fed back into the near end, so data circulated continuously and a bit could be read only at the moment it emerged. For example, the UNIVAC I used mercury delay lines to hold 1,000 words of 12 characters each. Programmers had to account for the inherent latency in these systems when designing algorithms, as accessing specific data depended on synchronizing with the wave's circulation period.
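The waiting behavior described above can be illustrated with a toy model. This is a minimal sketch, not a model of any specific machine: the `DelayLine` class, its 8-bit length, and the idea of counting time in "pulse periods" are assumptions made for illustration.

```python
class DelayLine:
    """Toy recirculating delay line: bits emerge one per pulse period, in order."""

    def __init__(self, bits):
        self.bits = list(bits)
        self.head = 0  # index of the bit currently emerging at the output end

    def tick(self):
        """Advance one pulse period; the emerged bit re-enters at the input end."""
        self.head = (self.head + 1) % len(self.bits)

    def read(self, position):
        """Wait until the bit at `position` emerges.

        Returns (bit value, pulse periods spent waiting).
        """
        waited = 0
        while self.head != position:
            self.tick()
            waited += 1
        return self.bits[position], waited


line = DelayLine([1, 0, 1, 1, 0, 0, 1, 0])
print(line.read(3))  # (1, 3): bit 3 is three pulse periods away
print(line.read(1))  # (0, 6): bit 1 has just passed, so wait almost a full cycle
```

The second read shows why access order mattered: asking for a bit that has just gone by costs nearly a full circulation period.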

Another method involved magnetic drum memory, where data was stored on rotating cylinders coated with ferromagnetic material. The IBM 650, introduced in 1954, used a drum spinning at 12,500 RPM with read/write heads positioned a fraction of an inch from the surface. Each instruction had to be timed to coincide with the drum's rotation, a process akin to catching a specific train car as it passes a station: if the needed word had just gone by, the machine waited most of a revolution for it to come around again. Programmers therefore placed each instruction at the drum address that would arrive under the head just as the previous instruction finished, rather than at consecutive addresses, to minimize wait times.
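The timing problem can be sketched in a few lines. The 12,500 RPM figure comes from the text; the 50-words-per-track layout and the 5 word-times an instruction keeps the machine busy are illustrative assumptions, and the function names are hypothetical.

```python
WORDS_PER_TRACK = 50   # assumed number of word slots around one drum track
DRUM_RPM = 12_500      # rotation speed cited for the IBM 650


def revolution_time_ms(rpm):
    """Time for one full drum revolution, in milliseconds."""
    return 60_000 / rpm


def wait_word_times(head_pos, target, words_per_track=WORDS_PER_TRACK):
    """Word slots that must pass before `target` reaches the read head."""
    return (target - head_pos) % words_per_track


def optimal_next_address(current, busy_word_times, words_per_track=WORDS_PER_TRACK):
    """Slot that is under the head exactly when the current instruction finishes."""
    return (current + busy_word_times) % words_per_track


# An instruction at slot 10 that keeps the machine busy for 5 word-times
# (an assumed figure) leaves the head over slot 15 when it completes.
head_after = optimal_next_address(10, 5)
print(head_after)                       # 15
print(wait_word_times(head_after, 15))  # 0: next instruction placed optimally
print(wait_word_times(head_after, 11))  # 46: sequential placement waits most of a turn
```

With these assumptions one revolution takes 60,000 / 12,500 = 4.8 ms, so the 46 wasted word-times in the sequential case cost most of a revolution for a single instruction fetch.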

Memory calculation in these systems followed a cycle-based paradigm. Each operation—whether fetching data or executing an instruction—depended on rigidly timed intervals dictated by the hardware's physical limitations. Programmers manually allocated memory addresses while accounting for:

- Storage medium latency (e.g., about 4.8 ms per revolution for a drum spinning at 12,500 RPM)

- Signal propagation delays in vacuum tube circuits

- Mechanical wear affecting component reliability

A practical example emerges in the ENIAC (1945), which used function tables with rotary switches for memory. To calculate artillery trajectories, technicians physically reconfigured patch cables and switches—a process taking days. While not "memory" in the modern sense, this illustrates how computation and storage were deeply intertwined through hardware manipulation.

First-generation systems also faced challenges in reliability and error detection. The Williams-Kilburn tube (used in the Manchester Baby, 1948) stored bits as spots of charge on the face of a cathode-ray tube, and these had to be refreshed constantly to prevent charge leakage. Designers of the period also turned to parity checks and redundancy, laying groundwork for modern error-detection algorithms.
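As an illustration of the parity idea mentioned above, here is a minimal sketch of even parity over a list of bits. It shows the general technique only; it is not a reconstruction of any particular machine's circuitry, and the function names are hypothetical.

```python
def add_parity(bits):
    """Append an even-parity bit so the total number of 1s is even."""
    return bits + [sum(bits) % 2]


def check_parity(word):
    """Return True if the word (data bits plus parity bit) has an even number of 1s."""
    return sum(word) % 2 == 0


stored = add_parity([1, 0, 1, 1])
print(stored)                # [1, 0, 1, 1, 1]
print(check_parity(stored))  # True

# Simulate a single bit lost to charge leakage.
corrupted = stored.copy()
corrupted[2] ^= 1
print(check_parity(corrupted))  # False
```

A single parity bit detects any odd number of flipped bits but cannot say which bit failed, and it misses an even number of flips, which is why it was typically paired with other forms of redundancy.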

The transition to binary representation marked a critical evolution. Early machines like the Harvard Mark I (1944) used decimal counter wheels, but later designs increasingly adopted binary coding to align with vacuum tube logic states (on/off). This shift simplified memory calculations by reducing conversion overhead between numeric systems.

Despite their limitations, these pioneering approaches established foundational concepts still relevant today. The principle of memory hierarchy—balancing speed, capacity, and cost—originated from the contrast between fast but small delay lines and slower but larger magnetic drums. Similarly, the concept of clock cycles as a coordination mechanism persists in modern CPUs, albeit at nanosecond rather than millisecond scales.

In retrospect, the ingenuity of early computer scientists shines through their solutions to memory challenges. They engineered workarounds like:

- Optimal instruction sequencing to match storage medium rotation

- Modular memory units for expandability (e.g., adding delay line modules)

- Hybrid systems combining multiple memory types for specialized tasks

These innovations not only advanced computing but also influenced fields like telecommunications and control systems. The lessons learned from first-generation memory constraints continue to inform low-level programming and embedded system design, proving that technological progress often builds on the creative problem-solving of predecessors.