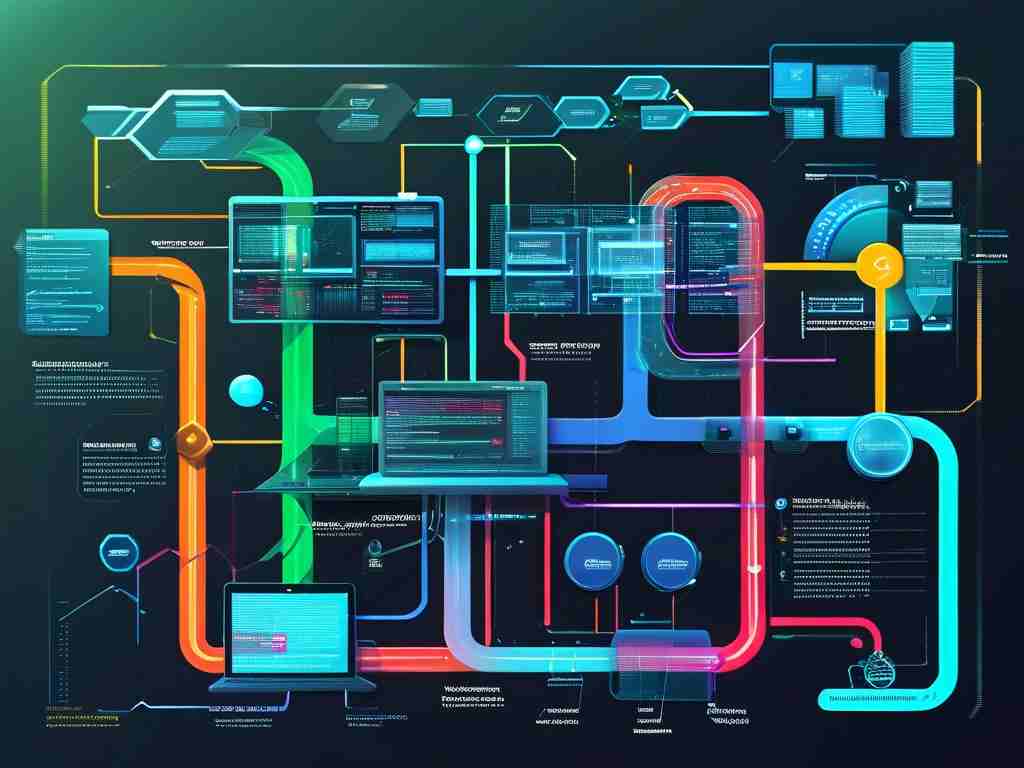

In modern cloud computing architectures, Server Load Balancer (SLB) technology plays a pivotal role in ensuring high availability, scalability, and optimal resource utilization. This article explores the technical foundations of SLB, its operational mechanics, and its significance in distributed systems.

Fundamental Concepts of SLB

SLB operates as a traffic management layer between clients and backend servers. Its primary function is to distribute incoming network requests across multiple servers to prevent overload on any single node. By doing so, SLB enhances application responsiveness and fault tolerance. Unlike traditional hardware-based load balancers, modern SLB solutions are often software-defined, enabling dynamic adjustments based on real-time traffic patterns.

Traffic Distribution Mechanisms

At the heart of SLB lies its algorithm-driven traffic distribution. Common algorithms include:

- Round Robin: Sequentially assigns requests to servers in a cyclic order.

- Weighted Round Robin: Assigns requests based on predefined server capacity weights.

- Least Connections: Directs traffic to the server with the fewest active connections.

- IP Hash: Hashes the client IP address to select a server, so a given client keeps reaching the same server as long as the backend pool is unchanged.

For example, a simplified Round Robin implementation might use the following logic:

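    # Backend pool; the names are placeholders.
    servers = ["Server_A", "Server_B", "Server_C"]
    current_index = 0  # position of the next server to hand out

    def get_next_server():
        """Return the next server in cyclic order."""
        global current_index
        server = servers[current_index]
        # Advance and wrap around at the end of the list.
        current_index = (current_index + 1) % len(servers)
        return server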
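
The other algorithms in the list can be sketched in the same spirit. The block below is a minimal illustration, not a production design: the `WEIGHTS` table, the function names, and the choice of SHA-256 for hashing are all assumptions made for the example. The weighted variant simply repeats each server according to its weight, which sends requests in bursts; real balancers typically interleave servers more smoothly.

```python
import hashlib
import itertools

# Hypothetical pool: server names and weights are illustrative only.
WEIGHTS = {"Server_A": 3, "Server_B": 1, "Server_C": 1}


def weighted_round_robin(weights):
    """Return an endless iterator in which each server appears
    `weight` times per cycle (naive expansion, not smooth interleaving)."""
    expanded = [name for name, w in weights.items() for _ in range(w)]
    return itertools.cycle(expanded)


def least_connections(active):
    """Return the server with the fewest active connections.
    `active` maps server name -> current connection count."""
    return min(active, key=active.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server using a stable hash, so the same
    IP lands on the same server while the list is unchanged."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

With these weights, `Server_A` receives three of every five requests. Note that `ip_hash` remaps many clients whenever the server list changes; consistent hashing is the usual remedy when that matters.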

Health Monitoring and Failover

SLB continuously monitors server health through heartbeat checks or HTTP/HTTPS probes. If a server fails to respond within a configured timeout, the SLB automatically reroutes traffic to healthy nodes. This process minimizes downtime and keeps the service available while the failed node is repaired. Advanced systems also support gradual reintroduction of recovered servers, ramping their traffic share up slowly to avoid sudden spikes.
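
The health-state bookkeeping described here can be sketched as a small state machine. This is an illustrative sketch only: the `Backend` class, the `record_probe` function, and the threshold values are assumptions, and the probe itself (a TCP connect or HTTP request with a timeout) is left to the caller. Requiring several consecutive failures before marking a server down, and several successes before bringing it back, is a common way to avoid flapping.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True
    failures: int = 0   # consecutive failed probes
    successes: int = 0  # consecutive successful probes


def record_probe(backend, ok, fail_threshold=3, rise_threshold=2):
    """Update a backend's health state from one probe result.
    Thresholds are hypothetical defaults, not values from any product."""
    if ok:
        backend.failures = 0
        backend.successes += 1
        if not backend.healthy and backend.successes >= rise_threshold:
            backend.healthy = True   # recovered: eligible for traffic again
    else:
        backend.successes = 0
        backend.failures += 1
        if backend.healthy and backend.failures >= fail_threshold:
            backend.healthy = False  # failed: stop routing to it


def healthy_backends(pool):
    """Return only the backends that should currently receive traffic."""
    return [b for b in pool if b.healthy]
```

The balancing algorithm would then choose only from `healthy_backends(pool)`, which is what makes failover automatic.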

Layer 4 vs. Layer 7 Load Balancing

SLB operates at two primary OSI model layers:

- Layer 4 (Transport Layer): Makes decisions based on TCP/UDP metadata (e.g., source/destination IP/port). This approach offers low latency but lacks visibility into application-layer data.

- Layer 7 (Application Layer): Analyzes HTTP headers, URLs, or cookies for granular traffic control. While slightly slower due to deeper packet inspection, it enables content-aware routing and SSL termination.
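
The difference between the two layers shows up in which inputs a routing decision can use. The sketch below is illustrative only: the pool names, the path prefixes, and the functions `route_l4` and `route_l7` are hypothetical. The Layer 4 function sees nothing but the connection tuple, while the Layer 7 function can inspect the request path; headers or cookies could be used the same way.

```python
import hashlib

# Hypothetical pools and path rules, for illustration only.
API_POOL = ["api-1", "api-2"]
STATIC_POOL = ["static-1"]
DEFAULT_POOL = ["web-1", "web-2"]


def _pick(pool, key):
    """Choose a pool member from a stable hash of `key`."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return pool[int.from_bytes(digest[:8], "big") % len(pool)]


def route_l4(src_ip, src_port, dst_ip, dst_port):
    """Layer 4: only addresses and ports are visible, so every
    connection goes to the same pool regardless of its content."""
    return _pick(DEFAULT_POOL, f"{src_ip}:{src_port}->{dst_ip}:{dst_port}")


def route_l7(path, client_ip):
    """Layer 7: the request path selects the pool (content-aware routing)."""
    if path.startswith("/api/"):
        pool = API_POOL
    elif path.startswith("/static/"):
        pool = STATIC_POOL
    else:
        pool = DEFAULT_POOL
    return _pick(pool, client_ip)
```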

Session Persistence Strategies

For stateful applications requiring continuous user-server affinity, SLB implements session persistence using:

- Cookie Insertion: Adds a session cookie to the first response sent to a client; the client returns it on later requests, and the SLB uses it to select the same server

- Source IP Binding: Maps client IPs to specific servers

- SSL Session ID: Uses the SSL/TLS session identifier established during the handshake to keep a client on the same server
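
As one illustration, cookie-based persistence might look roughly like the following. The cookie name `SLB_BACKEND`, the function `choose_backend`, and storing the server name in plain text are all assumptions made for this sketch; a real deployment would normally sign or obfuscate the value so that clients cannot choose their own backend.

```python
import itertools

SERVERS = ["Server_A", "Server_B", "Server_C"]
COOKIE_NAME = "SLB_BACKEND"  # hypothetical cookie name
_rotation = itertools.cycle(SERVERS)


def choose_backend(request_cookies):
    """Return (server, cookies_to_set) for one request.
    `request_cookies` is a dict of cookies sent by the client."""
    pinned = request_cookies.get(COOKIE_NAME)
    if pinned in SERVERS:
        # Returning client: honor the affinity cookie, set nothing new.
        return pinned, {}
    # New client or stale cookie: pick a server round-robin and
    # pin it by setting the cookie on the response.
    server = next(_rotation)
    return server, {COOKIE_NAME: server}
```

A first request arrives with no cookie and is assigned a server; every later request that carries the cookie goes back to that same server until it leaves the pool.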

Scalability and Elasticity

Cloud-native SLB solutions integrate with auto-scaling groups to dynamically adjust backend server pools. During traffic surges, new instances are automatically provisioned and registered with the SLB. Conversely, during low-demand periods, excess resources are decommissioned to reduce costs.
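
The scaling decision can be reduced to simple arithmetic. The sketch below is a simplified illustration with assumed names and limits (`desired_instances`, `reconcile`, the minimum and maximum pool sizes, and the `instance-N` naming are all hypothetical); real auto-scaling groups also apply cooldown periods and drain connections before removing an instance.

```python
import math


def desired_instances(total_rps, target_rps_per_instance, min_size=2, max_size=20):
    """Instances needed to keep per-instance load at or below the
    target, clamped to the group's size limits."""
    needed = math.ceil(total_rps / target_rps_per_instance)
    return max(min_size, min(max_size, needed))


def reconcile(pool, desired):
    """Return a new backend list grown or shrunk to `desired` members.
    Growing stands in for provisioning and registering with the SLB;
    shrinking stands in for deregistering and decommissioning."""
    pool = list(pool)
    while len(pool) < desired:
        pool.append(f"instance-{len(pool) + 1}")  # hypothetical naming
    return pool[:desired]
```

For example, 4,500 requests per second at a target of 500 per instance calls for nine instances, while a quiet period falls back to the configured minimum.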

Security Integration

Modern SLB systems incorporate security features such as:

- DDoS mitigation via traffic rate limiting

- Web Application Firewall (WAF) integration

- TLS/SSL offloading to reduce backend server overhead
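
Rate limiting is often implemented with a token bucket. The following is a minimal sketch of that technique, assuming one bucket per client; the class name, parameters, and injectable `clock` argument are choices made for the example rather than any product's API.

```python
import time


class TokenBucket:
    """Allow roughly `rate` requests per second, with bursts
    of up to `capacity` requests."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock            # injectable so tests can control time
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        """Return True if the request may pass, False if it is rate limited."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A balancer would typically keep one such bucket per client IP or API key and reject or queue requests when `allow()` returns `False`. This limits abusive clients but is only one layer of DDoS defense.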

Real-World Applications

E-commerce platforms use SLB to handle flash sales by distributing millions of concurrent requests. Video streaming services leverage geographic load balancing to route users to the nearest content delivery nodes. Financial institutions rely on SLB for zero-downtime maintenance and regulatory-compliant traffic encryption.

Challenges and Optimization

While SLB significantly improves system reliability, improper configuration can lead to bottlenecks. Common pitfalls include unbalanced weight assignments, overly aggressive health check intervals, and misaligned layer 7 routing rules. Performance tuning often involves analyzing metrics like request latency, error rates, and connection churn.
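
Two of these metrics are straightforward to compute from access logs. The helpers below are a sketch under simple assumptions: latencies are given in milliseconds, only 5xx status codes count as errors, and the percentile uses the nearest-rank method. The function names are illustrative.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value such that at
    least `pct` percent of the samples are less than or equal to it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def error_rate(status_codes):
    """Fraction of responses with a 5xx status code."""
    errors = sum(1 for code in status_codes if code >= 500)
    return errors / len(status_codes)
```

Tracking a high percentile such as p95 alongside the error rate tends to reveal an overloaded or misweighted backend sooner than the average latency does.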

As distributed systems grow in complexity, SLB continues to evolve with innovations like machine learning-driven traffic prediction and edge computing integration. These advancements promise to further refine load balancing precision while reducing operational overhead.