The rapid evolution of quantum computing has sparked curiosity about its underlying infrastructure, particularly the role of memory systems. Classical computers store data in binary bits (0s and 1s); quantum memory instead stores quantum bits, or qubits. A qubit can exist in a superposition of 0 and 1, and a register of n qubits is described by 2^n complex amplitudes, which quantum algorithms manipulate through interference rather than by reading out every value at once. But what exactly constitutes quantum memory, and how does it differ from conventional memory architectures? This article delves into the mechanics, challenges, and future potential of quantum computing memory.

The Foundation of Quantum Memory

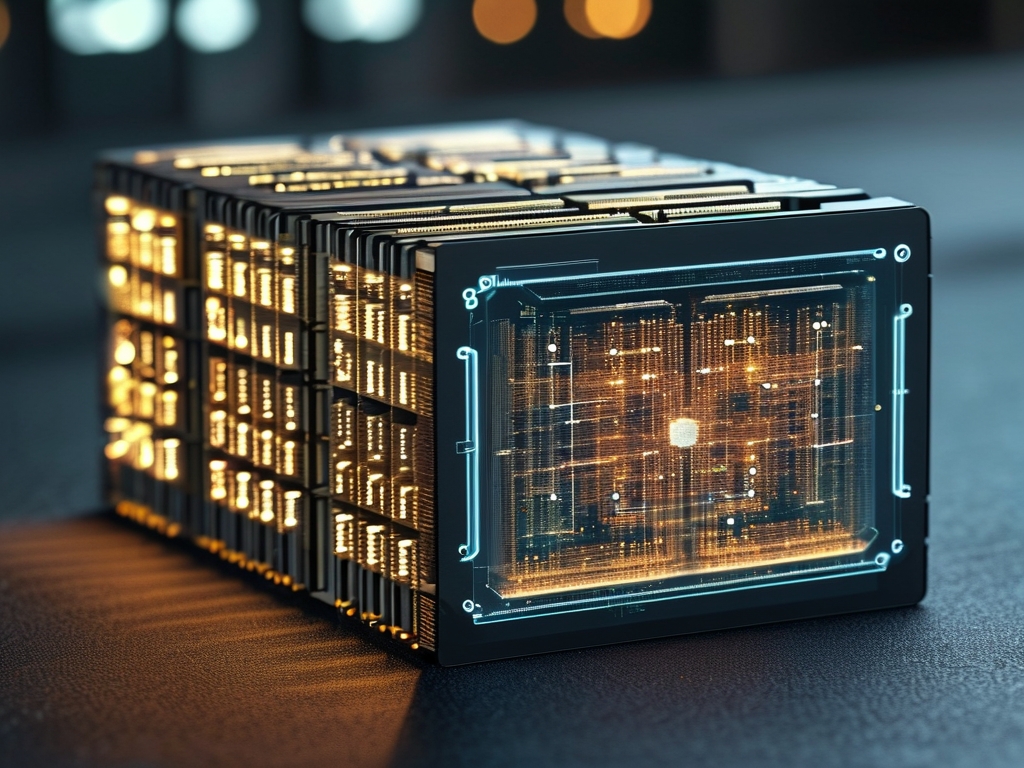

At its core, quantum memory is designed to store qubits while keeping them coherent. Classical memory, such as DRAM, SSDs, or hard drives, stores data as electrical charges or magnetic orientations. In contrast, quantum memory preserves qubits in physical systems like trapped ions, superconducting circuits, or photonic systems. For instance, superconducting qubits are kept at temperatures close to absolute zero (typically tens of millikelvin) to limit decoherence, the process by which a qubit loses its quantum state through interaction with its environment.
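To make decoherence concrete, here is a minimal sketch of one common toy model, pure dephasing, in which the off-diagonal terms of a qubit's density matrix decay exponentially with a coherence time T2. The function names `dephase` and `coherence` and the 1 ms value of `T2` are assumptions chosen for illustration; they do not describe any particular hardware.

```python
import numpy as np

def dephase(rho, t, t2):
    """Return a single-qubit density matrix after pure dephasing for time t.

    Toy model: populations (diagonal) are untouched, while the coherences
    (off-diagonal terms) shrink by exp(-t / t2).
    """
    out = np.array(rho, dtype=complex)
    decay = np.exp(-t / t2)
    out[0, 1] *= decay
    out[1, 0] *= decay
    return out

def coherence(rho):
    """Size of the off-diagonal term, scaled so that |+> gives 1.0."""
    return 2 * abs(rho[0, 1])

# Equal superposition |+> = (|0> + |1>) / sqrt(2)
plus = np.array([1, 1]) / np.sqrt(2)
rho_plus = np.outer(plus, plus.conj())

T2 = 1e-3  # hypothetical coherence time of 1 ms, chosen only for illustration
for t in (0.0, 0.5e-3, 1e-3, 5e-3):
    c = coherence(dephase(rho_plus, t, T2))
    print(f"t = {t * 1e3:.1f} ms  coherence = {c:.3f}")
```

In this model the probabilities of reading 0 or 1 never change, yet the phase relationship between them fades, which is exactly the information a quantum memory has to protect.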

One promising approach uses nitrogen-vacancy (NV) centers in diamond. An NV center is a point defect in which a nitrogen atom sits next to a vacant site in the diamond lattice, and the electron spin associated with the defect can serve as a qubit. Researchers at institutions like MIT and IBM have demonstrated that NV-based memory systems can retain quantum information for milliseconds, which is long compared with the time a single quantum operation takes. Such advancements highlight the delicate balance required to sustain qubit integrity while enabling scalable memory solutions.

Challenges in Quantum Memory Development

Building reliable quantum memory faces formidable hurdles. Decoherence remains the primary obstacle, as even minor thermal fluctuations or stray electromagnetic fields can disrupt qubit states. To combat this, engineers employ quantum error-correcting codes, which use entanglement to spread one logical qubit across several physical qubits. Because an unknown quantum state cannot be copied (the no-cloning theorem), this redundancy is not a backup copy. Instead, parity-check measurements reveal which error occurred without revealing the stored data, so the error can be undone. However, maintaining entanglement over time and space demands precision engineering and ultra-stable environments.
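The idea behind error-correcting codes can be illustrated with the three-qubit bit-flip code, the simplest textbook example. The sketch below simulates it with a plain state vector; the helper names (`encode`, `flip`, `syndrome`, `correct`) are hypothetical, and the code handles only a single bit flip, not the phase errors that practical codes must also correct.

```python
import numpy as np

def encode(a, b):
    """Encode a|0> + b|1> as the entangled state a|000> + b|111>."""
    state = np.zeros(8, dtype=complex)
    state[0b000] = a
    state[0b111] = b
    return state

def flip(state, qubit):
    """Apply a bit flip (Pauli X) to one qubit; qubit 0 is the leftmost bit."""
    mask = 1 << (2 - qubit)
    out = np.empty_like(state)
    for idx in range(8):
        out[idx ^ mask] = state[idx]
    return out

def syndrome(state):
    """Parities of qubits (0,1) and (1,2).

    Both basis states in the encoded superposition share the same parities,
    so reading them reveals the error location but nothing about a or b.
    """
    idx = int(np.flatnonzero(np.abs(state) > 1e-12)[0])
    b0, b1, b2 = (idx >> 2) & 1, (idx >> 1) & 1, idx & 1
    return (b0 ^ b1, b1 ^ b2)

# Which qubit to flip back for each syndrome (None means no error detected)
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state):
    qubit = CORRECTION[syndrome(state)]
    return state if qubit is None else flip(state, qubit)

logical = encode(0.6, 0.8)
for q in range(3):
    damaged = flip(logical, q)
    print(q, syndrome(damaged), np.allclose(correct(damaged), logical))
```

Note that the encoded state is not three copies of the original qubit: the amplitudes a and b appear only once, shared across the three entangled qubits.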

Another challenge lies in read-write operations. Classical memory can be read repeatedly without altering its contents, but measuring a qubit collapses its superposition onto a single outcome, destroying the stored amplitudes. Solutions like "non-destructive measurement" techniques are being explored, where qubits are probed without fully collapsing their states. For example, a 2023 study by Google Quantum AI showcased a photonic memory system that uses weak laser pulses to read qubit states indirectly, preserving their coherence.
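The read problem can be seen in a small simulation of an ordinary projective measurement. This is a sketch under simple assumptions (a single ideal qubit, measured in the computational basis), and the function name `measure` is hypothetical; it does not model the non-destructive techniques mentioned above.

```python
import numpy as np

def measure(state, rng):
    """Projectively measure one qubit in the 0/1 basis.

    Returns the outcome and the post-measurement state. The outcome is
    drawn with the Born-rule probabilities |amplitude|^2, and the state
    collapses to the basis state that was observed.
    """
    probs = np.abs(state) ** 2
    probs = probs / probs.sum()  # guard against rounding error
    outcome = int(rng.choice(2, p=probs))
    collapsed = np.zeros(2, dtype=complex)
    collapsed[outcome] = 1.0
    return outcome, collapsed

rng = np.random.default_rng(seed=0)
stored = np.array([0.6, 0.8])  # amplitudes for |0> and |1>

first, after = measure(stored, rng)
repeats = [measure(after, rng)[0] for _ in range(5)]
print("first read:", first, "repeat reads:", repeats)
```

After the first read, every later read returns the same bit: the original amplitudes 0.6 and 0.8 are gone, and a single measurement was never enough to learn them in the first place.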

Applications and Future Directions

Quantum memory is pivotal for applications such as quantum networking and fault-tolerant computing. In quantum networks, memory nodes store entangled qubits to enable secure communication over long distances—a cornerstone of quantum cryptography. Companies like Toshiba and Alibaba are experimenting with metropolitan-scale quantum networks that rely on memory hubs to synchronize data transmission.

Looking ahead, hybrid systems integrating classical and quantum memory may bridge the gap during the transition to full-scale quantum computing. For instance, classical RAM could hold program control and ordinary data, while quantum memory holds the qubit registers used by algorithms such as Shor’s factoring algorithm or quantum machine learning models. Researchers are also studying "quantum RAM" (qRAM), which would let a quantum algorithm query classical data with an address register in superposition and receive a superposition of the corresponding entries. An efficient qRAM would be a critical step for practical implementations of many such algorithms.
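What a qRAM query is meant to do can be written down as a state-vector mapping: an address register in superposition, paired with a data qubit in |0>, becomes a superposition of addresses each tagged with its stored bit. The sketch below simulates that idealized mapping for one-bit memory cells. The function name `qram_query` is hypothetical, and the code says nothing about how such a device would be built in hardware.

```python
import numpy as np

def qram_query(address_amps, memory):
    """Simulate sum_i a_i |i>|0>  ->  sum_i a_i |i>|x_i> for 1-bit cells.

    address_amps: amplitudes a_i over the memory addresses.
    memory:       classical bits x_i, one per address.
    Returns an array indexed as [address, data_bit].
    """
    n = len(memory)
    state = np.zeros((n, 2), dtype=complex)
    state[:, 0] = address_amps  # data qubit starts in |0>
    out = np.zeros_like(state)
    for i, bit in enumerate(memory):
        # For each address, XOR the stored bit into the data qubit.
        out[i, 0 ^ bit] = state[i, 0]
        out[i, 1 ^ bit] = state[i, 1]
    return out

memory = [0, 1, 1, 0]
uniform = np.full(4, 0.5)  # equal superposition over 4 addresses
result = qram_query(uniform, memory)
for addr in range(4):
    for bit in (0, 1):
        if abs(result[addr, bit]) > 0:
            print(f"|{addr}>|{bit}>  amplitude {result[addr, bit].real:.2f}")
```

A single query touches all four addresses at once, but a measurement afterwards still yields only one address and its bit, so any speedup has to come from the algorithm that processes the superposition.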

Quantum computing memory represents both a technological marvel and a work in progress. While challenges like decoherence and read-write limitations persist, innovations in materials science and error correction continue to push boundaries. As quantum hardware matures, memory systems will play an increasingly central role in unlocking the full potential of this revolutionary technology. From securing global communications to accelerating drug discovery, the future of quantum memory promises to reshape computing as we know it.