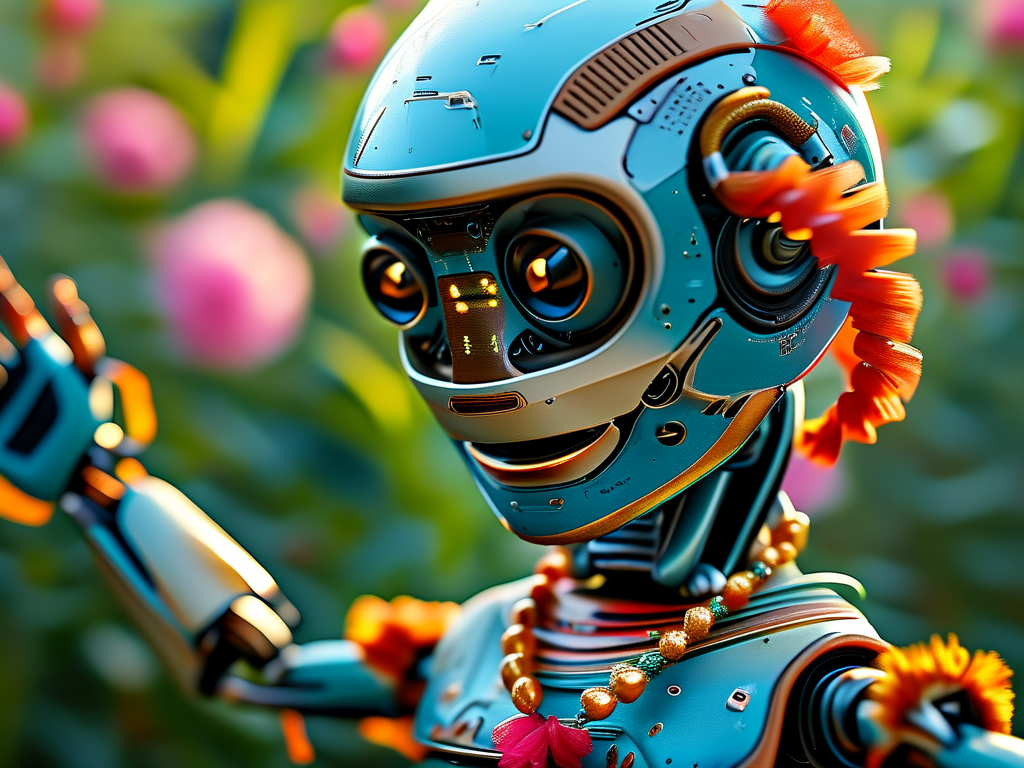

The fusion of traditional folk art and cutting-edge robotics has reached a new milestone with the emergence of robots capable of performing the Yangko dance, a vibrant Chinese folk dance known for its rhythmic movements and cultural significance. This article delves into the technical foundations enabling robots to replicate this dynamic art form, exploring the interplay of motion planning, sensor integration, and adaptive control systems.

Historical Context Meets Modern Engineering

Yangko dance, rooted in agricultural celebrations, features synchronized steps, spinning motions, and fan or handkerchief manipulations. Translating these fluid, human-like gestures into robotic actions requires addressing challenges such as balance maintenance, joint coordination, and real-time response to musical cues. Unlike industrial robots programmed for repetitive tasks, Yangko-performing machines must emulate organic flexibility while adhering to precise cultural choreography.

Core Technologies Behind the Movement


Kinematic Modeling and Trajectory Planning

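Engineers use inverse kinematics algorithms to calculate the joint angles that reproduce each dance posture. For instance, a robot's arm sweep during a fan maneuver requires solving for the shoulder, elbow, and wrist rotations that place the hand at the desired point. Motion-planning frameworks such as MoveIt (built on ROS) then help produce smooth transitions between poses while keeping the arm away from kinematic singularities.

```python
import numpy as np

# Link lengths in metres: upper arm, forearm, hand (illustrative values)
L1, L2, L3 = 0.30, 0.25, 0.10

# Simplified inverse kinematics example for arm positioning
def calculate_joint_angles(target_position):
    x, y, z = target_position
    # Solve for theta1, theta2, theta3 using geometric analysis
    theta1 = np.arctan2(y, x)  # shoulder rotation toward the target in the x-y plane
    distance = np.hypot(x, y)  # horizontal reach from shoulder to target
    # Law of cosines gives the interior elbow angle; clip so unreachable targets don't yield NaN
    cos_elbow = (L1**2 + L2**2 - distance**2) / (2 * L1 * L2)
    theta2 = np.arccos(np.clip(cos_elbow, -1.0, 1.0))
    theta3 = np.arcsin(np.clip(z / L3, -1.0, 1.0))  # wrist pitch to reach the target height
    return [theta1, theta2, theta3]
```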
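MoveIt's own planners and time-parameterization steps are considerably more involved, but the idea of a "smooth transition between poses" can be sketched with a minimum-jerk profile. The function below is a hypothetical helper, not part of MoveIt: it blends one joint configuration into another so that joint velocity and acceleration are zero at both ends, which avoids jolts when one dance pose hands off to the next.

```python
import numpy as np

def min_jerk_trajectory(q_start, q_end, duration, steps):
    """Interpolate joint angles from q_start to q_end with a minimum-jerk time profile.

    Returns (times, trajectory) where trajectory has shape (steps, num_joints).
    """
    q_start = np.asarray(q_start, dtype=float)
    q_end = np.asarray(q_end, dtype=float)
    t = np.linspace(0.0, duration, steps)
    tau = t / duration  # normalized time in [0, 1]
    # s(tau) = 10 tau^3 - 15 tau^4 + 6 tau^5 has zero slope and curvature at tau = 0 and 1
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return t, q_start + np.outer(s, q_end - q_start)

# Example: move a 3-joint arm between two fan-sweep poses (angles in radians) in 1.2 s
t, traj = min_jerk_trajectory([0.0, 0.0, 0.0], [0.5, 1.0, -0.3], duration=1.2, steps=61)
```

The quintic profile is a common textbook choice; a real planner would additionally respect per-joint velocity and acceleration limits.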

Dynamic Balance Control

Yangko's signature hops and spins demand real-time balance adjustments. Robots use inertial measurement units (IMUs) to track body orientation and angular velocity, and force-sensitive resistors (FSRs) in the feet to measure how load is distributed, which together let the controller estimate shifts in the center of mass. Hybrid controllers combining PID feedback with predictive models adjust motor torque to prevent falls during rapid changes of direction.
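The PID half of such a hybrid controller can be sketched on a toy model. The example below assumes a single-joint inverted pendulum with unit mass and length (so tilt acceleration is 9.81·sin(θ) plus the control torque), an IMU that reports the tilt angle directly, and hand-picked gains; none of these values come from a real robot, and the predictive part of the controller is not shown.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass
class PID:
    """Discrete PID controller (gains used below are illustrative, not tuned for hardware)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def simulate_balance(initial_tilt=0.1, dt=0.01, steps=300):
    """Toy inverted-pendulum model: theta'' = (g / l) * sin(theta) + u, with m = l = 1."""
    controller = PID(kp=50.0, ki=1.0, kd=10.0)
    theta, omega = initial_tilt, 0.0  # tilt in rad (as an IMU would report it) and tilt rate
    for _ in range(steps):
        u = controller.update(0.0 - theta, dt)  # setpoint: upright
        omega += (9.81 * math.sin(theta) + u) * dt  # semi-implicit Euler integration
        theta += omega * dt
    return theta

# A 0.1 rad tilt, e.g. after landing a hop, is driven back toward upright within 3 s
final_tilt = simulate_balance()
```

The proportional gain must exceed the gravitational term (9.81 here) for the linearized loop to be stable at all; the derivative term supplies the damping.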

Music Synchronization

Audio processing modules analyze tempo and rhythm, mapping beat intervals to motion sequences. Machine learning models trained on Yangko music datasets enable robots to improvise movements while staying in sync with live performances.
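The beat-to-motion mapping can be sketched as follows, assuming an upstream beat tracker has already produced beat timestamps in seconds. The move names are hypothetical placeholders, and this rule-based scheduler does not attempt the learned improvisation described above; it only shows how beat intervals can set the timing of a motion sequence.

```python
from statistics import median

def estimate_bpm(beat_times):
    """Tempo from the median inter-beat interval (less sensitive to one mistimed beat than the mean)."""
    intervals = [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]
    return 60.0 / median(intervals)

def schedule_moves(beat_times, move_cycle):
    """Assign each beat interval one move from the cycle, stretched to fill that interval.

    Returns a list of (start_time, duration, move_name) tuples.
    """
    schedule = []
    for i, (start, end) in enumerate(zip(beat_times, beat_times[1:])):
        schedule.append((start, end - start, move_cycle[i % len(move_cycle)]))
    return schedule

beats = [0.0, 0.5, 1.0, 1.52, 2.0]  # seconds, as a beat tracker might report them
moves = ["step_left", "step_right", "fan_flourish"]  # hypothetical move library entries
bpm = estimate_bpm(beats)
schedule = schedule_moves(beats, moves)
```

Stretching each move to its own interval, rather than to the average tempo, keeps the robot aligned with small tempo drifts in a live performance.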

Sensor Fusion and Environmental Adaptation

Unexpected variables like uneven stages or audience interactions require adaptive systems. LiDAR and depth cameras create 3D stage maps, while reinforcement learning algorithms allow robots to modify step patterns dynamically. For example, if a robot detects a slippery surface, it might reduce spin velocity or widen its stance for stability.
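The slippery-surface example can be made concrete with a hand-written rule. This is a stand-in, not the reinforcement learning approach the paragraph describes: a learned policy might discover a similar mapping, but here the friction estimate is assumed to come from some upstream estimator, and the threshold and widening factor are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GaitParams:
    spin_velocity: float  # rad/s during a turn
    stance_width: float   # metres between the feet

def adapt_gait(nominal: GaitParams, friction: float,
               low_friction: float = 0.4, max_widen: float = 1.3) -> GaitParams:
    """Scale spin speed down and stance width up as the estimated floor friction drops.

    All thresholds are hypothetical placeholders, not values from a real robot.
    """
    if friction >= low_friction:
        return nominal  # surface grips well enough: keep the choreographed gait
    ratio = max(friction, 0.0) / low_friction  # 1.0 at the threshold, 0.0 on a frictionless floor
    return GaitParams(
        spin_velocity=nominal.spin_velocity * ratio,
        stance_width=nominal.stance_width * (1.0 + (max_widen - 1.0) * (1.0 - ratio)),
    )

nominal = GaitParams(spin_velocity=3.0, stance_width=0.20)
dry_floor = adapt_gait(nominal, friction=0.6)
slippery_floor = adapt_gait(nominal, friction=0.2)
```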

Cultural Authenticity in Robotic Choreography

Preserving the dance's essence involves collaboration with cultural experts. Motion capture systems record professional Yangko dancers, extracting keyframe data that informs robotic movement libraries. However, engineers must balance mechanical precision against artistic expression: overly rigid motions may lack the "human touch," while faithfully reproducing fast, fluid human movements can demand joint speeds and accelerations that strain the actuators.
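One way to picture the step from motion-capture keyframes to a robot trajectory is sketched below. It assumes the keyframes have already been converted to robot joint angles, clamps them to the robot's joint limits, resamples them at a control rate by linear interpolation, and reports the peak joint speed so it can be checked against an actuator rating. The function name, the limits, and the speed rating are all hypothetical.

```python
import numpy as np

def retarget_keyframes(key_times, key_angles, joint_min, joint_max, rate_hz=100):
    """Clamp keyframe joint angles to the robot's limits and resample at the control rate.

    key_angles has shape (num_keyframes, num_joints). Returns the sample times,
    the resampled trajectory, and the peak joint speed (rad/s) along it.
    """
    key_times = np.asarray(key_times, dtype=float)
    key_angles = np.clip(np.asarray(key_angles, dtype=float), joint_min, joint_max)
    num_samples = int(round((key_times[-1] - key_times[0]) * rate_hz)) + 1
    t = np.linspace(key_times[0], key_times[-1], num_samples)
    # Piecewise-linear interpolation of each joint between keyframes
    traj = np.column_stack([np.interp(t, key_times, key_angles[:, j])
                            for j in range(key_angles.shape[1])])
    velocity = np.diff(traj, axis=0) / np.diff(t)[:, None]
    return t, traj, float(np.max(np.abs(velocity)))

t, traj, peak = retarget_keyframes(
    key_times=[0.0, 0.5, 1.0],
    key_angles=[[0.0, 0.0], [1.0, 2.0], [0.2, -0.5]],  # second joint overshoots its limit
    joint_min=[-1.0, -1.0],
    joint_max=[1.0, 1.5],
)
MAX_JOINT_SPEED = 5.0  # hypothetical actuator rating, rad/s
within_limits = peak <= MAX_JOINT_SPEED
```

Linear interpolation keeps the example short; a smoother spline would look more fluid but can overshoot between keyframes, which is exactly the precision-versus-expression trade-off described above.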

Future Directions and Applications

Beyond entertainment, Yangko-capable robots demonstrate advancements in human-robot collaboration. The same technologies could enhance rehabilitation robotics (e.g., guiding patients through dance-based physical therapy) or disaster response robots navigating unstable terrain. Researchers are also exploring swarm robotics configurations where multiple robots perform coordinated Yangko routines, requiring decentralized communication protocols.
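The article does not name a specific protocol, but decentralized synchronization can be illustrated with a Kuramoto-style phase-coupling model, a well-known textbook example. In the sketch below, each robot is assumed to hear only its two neighbours in a ring and nudges its dance-cycle phase toward theirs; the ring topology, coupling gain, step frequency, and starting offsets are hypothetical.

```python
import numpy as np

def synchronize_phases(phases, coupling=1.0, step_freq_hz=0.5, dt=0.05, steps=400):
    """Each robot adjusts its phase using only its two ring neighbours (Kuramoto-style coupling)."""
    phases = np.array(phases, dtype=float)  # work on a copy
    omega = 2.0 * np.pi * step_freq_hz  # shared nominal step-cycle rate, rad/s
    for _ in range(steps):
        left, right = np.roll(phases, 1), np.roll(phases, -1)
        phases = phases + dt * (omega + coupling * (np.sin(left - phases) + np.sin(right - phases)))
    return phases

def order_parameter(phases):
    """1.0 means all robots are exactly in step; values near 0 mean no coherence."""
    return float(np.abs(np.mean(np.exp(1j * np.asarray(phases, dtype=float)))))

initial = [0.0, 0.4, 1.1, 0.2, 0.9, 1.4]  # radians, hypothetical start-up offsets of six robots
final = synchronize_phases(initial)
```

No robot ever sees the whole group, yet because the coupling is symmetric the swarm converges on a common phase; starting offsets that span less than half a cycle are enough to guarantee this for identical oscillators on a connected graph.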

In conclusion, robotic Yangko dance represents a groundbreaking intersection of heritage preservation and technological innovation. By solving unique challenges in dynamic motion control and sensory integration, engineers are not only replicating traditional art but also pushing the boundaries of what autonomous systems can achieve in unstructured environments.