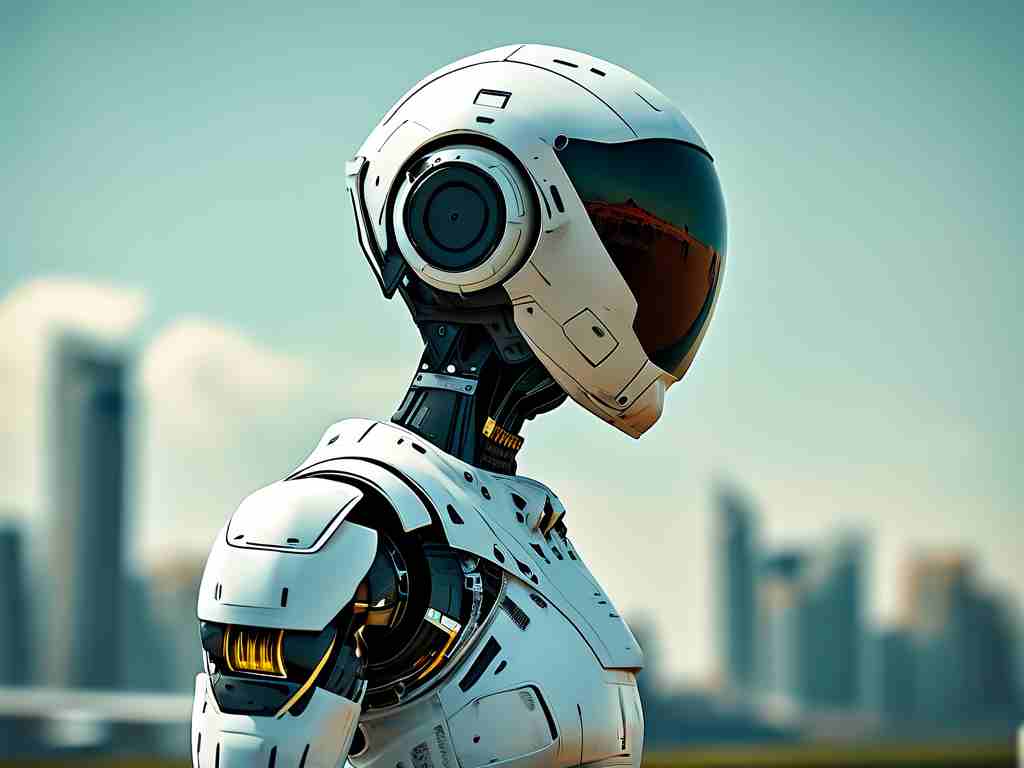

Virtual-real integration technology in robotics merges physical robotic systems with digital simulations, augmented reality (AR), and artificial intelligence (AI). This hybrid paradigm lets robots operate with greater adaptability, precision, and contextual awareness. By bridging the gap between virtual models and real-world execution, it is changing how robots are used in industries ranging from manufacturing to healthcare.

Core Technical Principles

- Sensor Fusion and Data Synchronization: At the heart of virtual-real integration is the seamless synchronization of data between physical robots and their digital counterparts. Advanced sensors (e.g., LiDAR, cameras, and inertial measurement units) collect real-time environmental data. This information is fed into virtual models, which simulate scenarios, predict outcomes, and refine robot behavior. Machine learning algorithms process these datasets to optimize decision-making in dynamic environments.

- Digital Twin Architecture: A digital twin, a high-fidelity virtual replica of a physical robot, acts as the backbone of this technology. Through continuous data exchange, the twin mirrors the robot's state, enabling predictive maintenance, performance testing, and scenario modeling. For example, in industrial settings, a robotic arm's digital twin can simulate assembly line adjustments before deploying changes to the physical system, minimizing downtime.

- AR/VR-Driven Interaction: Augmented and virtual reality interfaces allow humans to interact with robots in immersive environments. Operators wearing AR headsets can visualize a robot's planned path overlaid onto real-world workspaces or manipulate virtual controls to adjust parameters. This bidirectional interaction enhances collaboration, training, and remote operation.

- Edge-Cloud Computing Integration: Real-time processing demands are met through edge computing (localized data handling) paired with cloud-based analytics. Edge devices handle time-sensitive tasks like obstacle avoidance, while the cloud performs resource-intensive simulations and long-term learning. This distributed architecture ensures low latency and scalability.
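To make the principles above more concrete, here is a minimal Python sketch, not a reference implementation. It assumes a single joint angle as the robot's entire state. The names `fuse_angle`, `DigitalTwin`, and `route_task`, along with the filter weight and the 20 ms edge budget, are hypothetical choices made for illustration. The sketch fuses two sensor readings with a complementary filter, mirrors the result in a twin that can test a command virtually before it reaches the hardware, and routes work to the edge or the cloud by deadline.

```python
from dataclasses import dataclass, field


def fuse_angle(gyro_rate, accel_angle, prev_angle, dt, alpha=0.98):
    """Complementary filter: blend the integrated gyro rate with the
    accelerometer-derived angle. alpha is an assumed tuning weight."""
    return alpha * (prev_angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


@dataclass
class DigitalTwin:
    """Hypothetical twin that mirrors one joint angle of a physical robot."""
    angle: float = 0.0
    history: list = field(default_factory=list)

    def sync(self, fused_angle):
        # Mirror the latest fused estimate and log it for later analysis.
        self.angle = fused_angle
        self.history.append(fused_angle)

    def predict(self, commanded_rate, horizon):
        # Simulate a constant-rate move without touching the real robot.
        return self.angle + commanded_rate * horizon

    def is_safe(self, commanded_rate, horizon, limit):
        # Accept the command only if the simulated end angle stays in range.
        return abs(self.predict(commanded_rate, horizon)) <= limit


def route_task(deadline_ms, edge_budget_ms=20.0):
    # Time-critical work stays on the edge device; the rest goes to the cloud.
    return "edge" if deadline_ms <= edge_budget_ms else "cloud"


if __name__ == "__main__":
    twin = DigitalTwin()
    angle = fuse_angle(gyro_rate=0.1, accel_angle=0.02, prev_angle=0.0, dt=0.01)
    twin.sync(angle)
    print(twin.is_safe(commanded_rate=0.5, horizon=1.0, limit=1.0))
    print(route_task(deadline_ms=5), route_task(deadline_ms=500))
```

A real system would track a full pose, use a proper dynamics model in `predict`, and exchange state over a network rather than through direct method calls.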

Applications Across Industries

-

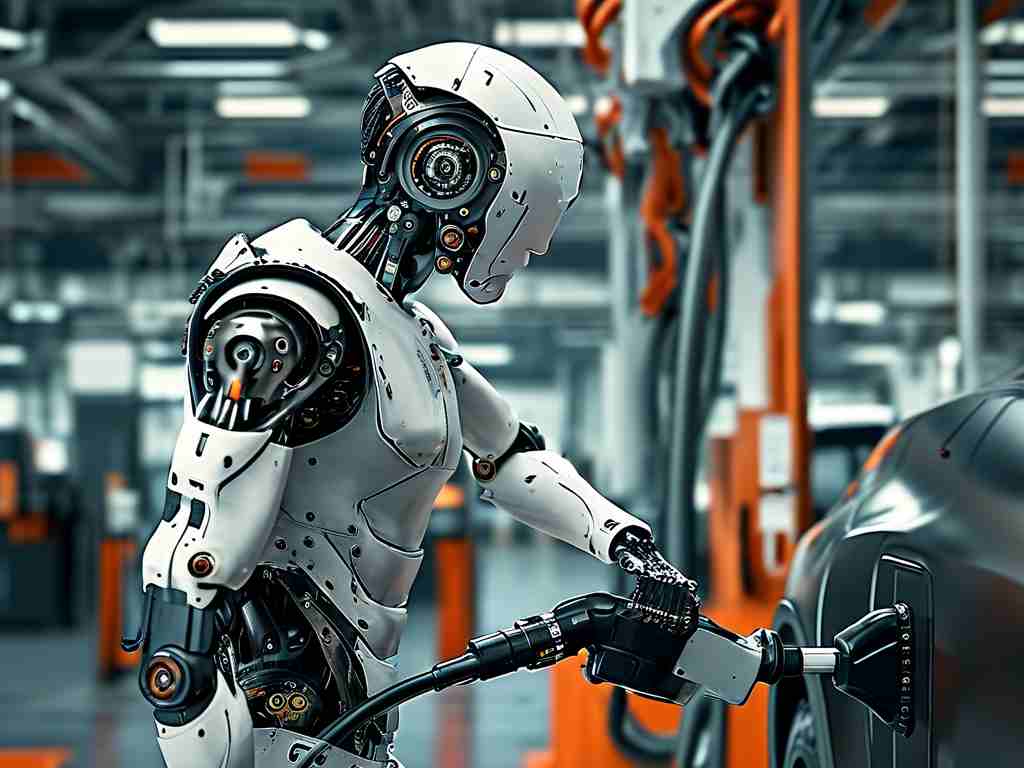

Smart Manufacturing: Companies like Tesla use virtual-real integration to simulate entire production lines. Robots pre-trained in virtual environments adapt instantly to real-world assembly tasks, reducing errors by 40% in some cases.

- Healthcare and Surgery: Surgical robots, such as the da Vinci System, leverage AR overlays to guide surgeons during procedures. Digital twins of patient anatomy enable preoperative planning, improving accuracy in complex operations.

- Autonomous Vehicles: Self-driving cars rely on virtual-real fusion to test navigation algorithms in simulated traffic scenarios before encountering real roads. This approach accelerates development cycles while ensuring safety.

- Service Robotics: Hotel service robots use AR to map dynamic environments (e.g., shifting furniture layouts) and update their paths in real time, enhancing efficiency in human-populated spaces.

Challenges and Future Directions

Despite its potential, virtual-real integration faces hurdles:

- Data Latency and Security: Milliseconds of delay can disrupt synchronization, requiring ultra-reliable 5G/6G networks. Additionally, securing bidirectional data flows against cyber threats remains critical.

- Computational Complexity: Balancing high-fidelity simulations with real-time performance demands breakthroughs in quantum computing and AI optimization.

- Ethical Considerations: As robots gain autonomy through virtual training, defining accountability for errors becomes legally and morally ambiguous.
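The latency concern in the list above can be illustrated with a small staleness guard. This is only a sketch: the function name `latest_fresh`, the `(timestamp_ms, value)` sample layout, and the 10 ms age limit are all assumptions, and it presumes the robot and twin share a synchronized clock. The idea is that the twin ignores any reading older than a fixed budget, so it never synchronizes against outdated data.

```python
def latest_fresh(samples, now_ms, max_age_ms=10):
    """Return the newest (timestamp_ms, value) pair that is at most
    max_age_ms old, or None if every sample is stale.

    Timestamps are integer milliseconds on an assumed shared clock."""
    fresh = [s for s in samples if 0 <= now_ms - s[0] <= max_age_ms]
    if not fresh:
        return None
    # Among the fresh samples, the largest timestamp is the most recent.
    return max(fresh, key=lambda s: s[0])


if __name__ == "__main__":
    readings = [(100, "pose_a"), (108, "pose_b"), (95, "pose_c")]
    print(latest_fresh(readings, now_ms=110))
    print(latest_fresh(readings, now_ms=130))
```

When the guard returns `None`, a caller could hold the last known state or fall back to a safe stop. Which fallback is appropriate depends on the application.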

Future advancements may include:

- Neuromorphic Engineering: Mimicking human neural architectures to enhance real-time learning.

- Haptic Feedback Integration: Adding touch-sensitive interfaces to AR/VR systems for richer human-robot collaboration.

- Self-Evolving Digital Twins: Models that autonomously update based on real-world performance data without human intervention.
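As a rough illustration of the self-evolving twin idea, the sketch below assumes the twin models an actuator with a single scalar gain (displacement = gain × command) and corrects that gain from observed motion using one gradient step per sample. The function name `update_gain`, the linear model, and the learning rate are hypothetical. A real twin would have many parameters and a more careful estimator.

```python
def update_gain(gain, command, observed, learning_rate=0.1):
    """One gradient step on the squared error between the twin's
    predicted displacement (gain * command) and the observed one."""
    predicted = gain * command
    error = observed - predicted
    return gain + learning_rate * error * command


if __name__ == "__main__":
    true_gain = 0.8   # assumed behavior of the physical actuator
    gain = 1.0        # the twin's initial, slightly wrong belief
    for _ in range(200):
        command = 1.0
        observed = true_gain * command   # stand-in for a real measurement
        gain = update_gain(gain, command, observed)
    print(round(gain, 6))
```

With noise-free observations and a unit command, each step shrinks the gain error by a constant factor, so the twin's parameter drifts toward the physical system's behavior without manual retuning.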

Virtual-real integration technology is redefining the boundaries of robotics, creating systems that learn, adapt, and collaborate with human-like intuition. By harmonizing physical actions with digital intelligence, this paradigm promises to unlock new frontiers in automation, precision, and human-machine synergy. As research progresses, its impact will extend beyond industrial applications into everyday life, reshaping how we perceive and interact with technology.