In the rapidly evolving landscape of digital imaging, the intersection of embedded development and photography software has emerged as a transformative force. By integrating specialized hardware with intelligent software algorithms, developers are redefining what is possible in image capture, processing, and optimization. This article explores the technical challenges, innovative solutions, and real-world applications driving this synergy.

The Role of Embedded Systems in Modern Photography

Embedded systems, characterized by their compact size, low power consumption, and real-time processing capabilities, are well suited to photography applications. Unlike traditional software running on general-purpose computers, embedded solutions are tailored to specific hardware configurations. Cameras in smartphones, drones, and IoT devices, for instance, rely on microcontrollers (MCUs) or system-on-chip (SoC) designs to handle tasks like autofocus, exposure adjustment, and noise reduction. Because these systems prioritize efficiency, they can support features such as burst shooting or 4K video recording without excessive battery drain.
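To make "exposure adjustment" concrete, here is a minimal sketch of an auto-exposure loop, written in Python as a stand-in for the C that would run on an actual MCU. It assumes the frame's mean 8-bit brightness is available and nudges the exposure time toward a target. The function names, the target of 118, the damping `gain`, and the exposure limits are illustrative assumptions, not values from any particular camera.

```python
def mean_luma(pixels):
    """Average 8-bit brightness of a frame (a flat list stands in for it)."""
    return sum(pixels) / len(pixels)


def next_exposure_us(current_us, measured, target=118.0, gain=0.5,
                     min_us=100, max_us=33_000):
    """Return the next exposure time in microseconds.

    target / measured says how far the frame is from the desired brightness;
    raising it to `gain` (0 < gain <= 1) applies only part of that correction
    per frame, which damps oscillation when the scene changes.
    """
    if measured <= 0:
        return max_us  # fully dark frame: open up as far as allowed
    proposed = current_us * (target / measured) ** gain
    # Clamp to the sensor's (assumed) exposure limits.
    return int(min(max(proposed, min_us), max_us))
```

For example, a frame averaging 59 at 1,000 µs is half as bright as the target, so with `gain=0.5` the next exposure becomes about 1,414 µs rather than jumping straight to 2,000 µs. The damping trades convergence speed for stability when lighting flickers.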

A key advantage of embedded development is fine-grained control over resource allocation. Arm Cortex-M microcontrollers, for example, appear in many low-power camera modules and vision boards, where they help manage the sensor data pipeline. By offloading compute-heavy tasks like image compression or facial recognition to dedicated hardware accelerators, developers reduce latency and power consumption. This hardware-software co-design approach helps photography software meet its real-time deadlines within the tight constraints of embedded environments.
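The idea of a sensor pipeline whose stages can move between CPU and accelerator can be sketched as plain, composable functions. This is a simplified illustration, not a real image signal processor: it assumes an RGGB Bayer layout and a black level of 64, and it uses 2x2 binning in place of proper demosaicing. All names are hypothetical.

```python
def subtract_black_level(raw, black=64):
    """Remove the sensor's fixed black-level offset, clipping at zero."""
    return [[max(v - black, 0) for v in row] for row in raw]


def bin_rggb_2x2(raw):
    """Collapse each 2x2 RGGB tile into one (R, G, B) pixel.

    Halves the resolution but needs no interpolation, which keeps it cheap.
    Odd trailing rows/columns are dropped.
    """
    out = []
    for y in range(0, len(raw) - 1, 2):
        row_out = []
        for x in range(0, len(raw[y]) - 1, 2):
            r = raw[y][x]
            g = (raw[y][x + 1] + raw[y + 1][x]) // 2  # average both greens
            b = raw[y + 1][x + 1]
            row_out.append((r, g, b))
        out.append(row_out)
    return out


def run_pipeline(raw, stages):
    """Apply each stage in order. Any stage could be swapped for an
    accelerator-backed function with the same input/output contract."""
    data = raw
    for stage in stages:
        data = stage(data)
    return data
```

Because every stage takes and returns plain data, a firmware team could replace `bin_rggb_2x2` with a call into a hardware block without touching the rest of the chain; that shared contract is what makes co-design practical.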

Overcoming Challenges in Embedded Photography Software

Developing photography software for embedded systems presents unique hurdles. One major challenge is balancing computational complexity against limited processing power. High-resolution image processing, such as HDR merging or AI-based scene detection, requires significant computational resources. To address this, developers employ techniques like algorithmic optimization, fixed-point arithmetic, and parallel processing. For instance, builds of the OpenCV library optimized for embedded platforms make real-time edge detection or object tracking feasible even on low-power devices.
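Fixed-point arithmetic replaces floating-point multiplies with integer multiplies and shifts, which matters on MCUs without a floating-point unit. The sketch below applies a gain (for example, a white-balance gain) to an 8-bit pixel in Q8.8 format. The format choice and function names are assumptions for illustration, and Python integers stand in for the fixed-width C types a device would use.

```python
FRAC_BITS = 8            # Q8.8: 8 fractional bits, so 1.0 is stored as 256
ONE = 1 << FRAC_BITS


def to_fixed(x):
    """Convert a float gain to Q8.8 (done once, e.g. at calibration time)."""
    return int(round(x * ONE))


def apply_gain_fixed(pixel, gain_q8):
    """Multiply an 8-bit pixel by a Q8.8 gain using only integer operations.

    Adding half an LSB before the shift rounds to nearest instead of
    truncating; the result saturates at 255 instead of wrapping around.
    """
    product = pixel * gain_q8                      # result in Q8.8
    rounded = (product + (ONE >> 1)) >> FRAC_BITS  # back to whole pixels
    return min(rounded, 255)
```

With a gain of 1.5 (384 in Q8.8), a pixel of 3 maps to 5 (4.5 rounded to nearest) and a pixel of 200 saturates at 255.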

Another critical consideration is memory management. Embedded devices often have limited RAM and storage, which makes large image files difficult to handle. Solutions include fast lossless compression algorithms (e.g., LZ4) and efficient buffer management strategies. Additionally, firmware-over-the-air (FOTA) updates let devices receive performance improvements without hardware replacement.
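One common buffer-management strategy is a fixed pool of preallocated frame buffers, so the capture path never allocates memory at run time. The following is a minimal sketch of that pattern; the class and method names are hypothetical, and a real driver may also need to coordinate buffer ownership with DMA hardware.

```python
class FramePool:
    """Fixed pool of preallocated frame buffers.

    All memory is reserved once at startup, so the capture path never calls
    the allocator. When every buffer is in use, acquire() returns None and
    the caller can drop the frame instead of exhausting RAM.
    """

    def __init__(self, count, frame_bytes):
        self._buffers = [bytearray(frame_bytes) for _ in range(count)]
        self._free = list(range(count))  # indices of buffers not in use

    def acquire(self):
        """Return (index, buffer) for a free buffer, or None if exhausted."""
        if not self._free:
            return None
        idx = self._free.pop()
        return idx, self._buffers[idx]

    def release(self, idx):
        """Give a buffer back once its frame has been consumed."""
        if not 0 <= idx < len(self._buffers) or idx in self._free:
            raise ValueError(f"invalid or double release of buffer {idx}")
        self._free.append(idx)

    def available(self):
        """Number of buffers currently free."""
        return len(self._free)
```

With `FramePool(3, 640 * 480)`, for instance, 921,600 bytes (900 KiB) of 8-bit grayscale frame memory is reserved once at startup; if all three buffers are busy, the capture loop can skip a frame rather than fail.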

AI and Machine Learning at the Edge

The integration of AI into embedded photography software has unlocked new capabilities. TinyML frameworks, such as TensorFlow Lite for Microcontrollers, allow neural networks to run directly on edge devices. This enables features like real-time subject tracking, automated composition adjustments, and low-light enhancement without relying on cloud servers. For example, a drone's camera can use on-board AI to identify obstacles or track moving subjects with minimal latency.
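Frameworks such as TensorFlow Lite for Microcontrollers typically run quantized models, in which each real value is stored as an 8-bit integer `q` with `real = (q - zero_point) * scale`. The sketch below shows that affine int8 mapping in plain Python. `params_for_range` is a hypothetical helper that derives the parameters from an observed value range; it is not a TensorFlow API.

```python
def params_for_range(lo, hi):
    """Hypothetical helper: pick (scale, zero_point) so that [lo, hi]
    maps onto the full int8 range [-128, 127].

    The range is widened to include 0.0 so that zero is exactly
    representable (useful for zero padding).
    """
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    if hi == lo:
        raise ValueError("range must be non-empty")
    scale = (hi - lo) / 255.0
    zero_point = int(round(-128 - lo / scale))
    return scale, zero_point


def quantize(x, scale, zero_point):
    """real -> int8: q = round(x / scale) + zero_point, clamped to int8."""
    q = int(round(x / scale)) + zero_point
    return max(-128, min(127, q))


def dequantize(q, scale, zero_point):
    """int8 -> real: (q - zero_point) * scale."""
    return (q - zero_point) * scale
```

For activations in [0, 1], `params_for_range(0.0, 1.0)` gives `scale = 1/255` and `zero_point = -128`, so 0.0 maps to -128 and 1.0 maps to 127. Storing 8-bit integers instead of 32-bit floats cuts memory for weights and activations by roughly a factor of four.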

Commercial cameras illustrate this trend. Sony's A7R IV mirrorless camera uses on-device, AI-based subject recognition for its Real-time Eye AF, which helps keep focus on a subject's eye even in challenging lighting conditions. Similarly, GoPro's HyperSmooth stabilization leverages custom hardware to analyze gyroscope motion data and apply corrections in real time. These advancements demonstrate how embedded processing transforms raw sensor data into professional-grade output.

Applications Across Industries

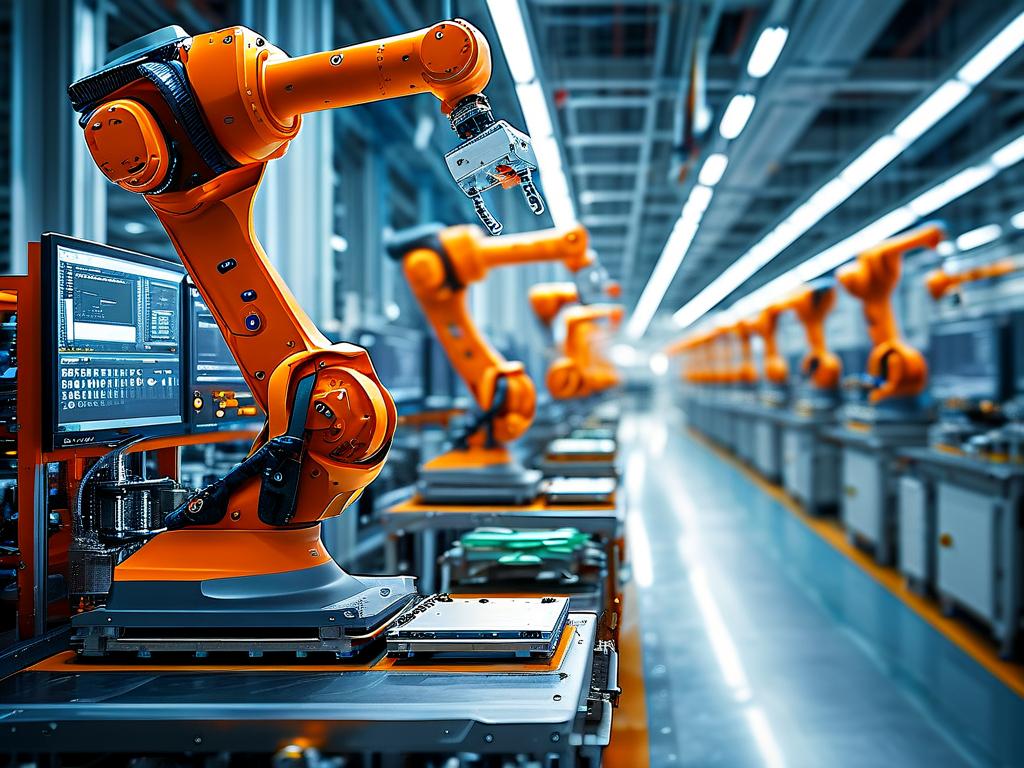

Embedded photography software is not limited to consumer electronics. In healthcare, endoscopic cameras with embedded systems provide real-time image enhancement during procedures, which can improve diagnostic accuracy. Industrial inspection tools use multispectral imaging and embedded algorithms to detect defects on manufacturing lines. Even automotive systems benefit: advanced driver-assistance systems (ADAS) rely on cameras with embedded software to interpret road conditions in real time.

A notable example is the use of Raspberry Pi-based embedded systems in DIY photography projects. Hobbyists can build custom timelapse rigs or astrophotography setups by pairing low-cost hardware with open-source software, using the Pi to control capture and editors like Darktable or GIMP to process the results. This democratization of technology underscores the versatility of embedded development.
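A timelapse rig mostly comes down to simple arithmetic and drift-free scheduling. The sketch below is one way to handle both. `capture` is a hypothetical callback (on a Pi it might wrap a capture library such as Picamera2), and the clock and sleep functions are injectable so the loop can be tested without waiting.

```python
import math
import time


def timelapse_plan(duration_s, interval_s, playback_fps=24):
    """Frames shot at t = 0, interval, 2*interval, ... while t < duration,
    plus the length in seconds of the resulting clip."""
    frames = math.ceil(duration_s / interval_s)
    return frames, frames / playback_fps


def run_timelapse(capture, frames, interval_s,
                  clock=time.monotonic, sleep=time.sleep):
    """Call capture(i) for each frame, scheduling every shot relative to the
    start time so that slow captures do not accumulate drift."""
    start = clock()
    for i in range(frames):
        delay = start + i * interval_s - clock()
        if delay > 0:
            sleep(delay)
        capture(i)  # hypothetical camera callback supplied by the caller
```

Shooting for two hours at one frame every 10 seconds, for example, yields 720 frames, or a 30-second clip at 24 fps. Scheduling each shot relative to the start time, instead of sleeping a fixed interval after each capture, keeps slow captures from adding up to visible drift over a long session.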

Future Trends and Ethical Considerations

As embedded photography software evolves, trends like computational photography and neuromorphic engineering are likely to gain traction. Computational photography techniques, such as synthetic aperture or light field imaging, require tight hardware-software integration to merge multiple exposures or perspectives into a single image. Neuromorphic chips, whose architecture is loosely inspired by the human brain, promise even greater efficiency for tasks like pattern recognition.
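Merging multiple exposures is the simplest of these techniques to illustrate. The sketch below is a simplified weighted-average HDR merge, assuming aligned frames, a linear sensor response (as with raw data), and pixel values normalized to [0, 1]. The weighting thresholds and function names are illustrative assumptions.

```python
def hat_weight(v, lo=0.05, hi=0.95):
    """Weight a normalized pixel value: highest for mid-tones, zero for
    near-black (noisy) or near-white (likely clipped) values."""
    if v <= lo or v >= hi:
        return 0.0
    return min(v - lo, hi - v)


def merge_exposures(frames, exposure_times):
    """Merge aligned, linear frames into relative scene radiance.

    frames: list of equally sized flat lists of values in [0, 1]
    exposure_times: one exposure time per frame (any consistent unit)
    """
    shortest = exposure_times.index(min(exposure_times))
    merged = []
    for p in range(len(frames[0])):
        num = den = 0.0
        for frame, t in zip(frames, exposure_times):
            w = hat_weight(frame[p])
            num += w * frame[p] / t  # value / time = radiance estimate
            den += w
        if den > 0.0:
            merged.append(num / den)
        else:
            # No well-exposed sample: fall back to the shortest exposure,
            # the one least likely to be clipped.
            merged.append(frames[shortest][p] / exposure_times[shortest])
    return merged
```

Dividing each value by its exposure time puts every frame on the same radiance scale, and the hat-shaped weight lets the short exposure supply the highlights while the long exposure supplies cleaner shadows.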

However, ethical concerns arise. Embedded systems in cameras raise questions about privacy, particularly with facial recognition capabilities. Developers must prioritize data security, implementing features like on-device encryption and user consent protocols. Regulatory frameworks will also play a role in ensuring responsible innovation.

The fusion of embedded development and photography software represents a paradigm shift in imaging technology. By harnessing the power of specialized hardware and intelligent algorithms, developers are creating devices that are faster, smarter, and more accessible. As this field advances, it will continue to push the boundaries of what cameras can achieve, transforming not only how we capture moments but also how we interact with the visual world.