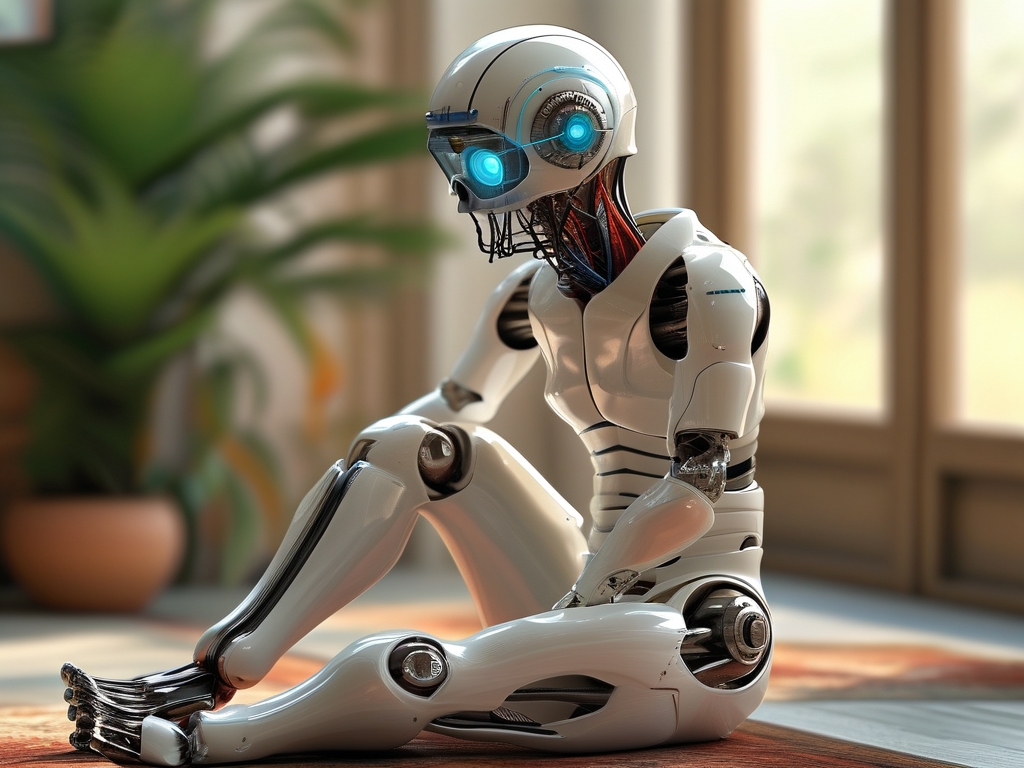

The integration of neural networks into prosthetic design represents a groundbreaking leap in biomedical engineering, offering unprecedented opportunities to restore mobility and functionality for amputees. By leveraging artificial intelligence (AI) and advanced sensor technologies, modern neural network-driven prosthetics are transforming static mechanical devices into dynamic, adaptive systems that mimic natural human movement. This article explores the principles, advancements, and future directions of neural network-based prosthetic design, highlighting its potential to redefine human-machine interaction.

The Evolution of Prosthetics: From Passive to Neural-Integrated Systems

Traditional prosthetics have long been limited by their passive nature, relying on rigid materials and basic mechanical joints. While these devices provided structural support, they lacked the ability to interpret user intent or adapt to complex environments. The emergence of neural networks has revolutionized this field by enabling prosthetics to process biological signals, learn user patterns, and execute movements with precision.

Neural networks, computational models loosely inspired by the human brain, excel at recognizing patterns in data. When applied to prosthetics, they analyze electromyographic (EMG) signals from residual muscles or neural impulses from peripheral nerves. These signals are decoded in real time to predict intended movements, such as grasping, walking, or rotating a wrist. For instance, a below-elbow amputee may be able to control individual fingers on a prosthetic hand by attempting the motion: the attempt activates residual forearm muscles, and a neural network trained on large sets of labeled movement recordings maps the resulting signals to finger commands.
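
To make the decoding step more concrete, the sketch below shows one common way raw EMG samples are turned into inputs for a classifier: the signal is cut into overlapping windows, and a few summary features are computed per window. The article does not say which features any particular device uses; the three here (mean absolute value, root mean square, zero-crossing count), the window sizes, and the synthetic signal are all assumptions chosen for illustration.

```python
import math

def window_features(samples):
    """Return (mean absolute value, RMS, zero-crossing count) for one window."""
    n = len(samples)
    mav = sum(abs(x) for x in samples) / n
    rms = math.sqrt(sum(x * x for x in samples) / n)
    # A zero crossing is a sign change between two consecutive samples.
    zero_crossings = sum(1 for a, b in zip(samples, samples[1:]) if a * b < 0)
    return mav, rms, zero_crossings

def sliding_windows(signal, size, step):
    """Yield overlapping windows of `size` samples, advancing by `step`."""
    for start in range(0, len(signal) - size + 1, step):
        yield signal[start:start + size]

# Synthetic stand-in for one EMG channel (not real data):
# a quiet resting phase followed by a stronger burst of activity.
rest = [0.01 * math.sin(0.9 * i) for i in range(200)]
burst = [0.5 * math.sin(0.9 * i) for i in range(200)]
signal = rest + burst

features = [window_features(w) for w in sliding_windows(signal, size=100, step=50)]
for mav, rms, zc in features:
    print(f"MAV={mav:.3f}  RMS={rms:.3f}  zero crossings={zc}")
```

In this toy signal the amplitude features rise sharply once the burst enters the window, which is the kind of change a downstream classifier would learn to associate with an intended movement.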

Key Components of Neural Network Prosthetic Systems

- Biosignal Acquisition: High-density EMG sensors or implanted neural interfaces capture electrical activity from muscles or nerves. These signals are amplified and then filtered to reduce noise.

- Machine Learning Models: Convolutional neural networks (CNNs) or recurrent neural networks (RNNs) process the biosignals to classify intended movements. Supervised learning techniques train these models using datasets of labeled movements.

- Actuation Mechanisms: Motorized joints and artificial tendons execute the predicted movements, often with variable stiffness to mimic natural biomechanics.

- Sensory Feedback Systems: Tactile sensors on the prosthetic transmit pressure or temperature data back to the user via haptic vibrations or neural stimulation, creating a closed-loop system.
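
The supervised-learning step in the list above can be sketched in a few lines. This is a minimal illustration, not the method of any specific device: a single-layer softmax classifier stands in for the CNNs or RNNs mentioned, the two input features are assumed to be normalized amplitudes from two hypothetical EMG channels, and the motion labels and training data are synthetic.

```python
import math
import random

MOTIONS = ["rest", "grasp", "wrist_rotate"]  # hypothetical label set

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(weights, biases, x):
    scores = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(weights, biases)]
    return softmax(scores)

def train(samples, labels, n_classes, epochs=200, lr=0.5):
    """Fit a softmax classifier with per-sample gradient descent."""
    n_features = len(samples[0])
    weights = [[0.0] * n_features for _ in range(n_classes)]
    biases = [0.0] * n_classes
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            probs = predict_proba(weights, biases, x)
            for k in range(n_classes):
                # Gradient of cross-entropy loss w.r.t. the class score.
                grad = probs[k] - (1.0 if k == y else 0.0)
                for j in range(n_features):
                    weights[k][j] -= lr * grad * x[j]
                biases[k] -= lr * grad
    return weights, biases

def classify(weights, biases, x):
    """Return the most likely motion and its probability."""
    probs = predict_proba(weights, biases, x)
    best = max(range(len(probs)), key=probs.__getitem__)
    return MOTIONS[best], probs[best]

# Synthetic labeled data: each motion clusters around an assumed center.
random.seed(0)
centers = {0: (0.1, 0.1), 1: (0.9, 0.2), 2: (0.2, 0.9)}
data = [([random.gauss(cx, 0.05), random.gauss(cy, 0.05)], label)
        for label, (cx, cy) in centers.items()
        for _ in range(30)]
random.shuffle(data)
samples = [x for x, _ in data]
labels = [y for _, y in data]

weights, biases = train(samples, labels, n_classes=len(MOTIONS))
motion, confidence = classify(weights, biases, [0.9, 0.2])
print(motion, round(confidence, 3))
```

A real controller would typically pass the predicted motion to the actuation stage only when the confidence exceeds some threshold, holding the current posture otherwise; that threshold is a design choice the article does not specify.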

A landmark example is the LUKE Arm, developed by DEKA Research and Development with DARPA funding; researchers at the University of Utah have used it to study nerve-signal control and sensory feedback. It is reported to translate residual nerve signals into 10 distinct hand and wrist motions, with 97% accuracy cited from clinical trials, although such figures depend heavily on the test protocol. Similarly, the Modular Prosthetic Limb from the Johns Hopkins University Applied Physics Laboratory is described as using deep learning to let users play piano or handle delicate objects.

Challenges in Neural Network Prosthetic Design

Despite rapid progress, several hurdles remain. Signal decoding accuracy depends heavily on the quality of biosignals, which can degrade due to muscle fatigue or electrode displacement. Neural networks also require large amounts of training data, which is hard to collect for users with unusual physiological conditions. Additionally, latency (the delay between intent and action) must be minimized to ensure seamless interaction. Current systems typically respond in 100–200 milliseconds, but researchers aim to bring this under 50 milliseconds for a near-instantaneous response.
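
One way to reason about the 50 millisecond target is to treat end-to-end latency as a sum of stage delays and see which stages dominate. The sketch below does only that arithmetic. The stage names and every millisecond value are hypothetical placeholders, not measurements from any device.

```python
def total_latency_ms(stages):
    """Sum the per-stage delays (all in milliseconds)."""
    return sum(stages.values())

def meets_target(stages, target_ms=50.0):
    return total_latency_ms(stages) <= target_ms

# Hypothetical budget: waiting for the next signal window to complete,
# computing features, running the model, and starting the motors.
baseline = {
    "window_wait": 25.0,
    "feature_extraction": 2.0,
    "inference": 8.0,
    "actuation": 30.0,
}

# Same pipeline with a shorter window step and faster actuators (assumed).
tuned = dict(baseline, window_wait=10.0, actuation=25.0)

for name, stages in (("baseline", baseline), ("tuned", tuned)):
    print(name, total_latency_ms(stages), "ms, meets target:", meets_target(stages))
```

With these assumed numbers the baseline totals 65 ms and misses the target, while the tuned budget totals 45 ms. The point is that window timing and actuation, not only model inference, can dominate the delay.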

Another critical issue is user adaptation. Prosthetics must “learn” individual user patterns while allowing for gradual adjustments as muscle strength or neural pathways change over time. Hybrid models combining reinforcement learning and user feedback loops are being tested to address this.
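
The idea of gradual adaptation can be illustrated with something much simpler than the hybrid reinforcement-learning models mentioned above. The sketch below is not reinforcement learning: it is a nearest-centroid decoder whose class centers drift toward new samples whenever the user confirms the intended motion. The class name, the update rate, the feature values, and the source of the confirmed labels are all assumptions.

```python
import math

class AdaptiveCentroidDecoder:
    """Nearest-centroid classifier with running-average adaptation."""

    def __init__(self, centroids, rate=0.1):
        # Copy so the caller's data is not modified by later updates.
        self.centroids = {label: list(c) for label, c in centroids.items()}
        self.rate = rate

    def predict(self, x):
        return min(self.centroids,
                   key=lambda label: math.dist(self.centroids[label], x))

    def update(self, x, confirmed_label):
        """Nudge the confirmed class center a fraction `rate` toward x."""
        center = self.centroids[confirmed_label]
        for i, xi in enumerate(x):
            center[i] += self.rate * (xi - center[i])

decoder = AdaptiveCentroidDecoder({"rest": (0.1, 0.1), "grasp": (0.9, 0.2)})

# Simulated fatigue: the user's grasp signal has weakened (assumed values).
fatigued_grasp = (0.45, 0.15)
before = decoder.predict(fatigued_grasp)

# The user confirms "grasp" on 20 attempts, e.g. through a feedback prompt.
for _ in range(20):
    decoder.update(fatigued_grasp, "grasp")
after = decoder.predict(fatigued_grasp)

print(before, "->", after)
```

Before adaptation the weakened signal sits closer to the rest center and is misread; after the confirmed updates the grasp center has moved and the same signal decodes as a grasp. A small update rate keeps the change gradual, so a few noisy samples do not overwrite what the decoder has already learned.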

Ethical and Accessibility Considerations

The high cost of neural network prosthetics—often exceeding $50,000—limits accessibility. Efforts to democratize these technologies include open-source AI frameworks and 3D-printed modular components. Ethical debates also surround the potential for neural data misuse, necessitating robust encryption and informed consent protocols.

Future Directions: Merging Mind and Machine

The next frontier involves direct brain-machine interfaces (BMIs). Projects like Neuralink aim to implant micron-scale electrodes in the brain to capture motor cortex signals with unparalleled resolution. When paired with neural networks, BMIs could enable prosthetics to replicate complex movements like dancing or typing without requiring residual muscle signals.

Advancements in materials science, such as self-healing polymers and stretchable electronics, could further improve durability and comfort. More speculatively, quantum computing has been proposed as a way to accelerate neural network training, though its practical benefit for making personalized prosthetics faster and more affordable remains unproven.

Neural network-enhanced prosthetics are not merely tools but extensions of the human body, blurring the line between biology and technology. By addressing current limitations and prioritizing ethical innovation, this field promises to empower millions of amputees with lifelike mobility and autonomy. As AI continues to evolve, the dream of fully integrated bionic limbs—responsive, adaptive, and indistinguishable from natural limbs—edges closer to reality.