The rapid evolution of indoor robotics has been propelled by advancements in environment sensing technologies, enabling machines to navigate, interact, and adapt within complex human-centric spaces. Unlike traditional industrial robots confined to structured settings, modern indoor robots must interpret dynamic environments—ranging from cluttered living rooms to bustling hospital corridors. This article explores the core technologies driving this transformation, their applications, and emerging challenges.

Foundations of Indoor Environment Perception

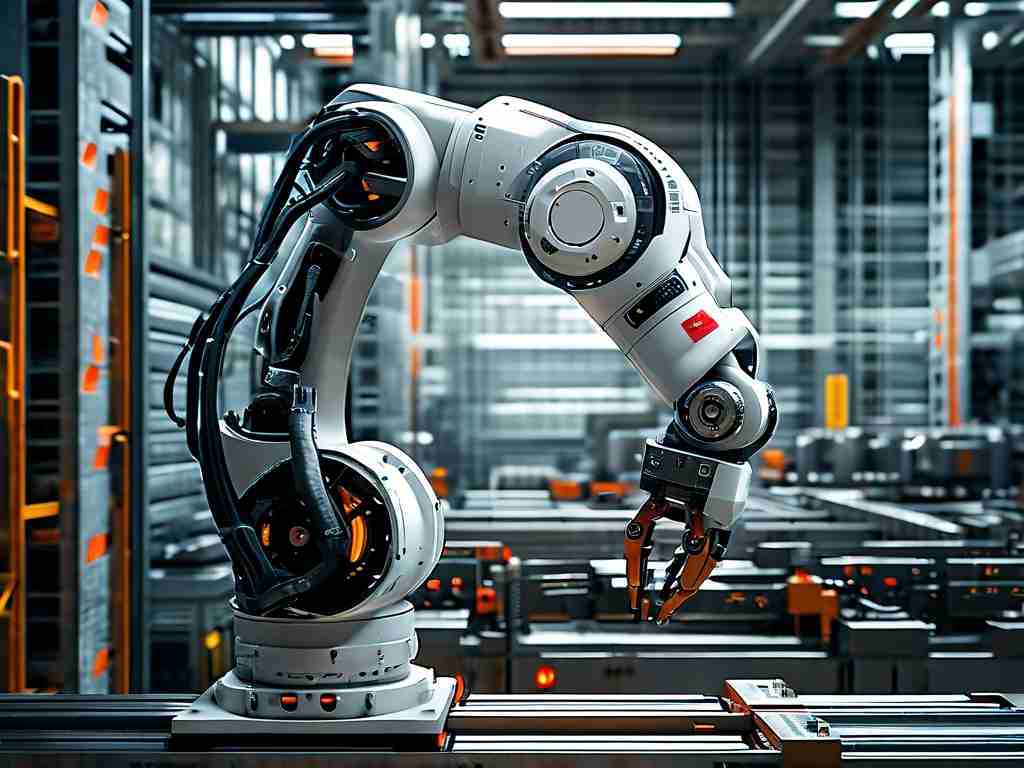

At the heart of indoor robotic perception lies sensor fusion: combining lidar, cameras, ultrasonic sensors, and inertial measurement units (IMUs) into a single picture of the robot's surroundings. Lidar provides high-resolution 3D range data for mapping, while RGB-D cameras supply color and depth data for object recognition. SLAM (Simultaneous Localization and Mapping) pipelines built on ROS (the Robot Operating System), for instance, integrate these inputs to build spatial models in real time while tracking the robot's position within them. A 2023 study by Carnegie Mellon University's Robotics Institute demonstrated that hybrid systems combining lidar with thermal imaging reduced navigation errors in low-light conditions by 42%.
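
The simplest form of sensor fusion combines independent estimates of the same quantity, weighting each by how much it can be trusted. The sketch below shows inverse-variance weighting of a lidar and an ultrasonic range reading, which is the optimal linear combination for independent Gaussian errors. It is not SLAM itself (full SLAM stacks rely on Kalman filters, particle filters, or graph optimization), and the noise figures are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    """A single distance estimate in metres with its noise variance (m^2)."""
    distance: float
    variance: float


def fuse_readings(readings: list[RangeReading]) -> RangeReading:
    """Fuse independent Gaussian estimates by inverse-variance weighting.

    Each reading is weighted by 1 / variance, so precise sensors dominate,
    and the fused variance is smaller than any single input variance.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    distance = sum(w * r.distance for w, r in zip(weights, readings)) / total
    return RangeReading(distance=distance, variance=1.0 / total)


# Hypothetical noise figures: the lidar is far more precise than the sonar.
lidar = RangeReading(distance=2.00, variance=0.0001)  # about 1 cm std dev
sonar = RangeReading(distance=2.10, variance=0.0100)  # about 10 cm std dev
fused = fuse_readings([lidar, sonar])
print(f"{fused.distance:.3f} m, variance {fused.variance:.6f}")  # → 2.001 m, variance 0.000099
```

The fused distance sits almost on the lidar value, yet the sonar still tightens the uncertainty slightly; that is the behavior fusion is meant to deliver when one sensor is much noisier than another.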

Overcoming Dynamic Obstacles

One critical challenge is detecting transient objects, such as moving people or pets, without relying on preprogrammed maps, which cannot capture them. Modern solutions employ convolutional neural networks (CNNs) trained on datasets like COCO to detect and classify obstacles. Amazon's Astro home robot is one example: its edge-computing system processes camera feeds on the device itself, avoiding collisions while keeping video local for privacy. Researchers at MIT have extended this approach with motion prediction, using Bayesian probabilistic models that let robots anticipate pedestrian paths.
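
The source does not describe the MIT models, so as a generic stand-in, here is a minimal sketch of one widely used Bayesian tracker: a constant-velocity Kalman filter that turns successive detections of a pedestrian into a position forecast with uncertainty. It assumes the CNN detector already yields a position along one floor axis (run one filter per axis for 2-D tracking), and the noise parameters `q` and `r` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ConstantVelocityKF:
    """1-D Kalman filter tracking [position, velocity] of one pedestrian
    along one axis. Run one instance per axis for 2-D floor coordinates."""

    q: float = 0.5    # hypothetical process-noise intensity (random acceleration)
    r: float = 0.05   # hypothetical detector position variance (m^2)
    x: list = field(default_factory=lambda: [0.0, 0.0])               # [m, m/s]
    P: list = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])  # covariance

    def _propagated_cov(self, dt: float) -> list:
        """Covariance after dt seconds of constant-velocity motion with
        white-noise acceleration: F P F^T + Q."""
        (a, b), (c, d) = self.P
        q = self.q
        return [
            [a + dt * (b + c) + dt * dt * d + q * dt**3 / 3, b + dt * d + q * dt**2 / 2],
            [c + dt * d + q * dt**2 / 2, d + q * dt],
        ]

    def predict(self, dt: float) -> None:
        """Prior step: move the belief forward in time."""
        p, v = self.x
        self.P = self._propagated_cov(dt)
        self.x = [p + v * dt, v]

    def update(self, z: float) -> None:
        """Posterior step: fuse a position measurement z from the detector."""
        (a, b), (c, d) = self.P
        s = a + self.r              # innovation variance
        k0, k1 = a / s, c / s       # Kalman gain for position and velocity
        y = z - self.x[0]           # innovation: measured minus predicted
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]

    def forecast(self, horizon: float) -> tuple:
        """Predicted position mean and variance `horizon` seconds ahead,
        leaving the filter state untouched."""
        p, v = self.x
        return p + v * horizon, self._propagated_cov(horizon)[0][0]


# Usage: noise-free detections of someone walking at 1.2 m/s, sampled at 10 Hz.
kf = ConstantVelocityKF()
dt = 0.1
for k in range(1, 101):
    kf.predict(dt)
    kf.update(1.2 * k * dt)
mean, var = kf.forecast(2.0)
print(f"velocity {kf.x[1]:.2f} m/s, position in 2 s: {mean:.2f} m (var {var:.3f})")
```

The forecast variance is what makes the prediction useful for planning: a robot can keep clear of the region where the pedestrian is likely to be, not just the single most likely point.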

Low-Power Optimization for Sustained Operation

Energy efficiency remains a bottleneck for battery-powered indoor robots. Event-based vision sensors, whose pixels respond only to changes in brightness instead of capturing full frames at a fixed rate, have reduced power consumption by up to 60%. The Swiss company BlueBotics recently showcased a vacuum robot using this technology, achieving an 8-hour runtime on a single charge. Neuromorphic computing chips, which mimic biological neural networks, further enable real-time data processing with minimal energy expenditure.
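
To illustrate why event sensors produce so little data from a mostly static scene, the sketch below emulates one from two ordinary grayscale frames: it emits an ON or OFF event only where the log intensity changed by more than a threshold. This is a simplification under stated assumptions. Real sensors do this asynchronously in each pixel's circuitry and compare against the level at that pixel's last event rather than the previous frame, and the threshold here is a hypothetical value.

```python
import math


def frames_to_events(prev, curr, threshold=0.2, eps=1e-3):
    """Emulate an event sensor from two grayscale frames (values in [0, 1]).

    Emits (row, col, polarity) only for pixels whose log intensity changed by
    at least `threshold`; unchanged pixels produce no output at all.
    """
    events = []
    for r, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for c, (before, after) in enumerate(zip(row_prev, row_curr)):
            # eps avoids log(0) for fully dark pixels
            delta = math.log(after + eps) - math.log(before + eps)
            if delta >= threshold:
                events.append((r, c, 1))    # brighter: ON event
            elif delta <= -threshold:
                events.append((r, c, -1))   # darker: OFF event
    return events


# Usage: a static 4x4 scene in which only two pixels change.
prev = [[0.5] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
curr[1][2] = 0.9   # something bright moves into view
curr[3][0] = 0.2   # a shadow falls
print(frames_to_events(prev, curr))  # → [(1, 2, 1), (3, 0, -1)]
```

Sixteen pixels yield two events; downstream processing that only touches events does proportionally less work, which is where the power savings come from.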

Case Study: Healthcare Robotics

In hospitals, environment perception is mission-critical. The Aethon TUG robot, deployed in over 500 facilities, combines RFID tags and ultrasonic sensors to navigate while transporting medications. Newer systems such as Diligent's Moxi go further, using semantic segmentation to distinguish open doors, occupied beds, and medical equipment. During trials at Johns Hopkins Hospital, Moxi achieved 98% accuracy in avoiding unexpected obstacles during emergency scenarios.
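
Semantic segmentation becomes useful for navigation once its per-pixel labels are turned into something a planner can consume. The sketch below shows one common pattern: mapping class labels (assumed already projected onto a floor grid) to traversal costs. It does not reflect Moxi's actual pipeline, which the source does not describe; the class IDs, cost values, and function names are all hypothetical.

```python
# Hypothetical class IDs a segmentation network might assign to floor cells.
FLOOR, OPEN_DOOR, OCCUPIED_BED, EQUIPMENT = range(4)

# Hypothetical traversal costs; None marks cells the planner must not enter.
CLASS_COST = {FLOOR: 1, OPEN_DOOR: 2, OCCUPIED_BED: None, EQUIPMENT: None}


def labels_to_costmap(labels):
    """Convert a 2-D grid of class IDs into traversal costs.

    Classes missing from CLASS_COST are treated as blocked (None), so an
    unrecognized object is avoided rather than driven into.
    """
    return [[CLASS_COST.get(label) for label in row] for row in labels]


def blocked_cells(costmap):
    """List (row, col) of every cell the planner must avoid."""
    return [
        (r, c)
        for r, row in enumerate(costmap)
        for c, cost in enumerate(row)
        if cost is None
    ]


# Usage: a 2x3 patch of corridor in front of the robot.
labels = [
    [FLOOR, FLOOR, OPEN_DOOR],
    [FLOOR, OCCUPIED_BED, EQUIPMENT],
]
costmap = labels_to_costmap(labels)
print(costmap)                 # → [[1, 1, 2], [1, None, None]]
print(blocked_cells(costmap))  # → [(1, 1), (1, 2)]
```

Defaulting unknown classes to "blocked" is a deliberately conservative choice for a hospital setting, where misclassifying equipment as free space is far costlier than an unnecessary detour.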

Ethical and Technical Hurdles

Despite this progress, issues persist. Sensor occlusion in crowded spaces remains problematic, as seen in warehouse robots that struggle to perceive around stacked inventory. Cameras also raise privacy concerns when they operate in sensitive environments. A 2024 IEEE report highlighted differential privacy algorithms as a potential fix: they add calibrated noise at the sensor level so that released data reveals little about any individual. Meanwhile, multimodal large language models (LLMs) are being tested for intuitive human-robot communication, though latency remains an issue.
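
The source does not say which algorithms the IEEE report examined, but the textbook way to release a numeric reading under epsilon-differential privacy is the Laplace mechanism. The sketch below assumes a hypothetical scenario in which a robot reports how many people it sees in a room instead of raw images. Because one person changes the count by at most 1 (sensitivity 1), adding Laplace noise with scale 1/epsilon satisfies the definition for a single release.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a person count under epsilon-differential privacy.

    Adding or removing one person changes the count by at most 1, so the
    query's sensitivity is 1 and the Laplace scale is sensitivity / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Usage: smaller epsilon means stronger privacy and noisier readings.
rng = random.Random(0)
print(round(private_count(7, epsilon=0.5, rng=rng), 2))
```

Each additional release spends more of the privacy budget, so a real deployment would also need to limit or account for repeated queries; this sketch covers only a single reading.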

Future Directions

The next frontier involves “context-aware” perception, in which robots infer unspoken rules, such as staying out of the kitchen during meal preparation. Projects like Toyota’s Human Support Robot (HSR) are experimenting with federated learning, in which each device trains on its own environmental data locally and shares only model updates, so insights can be pooled across devices without sharing raw sensor data. As 5G mmWave networks roll out, real-time cloud-based processing could take over the heaviest computation, allowing lighter onboard hardware.
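
The aggregation step at the core of federated learning can be shown in a few lines. The sketch below implements federated averaging (FedAvg), a standard baseline that weights each robot's locally trained parameters by how many examples it trained on. How the HSR project actually aggregates updates is not stated in the source; representing a model as a flat list of floats and the function name are assumptions made for illustration.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg aggregation step.

    Averages each model parameter across clients, weighted by the number of
    local training examples. Only parameters reach the server; the raw sensor
    data each robot trained on never leaves it.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one size per client and at least one client")
    n_params = len(client_weights[0])
    if any(len(w) != n_params for w in client_weights):
        raise ValueError("all clients must share the same parameter layout")
    total = sum(client_sizes)
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Usage: three robots with hypothetical two-parameter models.
robots = [[1.0, 0.0], [3.0, 2.0], [2.0, 4.0]]
examples = [100, 300, 100]
print(federated_average(robots, examples))  # → [2.4, 2.0]
```

The server would send the averaged parameters back to every robot for the next round of local training; weighting by example count keeps a robot with little data from pulling the shared model as hard as one with a lot.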

In summary, indoor robot environment sensing is a multidisciplinary effort to balance accuracy, efficiency, and ethics. With breakthroughs in AI-driven sensor fusion and edge computing, robots that coexist seamlessly with humans in homes and workplaces are closer than ever.