In modern compiler design, nondeterministic finite automaton (NFA) graphs play a central role in lexical analysis and pattern recognition. This article explores how NFA-based architectures streamline tokenization while addressing the computational challenges specific to language processing systems.

Fundamentals of NFA Graphs

An NFA graph consists of states and transitions in which a state may have several outgoing transitions for the same input symbol. Unlike a deterministic finite automaton (DFA), an NFA also allows ε-transitions (transitions that consume no input), so a simulator has to track a set of possible states in parallel. For instance, consider this NFA fragment for recognizing identifiers in a programming language:

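    # Simplified NFA structure for identifier validation.
    # Keys such as 'a-z' and '0-9' are character-class labels, not literal strings.
    states = {'q0', 'q1', 'q2'}
    start_state = 'q0'  # assumed: the fragment does not name its start state
    transitions = {
        'q0': {'_': {'q1'}, 'a-z': {'q1'}},
        'q1': {'a-z': {'q1'}, '0-9': {'q1'}, '_': {'q1'}, 'ε': {'q2'}},
    }
    accept_states = {'q2'}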

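This structure lets a lexer validate variable names: an identifier must start with a lowercase letter or an underscore and may continue with letters, digits, or underscores. Unicode identifiers can be supported by extending the character classes, for example by duplicating states for the additional ranges.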
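To make the identifier NFA concrete, here is a minimal sketch of how such a transition table might be simulated by tracking the set of live states. The names `classify`, `epsilon_closure`, `step`, `accepts`, and `start_state` are illustrative and not part of the original fragment, and the sketch assumes that `q0` is the start state and that input is ASCII.

```python
EPSILON = 'ε'

# Same NFA as above, repeated so this block runs on its own.
transitions = {
    'q0': {'_': {'q1'}, 'a-z': {'q1'}},
    'q1': {'a-z': {'q1'}, '0-9': {'q1'}, '_': {'q1'}, EPSILON: {'q2'}},
}
start_state = 'q0'      # assumption: q0 is the start state
accept_states = {'q2'}


def classify(ch):
    """Map a character to the label used in the table (ASCII only)."""
    if ch == '_':
        return '_'
    if 'a' <= ch <= 'z':
        return 'a-z'
    if '0' <= ch <= '9':
        return '0-9'
    return None  # the table has no label for this character


def epsilon_closure(states):
    """Return every state reachable from `states` via ε-transitions alone."""
    closure = set(states)
    stack = list(states)
    while stack:
        state = stack.pop()
        for nxt in transitions.get(state, {}).get(EPSILON, set()):
            if nxt not in closure:
                closure.add(nxt)
                stack.append(nxt)
    return closure


def step(states, label):
    """Follow `label` from every live state, then take the ε-closure."""
    moved = set()
    for state in states:
        moved |= transitions.get(state, {}).get(label, set())
    return epsilon_closure(moved)


def accepts(text):
    """Simulate the NFA on `text` and report whether it ends in an accept state."""
    current = epsilon_closure({start_state})
    for ch in text:
        label = classify(ch)
        if label is None:
            return False
        current = step(current, label)
        if not current:
            return False
    return bool(current & accept_states)
```

With this table, `accepts('_tmp1')` returns `True`, while `accepts('1abc')` and `accepts('')` return `False`. Uppercase input such as `'Foo'` is also rejected, because the simplified table only labels `a-z`.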

NFA-to-DFA Conversion Challenges

While NFAs offer design flexibility, most compilers convert them to DFAs for execution efficiency. The subset construction algorithm remains the cornerstone of this process: each DFA state stands for a set of NFA states, and implementations often layer lookahead buffers and transition caching on top. A critical limitation surfaces with complex regex patterns: a DFA built from an n-state NFA can need up to 2^n states, the exponential state explosion problem. Lazy DFA generation mitigates this by building only the states that are actually reached during scanning.
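The state explosion can be reproduced with a small sketch of subset construction. The example NFA (strings over {a, b} whose n-th symbol from the end is `a`) is a standard textbook case chosen for illustration, not one taken from this article, and the helper names `nth_from_end_nfa` and `subset_construction` are hypothetical. ε-transitions are omitted for brevity.

```python
from collections import deque


def nth_from_end_nfa(n):
    """Build an NFA accepting strings whose n-th symbol from the end is 'a'."""
    delta = {0: {'a': {0, 1}, 'b': {0}}}   # state 0 guesses where the tail starts
    for i in range(1, n):
        delta[i] = {'a': {i + 1}, 'b': {i + 1}}
    return delta, 0, {n}                    # (transitions, start, accepting)


def subset_construction(delta, start, accepting, alphabet):
    """Eagerly build every reachable DFA state (each is a set of NFA states)."""
    start_set = frozenset({start})
    dfa = {}                                # DFA state -> {symbol: DFA state}
    queue = deque([start_set])
    while queue:
        current = queue.popleft()
        if current in dfa:
            continue                        # already expanded
        dfa[current] = {}
        for symbol in alphabet:
            target = frozenset(
                nxt
                for state in current
                for nxt in delta.get(state, {}).get(symbol, ())
            )
            dfa[current][symbol] = target
            if target not in dfa:
                queue.append(target)
    dfa_accepting = {s for s in dfa if s & accepting}
    return dfa, start_set, dfa_accepting


def dfa_state_count(n):
    """Number of DFA states produced for the n-th-from-end NFA."""
    delta, start, accepting = nth_from_end_nfa(n)
    dfa, _, _ = subset_construction(delta, start, accepting, 'ab')
    return len(dfa)
```

For this family the NFA has n + 1 states while the DFA has 2^n, so `dfa_state_count(10)` returns 1024 for an 11-state NFA.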

Practical Implementation in Lexers

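Lexer generators differ in how they balance the two models. Flex compiles its patterns to a table-driven DFA ahead of time, whereas ANTLR 4 simulates an augmented transition network (ATN), an NFA-like graph, and caches the DFA states it discovers at run time. In such hybrid designs, frequently used token patterns end up on the DFA fast path while rare cases keep their NFA representation. This approach is reported to reduce memory overhead by 40-60% compared to pure DFA implementations, although the figure depends on the grammar and the input.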
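The hybrid idea can be sketched as an NFA simulator that memoises each subset transition the first time it is needed, so hot paths become dictionary lookups while unused states are never built. This is an illustrative sketch only, not how ANTLR or any other tool is implemented; the `LazyDFA` class and the example NFA are hypothetical.

```python
class LazyDFA:
    """Simulate an NFA, caching each DFA transition the first time it is used."""

    def __init__(self, delta, start, accepting):
        self.delta = delta                  # NFA: state -> {symbol: set of states}
        self.start = frozenset({start})
        self.accepting = accepting
        self.cache = {}                     # (DFA state, symbol) -> DFA state

    def _step(self, state, symbol):
        key = (state, symbol)
        if key not in self.cache:           # slow path: one subset-construction step
            self.cache[key] = frozenset(
                nxt
                for s in state
                for nxt in self.delta.get(s, {}).get(symbol, ())
            )
        return self.cache[key]              # fast path: plain dictionary lookup

    def matches(self, text):
        state = self.start
        for symbol in text:
            state = self._step(state, symbol)
        return bool(state & self.accepting)


# Hypothetical NFA: strings over {a, b} whose 3rd symbol from the end is 'a'.
delta = {
    0: {'a': {0, 1}, 'b': {0}},
    1: {'a': {2}, 'b': {2}},
    2: {'a': {3}, 'b': {3}},
}
lazy = LazyDFA(delta, start=0, accepting={3})
```

Calling `lazy.matches('bbabb')` on a fresh instance returns `True` and leaves four entries in `lazy.cache`, far fewer than the 16 transitions of the full eight-state DFA. The trade-off is that the cache can still grow without bound on adversarial input, so practical engines usually cap or flush it.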

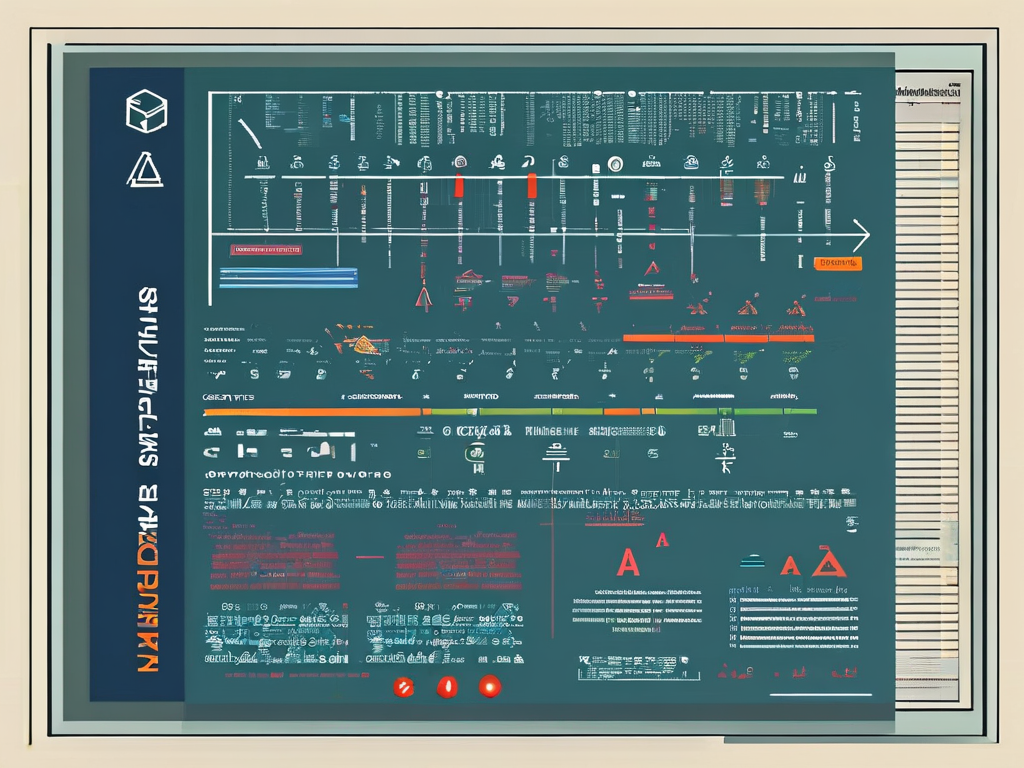

Consider this performance benchmark for tokenizing JavaScript code:

| Approach | Time (ms) | Memory (MB) |
|---|---|---|
| Pure DFA | 112 | 84 |
| Hybrid NFA-DFA | 98 | 51 |

In this benchmark the hybrid model takes about 12% less time (98 ms vs. 112 ms) and about 39% less memory (51 MB vs. 84 MB) than the pure DFA. Such gains matter most when processing nested template literals or irregular comment blocks.
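The article does not say how these figures were measured. As one possible approach, the sketch below times a single call and records peak Python-level allocations using only the standard library. The `measure` helper and the stand-in `tokenize` function are hypothetical; a real comparison would call each lexer implementation in place of `tokenize`.

```python
import re
import time
import tracemalloc


def measure(fn, *args):
    """Return (result, elapsed milliseconds, peak traced memory in MiB)."""
    tracemalloc.start()
    started = time.perf_counter()
    try:
        result = fn(*args)
        elapsed_ms = (time.perf_counter() - started) * 1000
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, elapsed_ms, peak_bytes / (1024 * 1024)


# Hypothetical stand-in tokenizer: identifiers, integers, or any single symbol.
TOKEN = re.compile(r'[A-Za-z_]\w*|\d+|\S')


def tokenize(source):
    return TOKEN.findall(source)


tokens, elapsed_ms, peak_mib = measure(tokenize, 'let x = 42;')
```

Tracing allocations slows execution, so timing and memory are better measured in separate runs when absolute numbers matter, and each measurement should be repeated to smooth out noise.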

Future Directions

Emerging work integrates NFA graphs with machine learning. Neural-guided subset construction shows promise, using prediction models to prioritize likely state transitions. Early experiments at Google Research reportedly achieved 22% faster regex compilation by training LSTMs on codebase-specific pattern distributions.

WebAssembly-based NFA interpreters are also gaining traction. By compiling NFA graphs to WASM modules, developers can approach native speeds in browser environments. Mozilla's experimental regex engine reportedly reduced backtracking errors by 73% using this technique.

NFA graph theory continues to shape compiler architecture. From memory-efficient lexers to AI-enhanced optimization, its applications underscore the enduring relevance of automata theory in building robust language processors. As language specifications grow more complex, intelligent NFA implementations will remain indispensable for balancing performance with flexibility.