The evolution of simulation robot technology has revolutionized industries ranging from manufacturing to healthcare. At its core, this field integrates advanced computational models, sensory feedback systems, and adaptive algorithms to replicate human or biological behaviors in machines. This article delves into the foundational principles driving these innovations and their real-world applications.

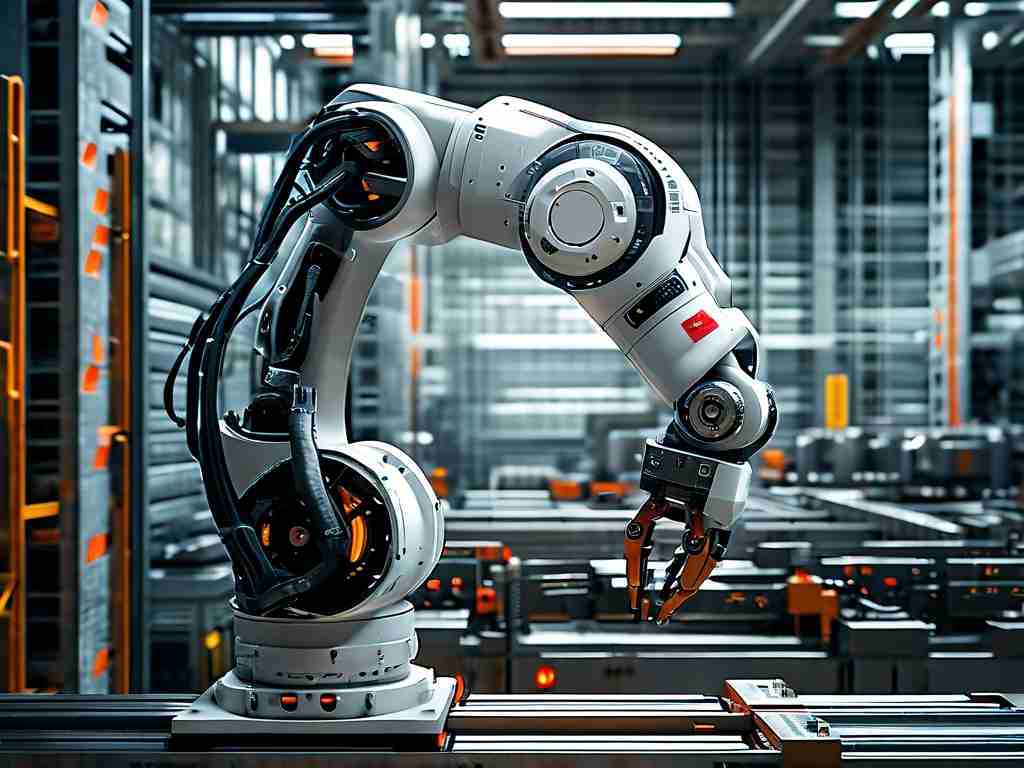

1. Computational Modeling and Dynamics

Simulation robots rely on precise mathematical models to emulate physical interactions. For instance, rigid-body dynamics equations such as Newton-Euler formulations enable robots to predict motion trajectories. These models account for variables like mass distribution, friction, and torque, allowing machines to perform tasks like lifting objects or walking without losing balance. A practical example is Boston Dynamics' Atlas robot, which uses real-time dynamic adjustments to navigate uneven terrain.
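The Newton-Euler idea can be shown with a minimal sketch that advances one rigid body by a single explicit-Euler time step. It assumes the body's principal axes line up with its body frame, so the inertia tensor is diagonal. It also ignores orientation tracking, contact, and friction. `RigidBody` and `newton_euler_step` are hypothetical names for illustration and do not come from any robotics library.

```python
from dataclasses import dataclass


@dataclass
class RigidBody:
    mass: float      # kg
    inertia: tuple   # principal moments (I1, I2, I3) in kg*m^2, body frame
    velocity: list   # linear velocity [vx, vy, vz] in m/s
    omega: list      # angular velocity [w1, w2, w3] in rad/s, body frame


def newton_euler_step(body, force, torque, dt):
    """Advance the body by one explicit-Euler step of the Newton-Euler equations."""
    m = body.mass
    i1, i2, i3 = body.inertia
    w1, w2, w3 = body.omega
    # Newton (translation): m * dv/dt = F
    body.velocity = [v + (f / m) * dt for v, f in zip(body.velocity, force)]
    # Euler (rotation, principal axes): I * dw/dt + w x (I w) = tau
    dw1 = (torque[0] - (i3 - i2) * w2 * w3) / i1
    dw2 = (torque[1] - (i1 - i3) * w3 * w1) / i2
    dw3 = (torque[2] - (i2 - i1) * w1 * w2) / i3
    body.omega = [w1 + dw1 * dt, w2 + dw2 * dt, w3 + dw3 * dt]
    return body


# Usage: a 2 kg body falls under gravity for 1 s while spinning about its third principal axis.
body = RigidBody(mass=2.0, inertia=(0.1, 0.2, 0.3),
                 velocity=[0.0, 0.0, 0.0], omega=[0.0, 0.0, 1.0])
for _ in range(100):
    newton_euler_step(body, force=(0.0, 0.0, -2.0 * 9.81), torque=(0.0, 0.0, 0.0), dt=0.01)
print(body.velocity, body.omega)
```

After 100 steps of 10 ms, the vertical velocity is about -9.81 m/s. A spin purely about one principal axis stays unchanged because the gyroscopic term vanishes. Explicit Euler keeps the sketch short, but practical simulators typically use more stable integrators.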

2. Sensory Feedback Integration

Modern simulation robots employ multisensor fusion to interpret environmental data. LiDAR, cameras, and inertial measurement units (IMUs) work in tandem to create a coherent spatial awareness system. Consider autonomous drones: by combining GPS data with visual SLAM (Simultaneous Localization and Mapping), they avoid obstacles while maintaining flight stability. This principle also applies to surgical robots, where force sensors provide haptic feedback to surgeons during minimally invasive procedures.
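A scalar Kalman filter makes the idea of fusion more concrete. It is a standard fusion technique, though not necessarily the one any particular drone uses. This minimal sketch tracks a single position coordinate and makes three assumptions. First, a velocity estimate `u` from odometry stands in for IMU or visual-SLAM motion estimates. Second, `z` is a noisy GPS fix. Third, the noise variances `q` and `r` are hypothetical tuning values.

```python
def kalman_1d_step(x, p, u, z, dt, q, r):
    """One predict/update cycle of a scalar Kalman filter.

    x, p : current position estimate and its variance
    u    : velocity from odometry (hypothetical stand-in for IMU/visual SLAM)
    z    : GPS position measurement
    q, r : process-noise and measurement-noise variances (hypothetical values)
    """
    # Predict: propagate the estimate with the motion model; uncertainty grows.
    x_pred = x + u * dt
    p_pred = p + q
    # Update: blend prediction and measurement, weighted by their variances.
    k = p_pred / (p_pred + r)          # Kalman gain in [0, 1]
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred         # fused variance is smaller than p_pred and r
    return x_new, p_new


x, p = 0.0, 1.0
for z in (1.1, 1.9, 3.2):  # hypothetical GPS fixes taken 1 s apart
    x, p = kalman_1d_step(x, p, u=1.0, z=z, dt=1.0, q=0.05, r=0.5)
    print(f"position ~ {x:.3f} m, variance ~ {p:.3f} m^2")
```

The gain `k` trusts whichever source is currently less uncertain, and the fused variance ends up below that of either input alone. The same principle scales up to the multi-dimensional filters used for full 3D pose estimation.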

3. Adaptive Control Algorithms

Machine learning techniques such as reinforcement learning (RL) enable robots to improve their own behavior through experience. For example, OpenAI’s robotic hand "Dactyl" learned to manipulate objects through trial and error in simulation, and the learned policy was then transferred to a physical hand. These algorithms update their parameters from reward feedback, which can improve robustness in unpredictable scenarios. Typical RL implementations build on Q-learning or policy-gradient methods, while proprietary systems such as Tesla’s Autopilot rely on customized neural networks.
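Tabular Q-learning shows the trial-and-error principle at the smallest possible scale. The following is a minimal sketch, not how Dactyl or any production system was trained. The environment is a hypothetical five-cell corridor where an agent earns a reward of 1 for reaching the right end. The learning rate, discount, and exploration values are illustrative assumptions.

```python
import random

N_STATES = 5          # cells 0..4; cell 4 is the terminal goal
ACTIONS = (-1, +1)    # action 0 moves left, action 1 moves right


def step(state, action):
    """Hypothetical corridor environment: walls at both ends, reward at the goal."""
    nxt = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]

    def greedy(s):
        # Break ties randomly so the untrained agent explores both directions.
        best = max(q[s])
        return rng.choice([a for a, v in enumerate(q[s]) if v == best])

    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            # Epsilon-greedy: mostly exploit, occasionally try a random action.
            a = rng.randrange(2) if rng.random() < epsilon else greedy(s)
            s2, r, done = step(s, a)
            # Q-learning target: reward plus discounted best value of the next state.
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
            if done:
                break
    return q


q = train()
policy = [max(range(2), key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should choose action 1 (move right) in every non-terminal cell. Policy-gradient methods instead adjust the parameters of a policy directly, which scales better to continuous control such as finger joints.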

4. Human-Robot Interaction (HRI) Design

Effective HRI requires natural communication channels. Voice interfaces, such as Amazon’s Alexa integrated into service robots, show how NLP (Natural Language Processing) connects spoken human commands to machine execution. Additionally, emotion-recognition software on robots such as SoftBank’s Pepper analyzes facial expressions to tailor responses, enhancing user engagement in retail and caregiving settings.
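The final step of that pipeline maps transcribed speech to a robot action. Real assistants use trained NLP models for this. The minimal sketch below swaps in simple keyword and regular-expression matching to show the idea of turning text into an `(intent, argument)` pair. The intent names and patterns are hypothetical.

```python
import re

# Hypothetical intent table: each pattern captures the command's argument.
INTENT_PATTERNS = [
    ("fetch", re.compile(r"\b(?:bring|fetch|get)(?: me)? (?:the |a )?(\w+)")),
    ("move", re.compile(r"\bgo to (?:the )?(\w+)")),
    ("stop", re.compile(r"\b(stop|halt)\b")),
]


def parse_command(text):
    """Return (intent, argument) for a transcribed command, or ("unknown", None)."""
    lowered = text.lower()
    for intent, pattern in INTENT_PATTERNS:
        match = pattern.search(lowered)
        if match:
            return intent, match.group(1)
    return "unknown", None


for utterance in ("Please bring me the cup", "Go to the kitchen", "Stop!"):
    print(utterance, "->", parse_command(utterance))
```

A keyword table is brittle with paraphrases, which is why production systems replace it with learned intent classifiers and slot extractors. The output contract of an intent plus arguments passed to the robot's task planner stays much the same.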

Challenges and Ethical Considerations

Despite progress, latency in sensor-actuator loops remains a bottleneck. A 2023 study by MIT highlighted that even a 10-millisecond delay can destabilize bipedal robots. Moreover, ethical debates persist regarding autonomous decision-making. Should a medical robot prioritize patient safety over procedural efficiency? Standards bodies such as the IEEE are drafting standards to address these dilemmas.
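The latency point can be illustrated with a toy model rather than a biped. The plant is a single integrator, `x' = u`, run at a hypothetical 1 kHz loop rate with proportional feedback computed from a sensor reading that is `delay_steps` samples old. The gain of 500 is an assumed value chosen to be comfortably stable without delay.

```python
def simulate(gain, delay_steps, dt=0.001, steps=1000, x0=1.0):
    """Toy plant x' = u with delayed proportional feedback u = -gain * x(t - delay)."""
    xs = [x0]
    for k in range(steps):
        measured = xs[max(k - delay_steps, 0)]  # stale sensor reading
        u = -gain * measured                    # controller acts on old data
        xs.append(xs[-1] + dt * u)              # explicit-Euler plant update
    return xs


no_delay = simulate(gain=500.0, delay_steps=0)   # error halves every step
delayed = simulate(gain=500.0, delay_steps=10)   # same gain, 10 ms of latency
print(abs(no_delay[-1]), max(abs(v) for v in delayed))
```

With no delay the error decays to essentially zero. With a 10 ms delay the same gain produces a growing oscillation, because the controller keeps correcting for a state the system has already left. Longer delays shrink the range of gains that remain stable, which is why low-latency sensing matters for balance control.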

Future Directions

Emerging trends include quantum computing for faster simulations and biohybrid systems combining organic tissues with mechanical components. Researchers at UC Berkeley recently prototyped a robot using lab-grown muscle cells, controlled via optogenetic stimulation. Such advancements could redefine rehabilitation devices or prosthetics.

In conclusion, simulation robot technology thrives on interdisciplinary collaboration, merging physics, computer science, and cognitive psychology. As algorithms grow more sophisticated and hardware miniaturizes, these machines will increasingly blur the line between artificial and organic intelligence, reshaping how humans interact with technology.