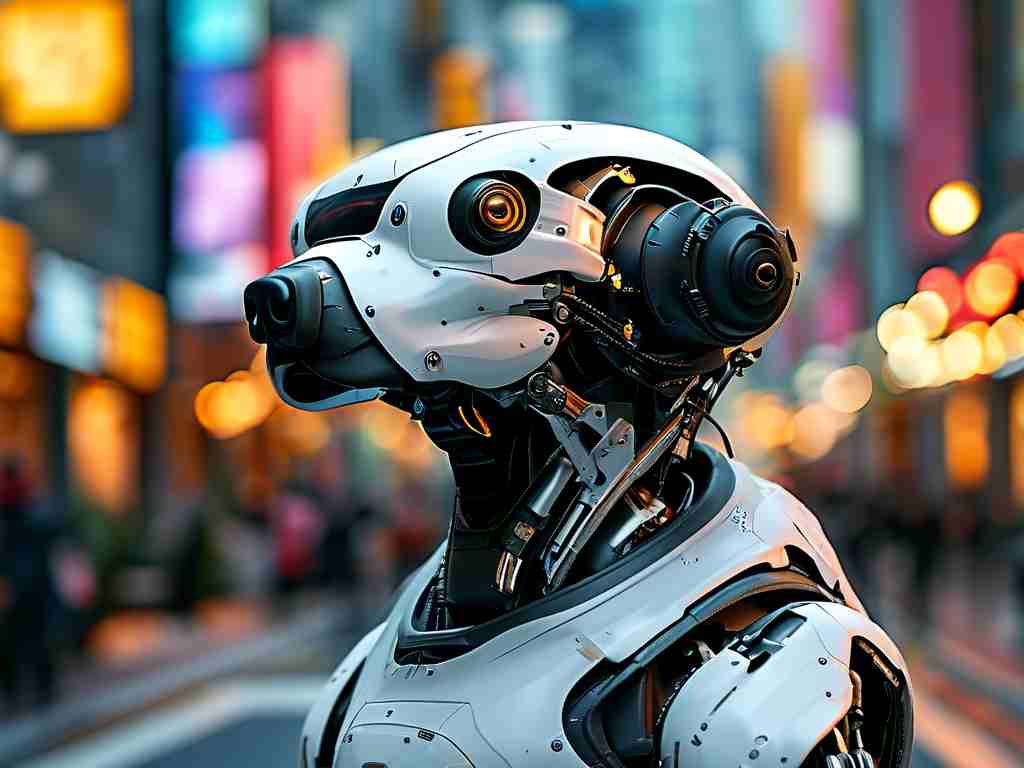

Recent breakthroughs in guide-dog robot development mark a watershed moment at the intersection of robotics and assistive technology. These autonomous devices, designed to replicate and extend the capabilities of traditional guide dogs, are redefining mobility for visually impaired people.

At the core of this revolution lies a multi-sensor fusion system combining LiDAR, millimeter-wave radar, and stereoscopic cameras. Unlike earlier prototypes limited to obstacle detection, next-gen models now process environmental data at 120 frames per second, enabling real-time analysis of complex urban landscapes. This allows the robots to identify not just static barriers like walls or parked vehicles but dynamic challenges such as erratic bicycle movements and construction zone rerouting.
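To make the fusion idea concrete, here is a minimal sketch of one common way to combine distance estimates from several sensors: an inverse-variance weighted average. The article does not say which fusion method these robots use, so the technique, the `RangeReading` type, and the variance figures are all illustrative assumptions. The only number taken from the text is the 120 frames per second rate, which leaves roughly 8.3 ms to process each frame.

```python
from dataclasses import dataclass
from typing import List, Tuple

FRAME_RATE_HZ = 120                        # processing rate quoted in the article
FRAME_BUDGET_MS = 1000.0 / FRAME_RATE_HZ   # time available per frame, about 8.33 ms


@dataclass
class RangeReading:
    """One distance estimate to the same obstacle (hypothetical structure)."""
    sensor: str        # e.g. "lidar", "radar", "stereo"
    distance_m: float  # estimated distance in meters
    variance: float    # assumed measurement variance in m^2 (illustrative values)


def fuse_ranges(readings: List[RangeReading]) -> Tuple[float, float]:
    """Combine readings with an inverse-variance weighted mean.

    Sensors with lower variance (higher confidence) get more weight.
    Returns the fused distance and the variance of the fused estimate.
    """
    if not readings:
        raise ValueError("at least one reading is required")
    weights = [1.0 / r.variance for r in readings]
    total_weight = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total_weight
    return fused, 1.0 / total_weight


# Example with made-up numbers: LiDAR is assumed to be the most precise sensor.
readings = [
    RangeReading("lidar", 4.0, 0.01),
    RangeReading("radar", 4.2, 0.04),
    RangeReading("stereo", 3.9, 0.05),
]
fused_distance, fused_variance = fuse_ranges(readings)
print(f"fused distance: {fused_distance:.2f} m, per-frame budget: {FRAME_BUDGET_MS:.2f} ms")
```

The fused estimate sits closest to the most trusted sensor, and its variance is lower than that of any single reading, which is the basic appeal of combining modalities.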

A standout feature is the adaptive haptic feedback interface. Engineers have developed a responsive handle system that translates navigational data into tactile cues – gentle pulses indicating directional changes, variable resistance signaling elevation shifts, and vibration patterns warning of overhead obstacles. This bidirectional communication mimics the nuanced partnership between human and service animal while eliminating the need for verbal commands in noisy environments.
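The mapping from navigation events to tactile cues can be sketched in a few lines. The three cue types below come from the description above. Everything else is a hypothetical choice made for illustration, not the engineers' actual design: the names (`HapticCommand`, `cue_for_turn`, and so on), the sign convention for turns, and the scaling constants.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Cue(Enum):
    PULSE = "pulse"            # directional change
    RESISTANCE = "resistance"  # elevation shift
    VIBRATION = "vibration"    # overhead obstacle


@dataclass
class HapticCommand:
    cue: Cue
    intensity: float  # normalized to the range 0.0..1.0
    side: str         # "left", "right", or "both"


def _clamp01(x: float) -> float:
    """Limit a value to the range 0.0..1.0."""
    return max(0.0, min(x, 1.0))


def cue_for_turn(angle_deg: float) -> HapticCommand:
    """Pulse on the side of the turn; negative angles mean left (assumed convention)."""
    side = "left" if angle_deg < 0 else "right"
    return HapticCommand(Cue.PULSE, _clamp01(abs(angle_deg) / 90.0), side)


def cue_for_elevation(delta_m: float) -> HapticCommand:
    """Handle resistance grows with the size of the step or slope (0.5 m = full scale, assumed)."""
    return HapticCommand(Cue.RESISTANCE, _clamp01(abs(delta_m) / 0.5), "both")


def cue_for_overhead(clearance_m: float,
                     user_height_m: float = 1.8,
                     margin_m: float = 0.2) -> Optional[HapticCommand]:
    """Vibrate only when an overhead obstacle is lower than the user's height plus a margin."""
    safe_clearance = user_height_m + margin_m
    if clearance_m >= safe_clearance:
        return None  # enough headroom, no warning needed
    return HapticCommand(Cue.VIBRATION, _clamp01((safe_clearance - clearance_m) / 0.5), "both")


print(cue_for_turn(-45.0))
print(cue_for_overhead(1.6))
```

Keeping each cue type on its own channel means a user can learn three distinct sensations rather than decode one overloaded signal.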

Machine learning breakthroughs address one of the field's most persistent challenges: predictive pathfinding. By training neural networks on over 8,000 hours of urban mobility scenarios, developers have created systems capable of anticipating pedestrian flow patterns and traffic light sequences. During field tests in Tokyo's Shinjuku Station, prototype units demonstrated 94% accuracy in predicting crowd movements 6 seconds in advance, significantly reducing collision risks.
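The systems described here rely on trained neural networks, which cannot be reproduced in a short example. As a much simpler stand-in, the sketch below uses a constant-velocity model to ask the same kind of question: within a six-second horizon, how close will a pedestrian come to the user? The function names and the sample positions and velocities are illustrative assumptions.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]

HORIZON_S = 6.0  # prediction horizon mentioned in the article


def time_of_closest_approach(rel_pos: Vec2, rel_vel: Vec2, horizon_s: float) -> float:
    """Time within [0, horizon_s] at which two constant-velocity agents are closest."""
    speed_sq = rel_vel[0] ** 2 + rel_vel[1] ** 2
    if speed_sq == 0.0:
        return 0.0  # no relative motion, so the distance never changes
    t = -(rel_pos[0] * rel_vel[0] + rel_pos[1] * rel_vel[1]) / speed_sq
    return max(0.0, min(t, horizon_s))


def min_separation(user_pos: Vec2, user_vel: Vec2,
                   ped_pos: Vec2, ped_vel: Vec2,
                   horizon_s: float = HORIZON_S) -> Tuple[float, float]:
    """Return (time, distance) of the closest approach within the horizon."""
    rel_pos = (ped_pos[0] - user_pos[0], ped_pos[1] - user_pos[1])
    rel_vel = (ped_vel[0] - user_vel[0], ped_vel[1] - user_vel[1])
    t = time_of_closest_approach(rel_pos, rel_vel, horizon_s)
    dx = rel_pos[0] + rel_vel[0] * t
    dy = rel_pos[1] + rel_vel[1] * t
    return t, math.hypot(dx, dy)


# User walks east at 1 m/s; a pedestrian ahead and to the side walks south at 1 m/s.
t_close, d_close = min_separation((0.0, 0.0), (1.0, 0.0), (6.0, 3.0), (0.0, -1.0))
print(f"closest approach after {t_close:.1f} s at {d_close:.2f} m")
```

A learned model would replace the straight-line extrapolation with predicted trajectories, but the downstream check (is the minimum separation below a safety threshold?) could stay the same.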

Materials science innovations improve durability without compromising mobility. A proprietary graphene-reinforced polymer exoskeleton offers impact resistance comparable to that of traditional guide dog harnesses while weighing 28% less than previous models. The lighter frame addresses critical concerns about device portability and user fatigue during extended use.

Ethical considerations remain at the forefront of development. Researchers emphasize that these robots are meant to complement rather than replace living guide dogs, offering an alternative particularly suited to individuals with allergies or space constraints. The technology also opens doors for populations underserved by animal-based solutions – a single charging station can theoretically support multiple units, dramatically reducing long-term care costs compared to training and maintaining biological service animals.

Commercialization efforts show promising momentum. Boston-based startup AuroGuide recently completed Phase III trials with 200 participants, reporting an 89% user satisfaction rate for grocery store navigation tasks. Meanwhile, Shenzhen-based VisioTech has integrated facial recognition modules to help users identify familiar individuals – a feature particularly valued by testers with progressive vision loss.

Regulatory bodies are racing to establish safety frameworks. The European Union's newly published "Autonomous Mobility Aid Standards" mandates redundant braking systems and emergency beacon protocols, while Japan's Ministry of Health has initiated certification programs for AI navigation accuracy. These developments suggest impending large-scale deployment – market analysts project a $2.3 billion global market for guide robotics by 2028.

Technical challenges persist in extreme weather performance and multi-floor navigation. However, recent collaborations with autonomous vehicle companies point to potential solutions. Tesla's leaked patent filings reveal adapted versions of its snow-mode traction algorithms being tested on quadruped guide robots, while Boston Dynamics' stair-climbing expertise is being leveraged through cross-industry partnerships.

The societal implications extend beyond individual users. Urban planners in Singapore are prototyping "smart curb" systems that communicate directly with guide robots, transmitting real-time updates about temporary road closures and public transit delays. This infrastructure-level integration points toward a future where assistive technologies actively reshape urban accessibility frameworks.
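What a curb-to-robot update might look like is easy to imagine in code. The article gives no details of the Singapore prototypes' actual protocol, so the JSON fields (`kind`, `location`, `detail`), the message types, and the function names below are entirely hypothetical. The sketch only illustrates the general pattern of validating an incoming message before acting on it.

```python
import json
from dataclasses import dataclass

# Hypothetical message kinds, based on the two update types the article mentions.
ALLOWED_KINDS = {"road_closure", "transit_delay"}


@dataclass
class CurbUpdate:
    kind: str      # one of ALLOWED_KINDS
    location: str  # human-readable location label
    detail: str    # free-text description, may be empty


def parse_curb_update(raw: str) -> CurbUpdate:
    """Parse and validate one JSON-encoded update; raise ValueError if it is malformed."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("update must be a JSON object")
    kind = data.get("kind")
    if kind not in ALLOWED_KINDS:
        raise ValueError(f"unknown update kind: {kind!r}")
    if "location" not in data:
        raise ValueError("update is missing a location")
    return CurbUpdate(kind=kind, location=str(data["location"]), detail=str(data.get("detail", "")))


def needs_reroute(update: CurbUpdate) -> bool:
    """In this sketch, only closures force a new route; delays are merely announced."""
    return update.kind == "road_closure"


message = '{"kind": "road_closure", "location": "Example Street crossing", "detail": "closed for repairs"}'
update = parse_curb_update(message)
print(update, needs_reroute(update))
```

Rejecting unknown or incomplete messages outright is a deliberately conservative choice: a navigation aid should ignore data it cannot interpret rather than guess.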

As these systems evolve, focus intensifies on human-centered design. Anthropologists now work alongside engineers to refine interaction paradigms – studying how varying pressure patterns in handgrips convey urgency levels or how auditory cues can be customized for cultural contexts. This multidisciplinary approach ensures the technology adapts to human needs rather than forcing behavioral changes on users.

While skeptics question the emotional value of mechanical companions compared to living dogs, developers counter with customizable personality modules. Early adopters can select from various "behavior profiles" – some preferring strictly functional guidance, others opting for occasional "playful" detours to park benches or coffee shops, mimicking a service dog's spontaneous interactions.

The road ahead remains complex, balancing technical ambitions with ethical responsibilities. Yet as prototype units begin transitional deployment in Madrid and San Francisco this quarter, one truth becomes evident: guide-dog robotics isn't merely creating smarter machines, but actively reimagining what independence means for the visually impaired community.